Google gave us a look at the future of its products and services today at its annual I/O developer conference in Mountain View, California.

There were a number of key announcements that will help shape the future of Android and other major platforms, including a new application called Google Lens, changes to Google Home, and some Google Photos additions. The mobile giant also presented a couple of features we should expect to see in the upcoming Android O update, but stopped short of showing any new hardware.

Here are the biggest announcements from the opening day keynote.

Android O

Android O still doesn’t have a name, but we at least know a few of the features it will launch with. The next version of Android will get Picture-in-Picture, an automatic multi-window feature that will let you keep watching a video or reading an article while you browse through the OS. It looks extremely similar to a feature already found on YouTube that moves the video you are watching to the bottom corner of your display as you browse for other content.

The next version of Android will come with Notification Dots, or alerts that appear as blobs above app icons when you have an unread notification. If you long-press the app icon you will be presented with a speech bubble preview of the notification. From there, you can go into the notification by pressing the preview. I’m not convinced this is any more convenient than swiping down from the top of the screen, but I’ll reserve judgement until I’ve tried it out.

https://twitter.com/CrazyNalin/status/864910369734746113

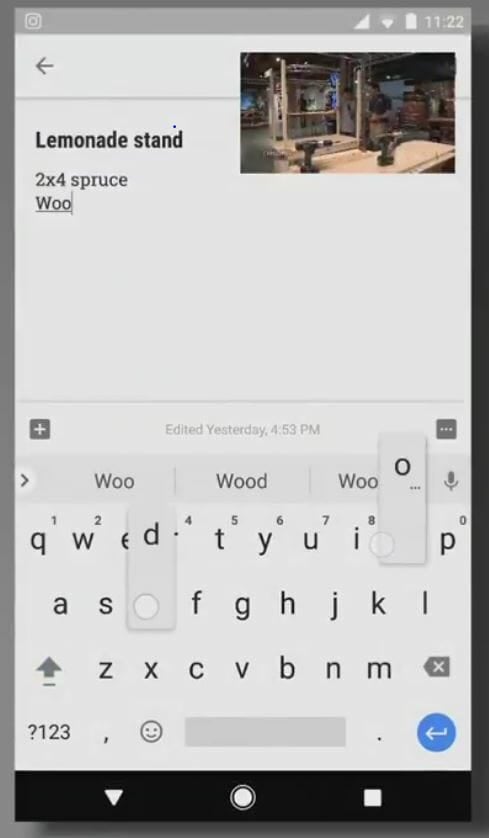

Copy and paste is also getting revamped. Google explained that, besides phone numbers, the most commonly copied text is the names of businesses, people, and places. Thanks to machine learning, pressing a single word will now highlight an entire phrase or title for copying. For example, if you press the street number of an address, the entire address will be selected.

Google also unveiled Android Go, a new streamlined version of Android for low-cost devices.

The first beta for Android O is available today at Android.com/beta.

Google.ai and Cloud TPU

This year’s I/O conference focused on using artificial intelligence to streamline processes. Google announced Google.ai, a new division created to accelerate progress in artificial intelligence, in part by having AI design new models itself instead of relying on human engineers. Google says the new division will help bring AI improvements to all of its products, especially Google Assistant and Google Search.

The company also announced its second-generation Tensor Processing Units (TPUs), custom chips designed to speed up machine learning workloads. That work is usually handled by CPUs and GPUs, but Google wanted a chip built specifically to process AI tasks faster.

“To put this into perspective, our new large-scale translation model takes a full day to train on 32 of the world’s best commercially available GPU’s—while one 1/8th of a TPU pod can do the job in an afternoon,” Google wrote in a statement.

Our new Cloud TPUs accelerate a wide range of machine learning workloads, including training and inference → https://t.co/aWvTVMn54Q #io17 pic.twitter.com/Bm5e8Gud6s

— Google (@Google) May 17, 2017

The first-generation TPU was used by AlphaGo, an AI that defeated the highest-ranked Go player in the world.

Google Home

Hands-free calling is coming to Google’s smart speaker. Users will be able to call any number in the U.S. and Canada for free by saying “OK Google, call X.” The speaker uses machine learning to determine which of its up to six supported users is speaking, so if you ask it to “call mom” you won’t awkwardly end up with your roommate’s mom on the other end of the line.

The hands-free feature will start rolling out in the “next few months.”

Home is also getting Proactive Assistance, so it can tell you when to leave for your next appointment or update you on your flight status without being prompted. This sounds like a great feature for forgetful people like me.

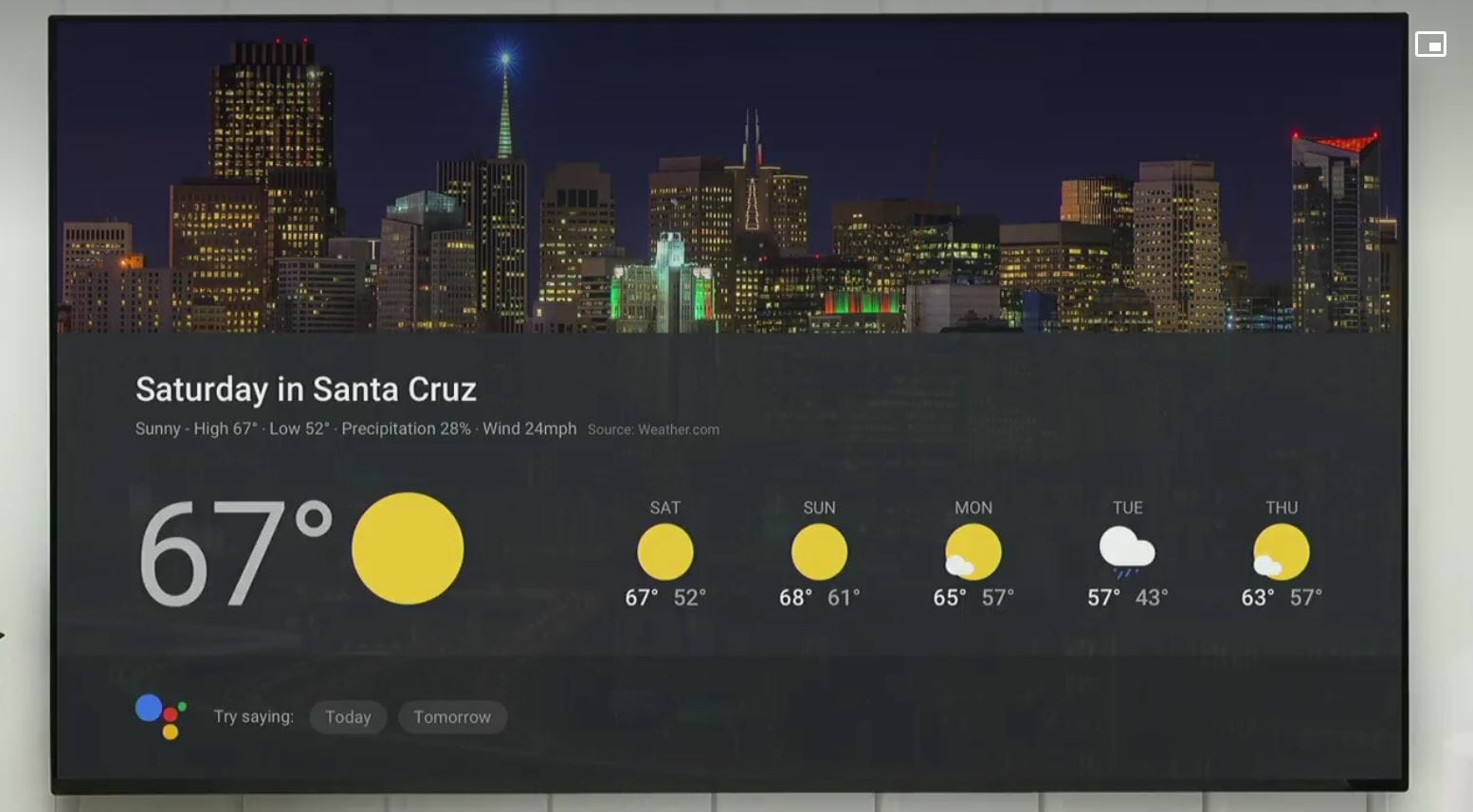

Spotify, SoundCloud, and Deezer were added to the list of music streaming services that are compatible with Google Home, and Chromecast will also be updated to start working with the speaker to show visual responses on your TV.

You can ask Home to pull up your calendar and it’ll use Chromecast to display it on your TV.

Google Photos

Google announced three new features for its Photos application.

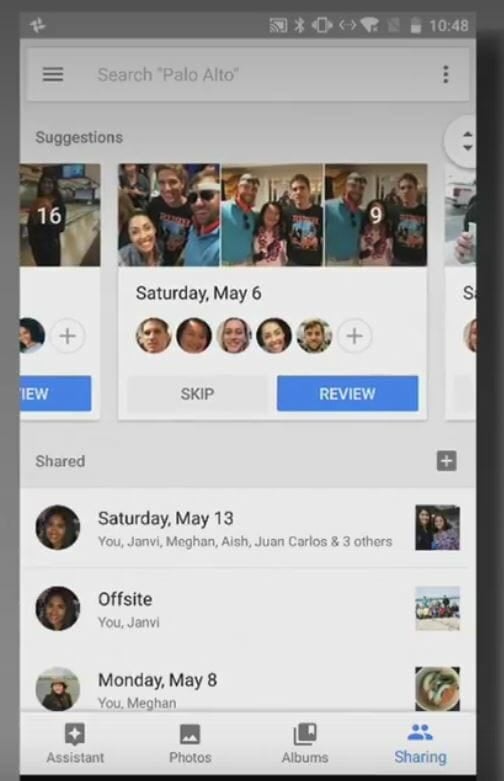

First is Suggested Sharing, which uses machine learning to suggest images worth sharing. For example, if someone takes photos of their friends at a bowling alley, Google Photos will determine which moments were important and suggest sending those images to the people who appear in them. It will then invite recipients to add their own photos and pool everything together in one shared album.

Google also announced Shared Libraries, which is basically Google Docs meets Google Photos. It allows users to share entire albums, or only images that include specific people. An example here would be a dad sharing every photo he takes of his daughter with his wife. Google uses machine learning to determine who is in the image, and then automatically sends all images that include that person to selected recipients. It sounds a little creepy, but it seemed to work well during the demo.

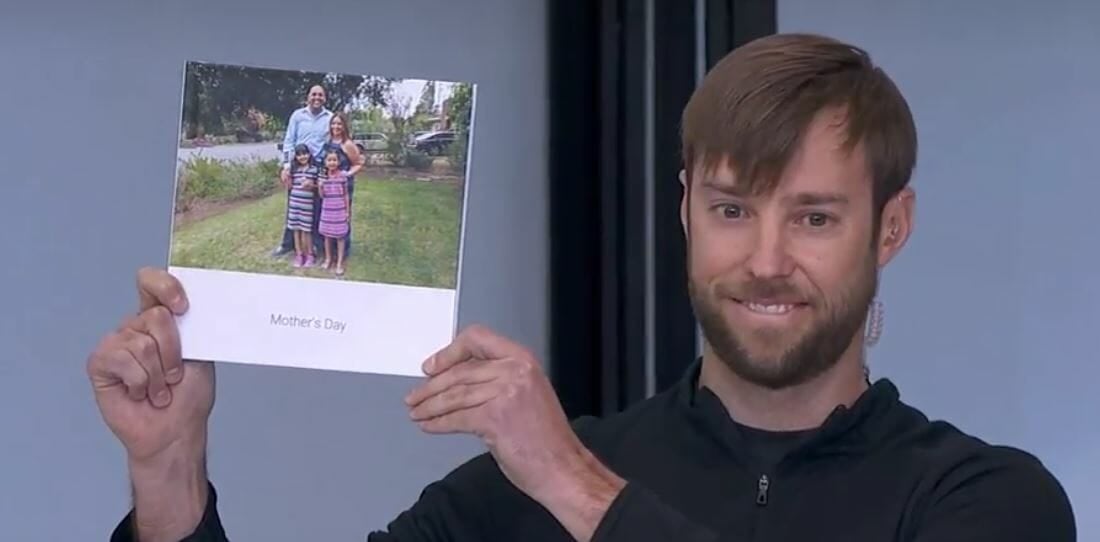

Photo Books is the third new launch from Google Photos. It lets users select a batch of images and quickly turn them into a physical photo book. A 20-page softcover book with 7-inch square pages will cost $10, while a hardcover edition with 9-inch square pages will run you $20. Machine learning simplifies picking images by choosing the 40 best photos from any large set.

Google Assistant

Google announced that its Assistant is now available on the iPhone.

The new Google Assistant SDK will allow device manufacturers to build Google Assistant into whatever they are making, from toys to drink-mixing robots. Expect devices labeled as having “Google Assistant built-in” from companies like Sony, JBL, LG, Panasonic, Anker, and Polk to start popping up.

Assistant will also roll out in new languages, including French, Brazilian Portuguese, Spanish, German, Italian, Korean, and Japanese, on both Android and iOS.

Google Lens

One of the biggest announcements from the event is a new application called Google Lens. It uses artificial intelligence to recognize images captured from your smartphone’s camera. The goal is to help users understand what they are looking at so they can take appropriate action. For example, if a user points their camera at a flower, Google Lens can tell them what flower they are looking at. If Google Lens is shown an image of a restaurant, it can pull up information about it, like its user rating, prices, and ambiance.

With Google Lens, your smartphone camera won’t just see what you see, but will also understand what you see to help you take action. #io17 pic.twitter.com/viOmWFjqk1

— Google (@Google) May 17, 2017

Google Lens can even sign in to a Wi-Fi network by scanning the password printed on the back of the router.

The new app will be compatible with Google Photos, which means all images you’ve already taken can be analyzed with Lens. It will be rolling out “later this year.”

Actions on Google

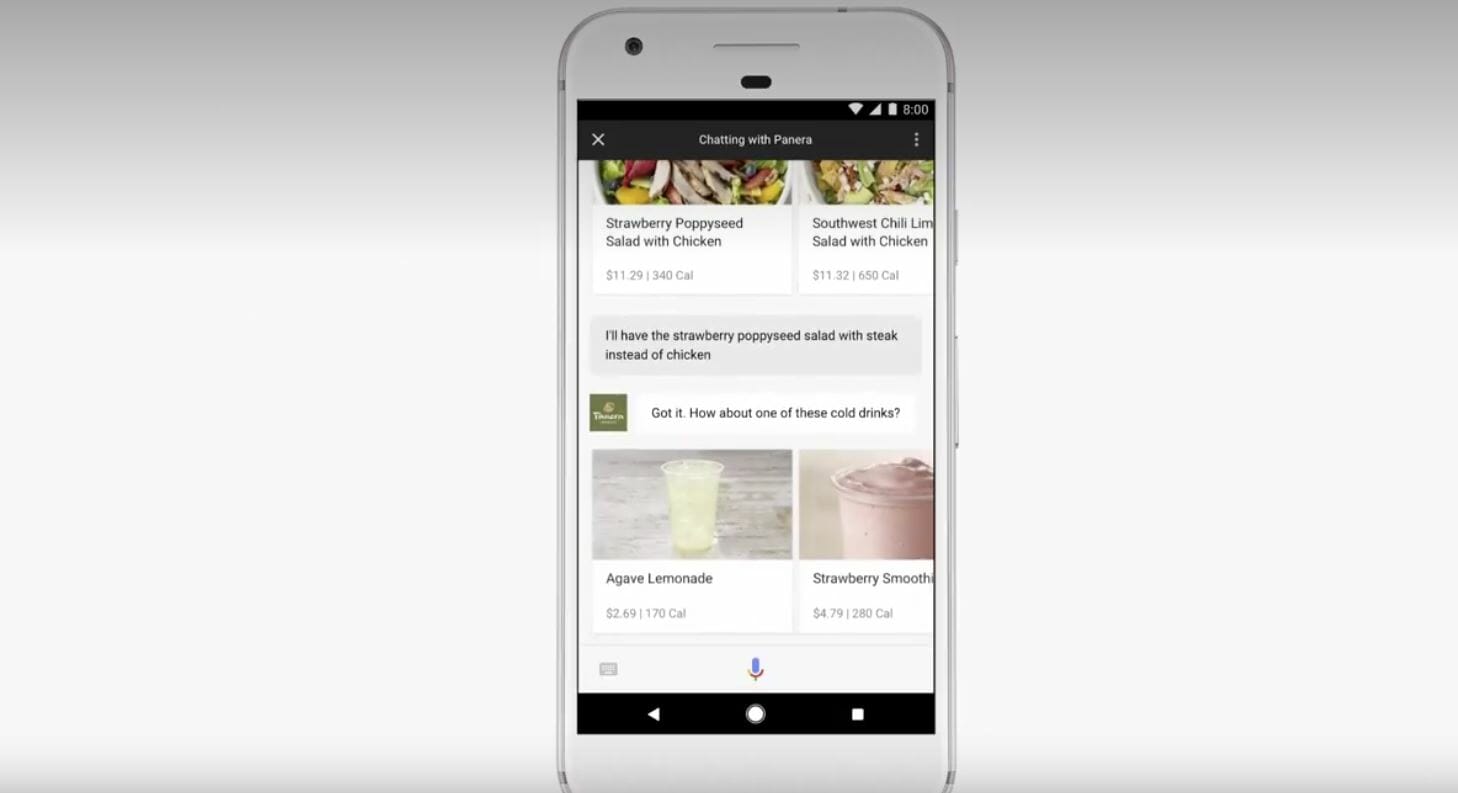

The Google Assistant is now available for third parties, like restaurants and movie theaters.

Google showed off how to use Actions to order from Panera Bread. Just say “I’d like delivery from Panera,” then follow the voice prompts to check out. You’ll be eating out of a bread bowl within minutes, without having to create an account or install another app.

YouTube

The YouTube TV app will now support 360-degree video that you can navigate with your remote. It sounds tedious, and not all that appealing on a flat display, but it’s now available for people to try.

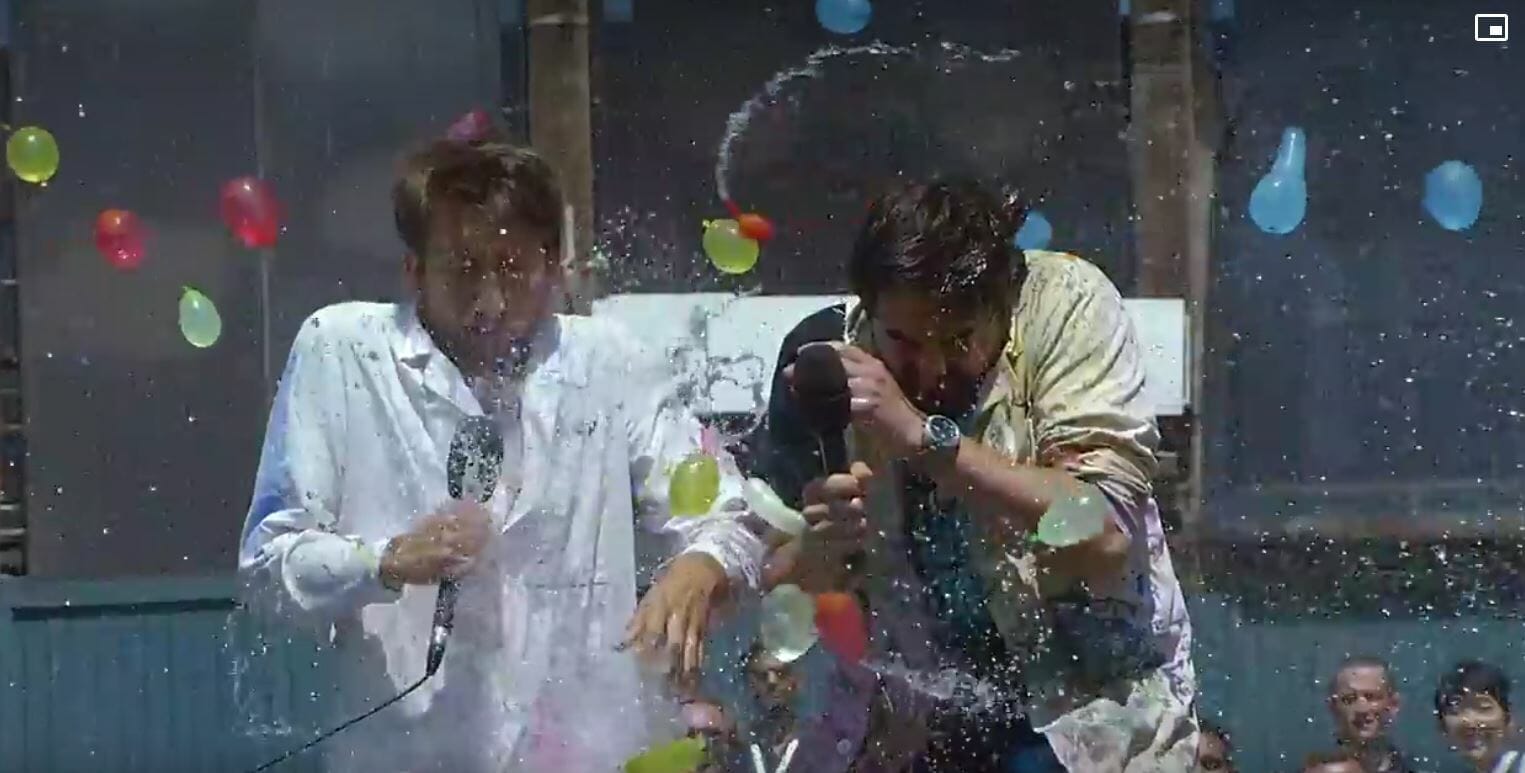

Super Chat is also being expanded so users can spend money to make things happen during a live YouTube video. The onstage demo featured the Slow Mo Guys, who said they would have one water balloon thrown at them for every dollar Super Chatted to them. Google sent them $500, so they had 500 balloons thrown at them. Previously, Super Chat only let users pay to have their comments highlighted in a live video’s chat box.

Virtual Reality

Google didn’t focus much on VR this year. LG will make a Daydream-ready flagship smartphone later this year, while Samsung will update its Galaxy S8 to be Daydream-compatible. HTC and Lenovo will release new standalone headsets by the end of 2017.

Gmail

Google will start using machine learning to scan emails and suggest three basic responses that users can edit and send. It’s similar to the quick replies you can send when you can’t answer a phone call, except this uses algorithms to read your email and suggest tailored responses.

Bringing our AI-first approach to more products, we’re introducing Smart Reply to 1 billion users in #Gmail → https://t.co/FKhOiBN41e #io17 pic.twitter.com/fF5GxGVMq6

— Google (@Google) May 17, 2017

Google for Jobs

The company also plans to release a new job search engine that will use AI to show users relevant listings. It will include a big blue “apply” button that sends out your application in one click.

With Google for Jobs, we’ll use machine learning to help people find jobs, and make it easier for companies to find talent. #io17 pic.twitter.com/OOZv945Nih

— Google (@Google) May 17, 2017

The feature will launch in the U.S. in the “coming months.”

Almost all of Google’s newly announced features will be available by the end of the year, so expect a lot more coverage in the next few months. For those of you disappointed by the lack of hardware at the event, we expect Google to announce new products, including a successor to the Google Pixel, around October.