A doctor’s usage of ChatGPT during a patient visit has social media users debating the future of medical treatment.

“Took my dad in to the doctor cus he sliced his finger with a knife and the doctor was using ChatGPT,” Mayank Jain (@mayankja1n) shared on X in early May. “Based on the chat history, it’s for every patient.”

The pictures he took showed that the doctor had input basic information (“left index finger laceration with kitchen knife surgical referral for wound care and sutures”) and had ChatGPT spit out a response that included a History of Present Illness (HPI), medical history, physical exam results, and a final Assessment and Plan.

It’s unclear whether this information was all based on previous input from the doctor or whether ChatGPT was simply filling in the blanks.

The screen also showed a series of previous chats that appeared to be mostly related to diagnoses, with one Bible-related chat thrown in for good measure.

Should doctors use ChatGPT?

As everyone reckons with how AI may be integrated into our daily lives, the prospect of medical professionals relying on it raises specific concerns.

Some have argued that AI has access to a vast wealth of knowledge, far beyond what any single person could retain on their own. In theory, this could lead to faster diagnoses, catch potentially dangerous drug interactions, or assist with other aspects of critical care.

Others have suggested that doctors using ChatGPT and similar tools to cut down on administrative tasks, such as filling out charts, is ultimately a good thing, since it could free them to spend more time actually seeing patients.

But even that kind of use can raise concerns under HIPAA, the U.S. federal law that protects patients’ health information.

“Physicians can opt out of having OpenAI use the information to train ChatGPT,” Christian Hetrick wrote in a 2023 article for USC. “But regardless of whether you’ve opted out, you’ve just violated HIPAA because the data has left the health system.”

This doesn’t apply in every scenario. Doctors who consult AI in general terms, or who keep identifying patient information out of their prompts, could stay within the bounds of what’s allowed. But as the world rapidly grows more reliant on AI, it’s hard to predict how much data will be handed over to these third-party systems, let alone how secure it will be.

Social media reacts

Because treating a cut finger doesn’t require deep medical knowledge, most people, including Jain, weren’t too bothered by this particular instance of AI use. However, the prospect of enduring long waits, hefty insurance premiums, and additional payments just to have a doctor check with ChatGPT (something we could all do at home) sparked frustration.

— Chuck Petras (@Chuck_Petras) May 5, 2025

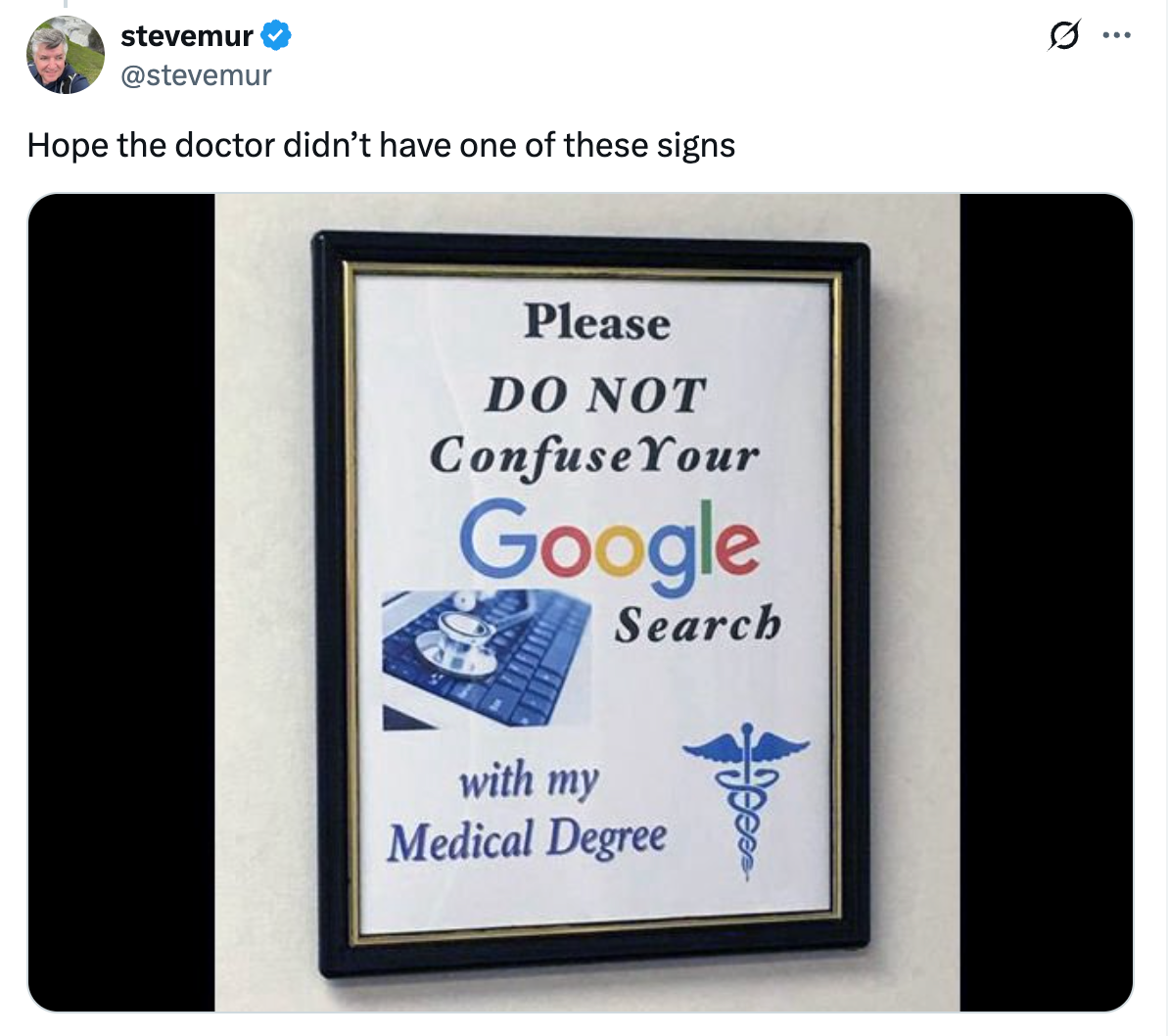

Some folks have given up on doing anything more than finding amusement in all of this chaos, and honestly, who can blame them?

This may not be the future we wanted, but maybe it’s the future we deserve.

Internet culture is chaotic—but we’ll break it down for you in one daily email. Sign up for the Daily Dot’s web_crawlr newsletter here. You’ll get the best (and worst) of the internet straight into your inbox.