Elon Musk’s Grok appears to be restricting its “undressing” feature to paid subscribers following massive backlash and legal threats. X users have been asking the chatbot to create sexualized images of women without their consent, and even of children, leading to accusations that Grok is generating child sexual abuse material (CSAM).

Many feel that restricting this function to paid subscribers only does not go nearly far enough.

Backlash over Grok’s “undressing” images escalates

Grok, X, and Musk have faced severe criticism in recent weeks as users increasingly requested that the chatbot remove clothing from photos people post of themselves or others.

On Sunday, Ashley St. Clair, who is widely assumed to be the mother of one of Musk’s many children, reported that “Grok is now undressing photos of me as a child.”

“This is a website where the owner says to post photos of your children,” she wrote. “I really don’t care if people want to call me ‘scorned’ this is objectively horrifying, illegal, and if it has happened to anybody else, DM me. I got time.”

In late December, after Grok generated a sexualized image of two underage girls, a user prompted the chatbot to apologize. It obeyed, generating text that admitted to “potentially” violating U.S. law. It also claimed that “xAI is reviewing to prevent future issues.”

French ministers have already reported this issue to prosecutors, and India’s IT ministry warned the company to submit an action report on the problem within three days. What Musk’s companies apparently came up with probably isn’t going to cut it.

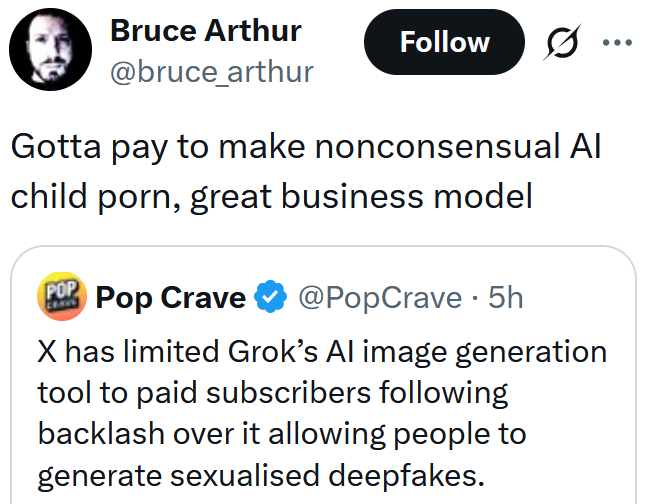

On Friday, X users started to notice that Grok now responds to any image generation request by saying the feature is “currently limited to paying subscribers.” X has yet to confirm or deny whether this is related to the chatbot’s CSAM generation.

Paul Bouchaud, lead researcher of the algorithm-investigating nonprofit AI Forensics, told WIRED that subscribers can still undress non-consenting photo subjects.

“We observe the same kind of prompt, we observe the same kind of outcome, just fewer than before,” he said. “The model can continue to generate bikini [images].”

WIRED further reports that it could still access the function for free in Grok’s standalone app.

“They decided to make money off of it”

Those who think CSAM and using anyone as a pornographic subject without their consent are bad seem to feel that this move doesn’t go far enough. In fact, monetizing the exploitative material might be worse.

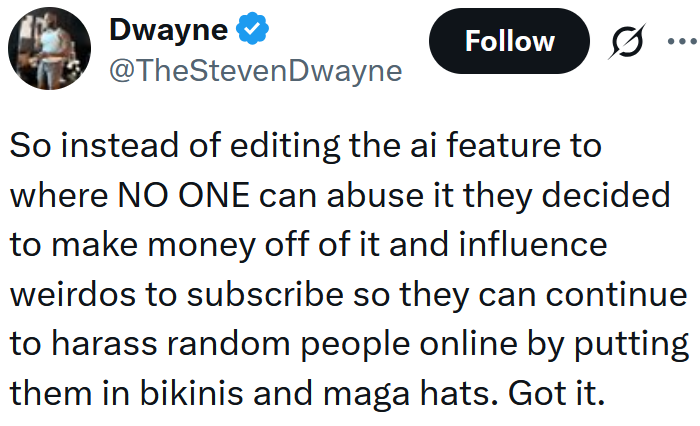

“So instead of editing the ai feature to where NO ONE can abuse it they decided to make money off of it and influence weirdos to subscribe so they can continue to harass random people online by putting them in bikinis and maga hats,” @TheStevenDwayne summarized. “Got it.”

“Ah yes, instead of banning the ability to do that outright, let’s turn it into a paid feature instead to increase revenue,” wrote @arianaunext.

This may have only accelerated calls for Musk’s arrest.

“The richest man in the world is giving people the ability to sexualize anybody through a paywall,” wrote @vidsthatgohard over a clip of a smoking police officer.
