Facebook is removing doubt about what content is allowed and what is forbidden on its platform by publishing its internal guidelines for the first time. The 27-page document includes the blueprint moderators use to determine whether posts that include violence, harassment, abuse, or pornography should be removed.

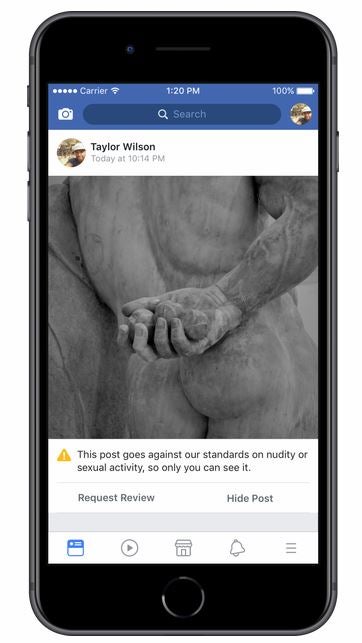

The social network is also adding the ability for users to appeal a decision they feel may have been wrongly enforced. Now, you can appeal to have deleted photos, videos, and posts put back on your timeline. Once a request is submitted, reviewers have 24 hours to determine whether it should be restored.

While Facebook has previously provided a watered-down version of the guidelines moderators use to police content, it has never given users this level of transparency. Rather than overhaul the standards, which have gotten Facebook into a good deal of trouble over the years, the company has instead chosen to update the publicly available version with a greater level of explanation. It now includes detailed outlines on how to deal with bullying, threats, nudity, fake news, and spam, among other things. The rules will be translated into 40 languages for the public.

“The vast majority of people who come to Facebook come for very good reasons,” Monika Bickert, vice president of global product management at Facebook, said in a blog post. “But we know there will always be people who will try to post abusive content or engage in abusive behavior. This is our way of saying these things are not tolerated. Report them to us, and we’ll remove them.”

The expanded guidelines may be lengthy, but they’re broken up into six categories: Violence and criminal behavior, safety, objectionable content, integrity and authenticity, respecting intellectual property, and content-related requests. Within those categories are subcategories, like “hate speech” and “graphic violence” found within “objectionable content.”

The document gives users a better understanding of the nuances reviewers must recognize to make correct decisions on content that may not be clearly defined in the rules. For example, Facebook does not allow violence committed against a human or animal that includes captions with “enjoyment of suffering” or “remarks that speak positively of violence.” But it will allow, under a warning screen and age restriction, photos of “throat-slitting” and “victims of cannibalism.” Similarly, Facebook filters hate speech into three tiers, each with a set of specific prompts to watch out for.

The social network has been caught up in a whirlwind of controversy since the start of the year. Earlier this month, CEO Mark Zuckerberg was forced to stand before Congress to address a privacy scandal that saw the personal information of 87 million people exploited.

https://www.youtube.com/watch?v=gvc8t4MuStM

During the hearings, Zuckerberg was asked whether the site purposefully censors Republican viewpoints. Zuckerberg admitted an “enforcement error” led to banning conservative blogging duo Diamond and Silk, who will testify against Facebook later this week. Zuckerberg said he is concerned about the problem and is actively working to rid Facebook’s review process of political bias.

Facebook’s failure to properly clean its site of abuse and hate speech led the United Nations to blame it for inciting a possible genocide against the Rohingya minority in Myanmar. A New York Times report from earlier this week described real-world attacks in Indonesia, India, and Mexico provoked by hate speech and fake news that spread through News Feeds. Sri Lanka took a stand against social media by banning Facebook, WhatsApp, and Instagram in an attempt to prevent further violence against Muslim minorities.

Facebook employs 7,500 people, 40 percent more than last year, to review content and determine whether it should be allowed on the site. The company will double its 10,000-person safety, security, and product and community operation teams by the end of this year. It conducts weekly audits to review its decisions but recognizes mistakes are inevitable.

“Another challenge is accurately applying our policies to the content that has been flagged to us,” Bickert wrote. “In some cases, we make mistakes because our policies are not sufficiently clear to our content reviewers; when that’s the case, we work to fill those gaps. More often than not, however, we make mistakes because our processes involve people, and people are fallible.”

Facebook claims it posted its complete set of internal rules to provide clarity and receive feedback from users for improvements. Bickert told reporters at Facebook’s Menlo Park headquarters last week that she fears terrorist or hate groups could game the rulebook so their posts avoid detection by moderators, but said transparency outweighs those concerns.

“I think we’re going to learn from that feedback,” she said. “This is not a self-congratulatory exercise. This is an exercise of saying, here’s where we draw the lines, and we understand that people in the world may see these issues differently. And we want to hear about that, so we can build that into our process.”

In May, Facebook will launch “Facebook Forums: Community Standards” in Germany, France, the U.K., India, Singapore, and the U.S. to ask for feedback about community standards directly from users. You can read the updated community standards on its website.