Donald Trump loves to tweet and he often claims he’s “number one” on Facebook.

It’s clear the man sees social media as key to his reelection strategy. And that includes willful disinformation. Much of what Trump posts or amplifies on social media is false or misleading.

And with deepfake technology becoming more convincing and easier to use, it's quite possible we will soon see deepfakes of Trump's political opponents appearing in his social media feeds. Until now, it wasn't clear how social media companies would work to stop their spread.

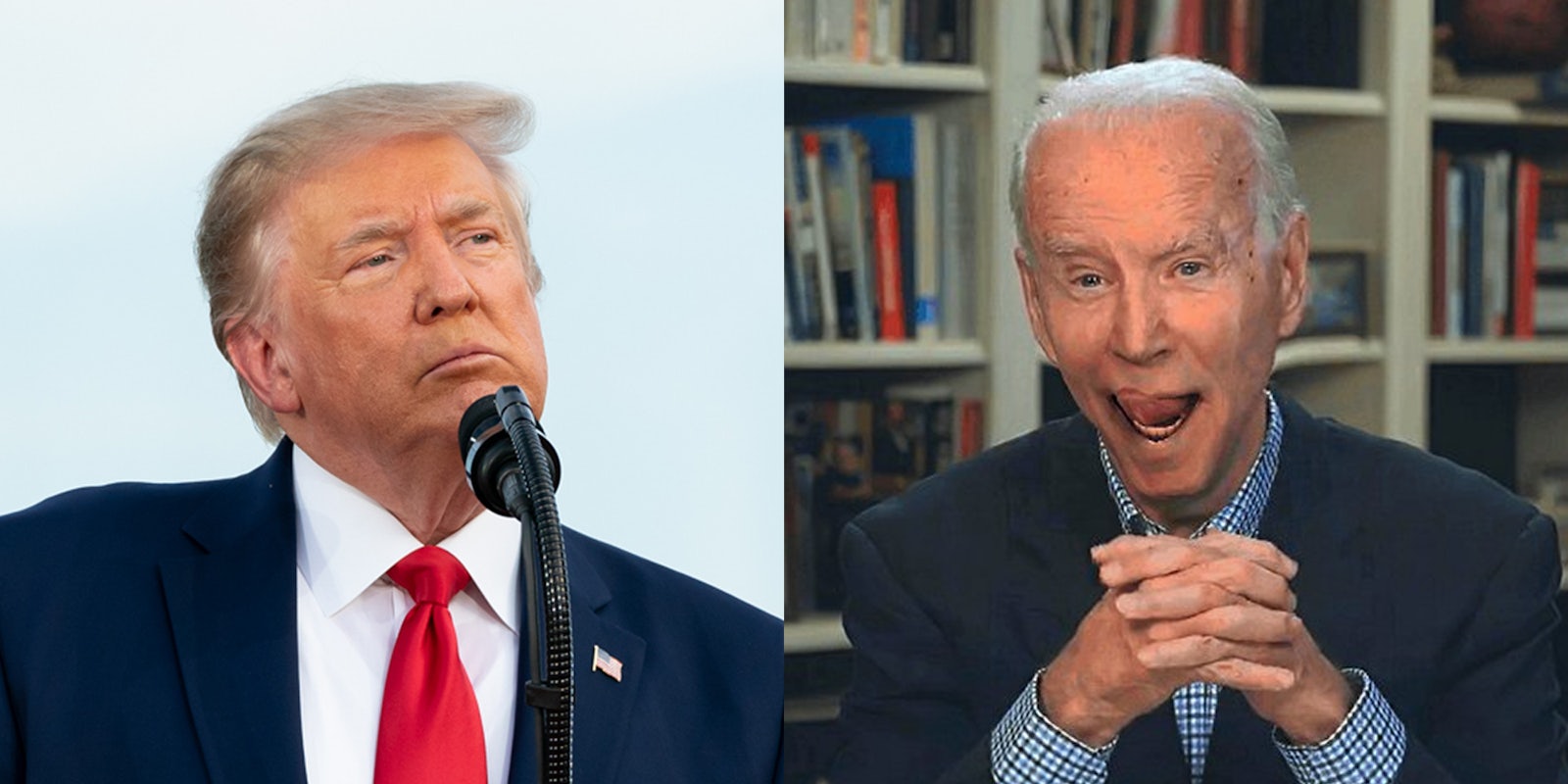

Trump is already pushing these limits before the campaign has even shifted into high gear. He has posted a manipulated GIF that made Biden look like he was creepily sticking his tongue out. Some called it a deepfake, but it doesn't actually qualify as one.

But more is probably coming.

And deepfakes present a maddening dilemma.

Deepfakes are generally described as videos made with artificial intelligence to make a known person appear to say or do something they never actually said or did.

Think Photoshop, but for videos.

Many people first learned about deepfakes in 2018, when comedian Jordan Peele used the technology to turn himself into former President Barack Obama in a fabricated video that humorously had Obama say, "President Trump is a total and complete dipshit."

But Trump has a much wider platform, and it's not hard to imagine him posting or retweeting a deepfake of presidential candidate Joe Biden appearing to say something terrible.

What would happen next would depend on the social media platform where it was shared. Would they delete it? Would they warn users that it’s not a real video? We asked.

Facebook is known for allowing politicians to lie in their ads, so we wondered whether it would allow Trump to post a deepfake in an ad.

A spokesperson replied that regardless of whether the deepfake was shared as a regular post or an ad, it would be removed, as the platform’s community guidelines don’t allow users to post videos that “would likely mislead an average person to believe that a subject of the video said words that they did not say.”

“The policy is part of our community standards, so it’s content we remove off the platform, regardless of the speaker and regardless of whether it’s an ad,” the spokesperson says.

As for Twitter, a spokesperson replied that the deepfake would not be removed. Instead, a warning label would be placed on the video to inform users that it’s fraudulent.

“We would likely label tweets with deepfakes/manipulated media rather than remove them,” the spokesperson said.

Whether or not you agree with these approaches, it won't be easy for social media companies to deal with deepfakes going forward. Any deepfake that is taken down can spread elsewhere on the internet and may even be reposted on the platform that originally removed it.

It's a cat-and-mouse game. And if a social media company is too slow to remove or flag a video, it may have already had its intended effect by the time it's taken down.

John Villasenor, a professor of electrical engineering, law, and public policy at UCLA who has researched deepfakes extensively, tells the Daily Dot that many people who see such a video may never find out that what they saw was fake. That means a deepfake could influence a large audience while it remains online and hasn't yet been debunked.

An MIT study from a couple of years ago found that fake news spreads much faster than real news.

“Let’s say you have a highly influential deepfake that’s released two weeks before the election, and let’s say within hours the person portrayed files a complaint,” Villasenor says. “If you have it seen by two million people, in whatever time it is before it gets taken down, then you had an impact. If two million people saw it, then maybe only 500,000 will see the subsequent announcement that it was actually fake, so you still have the potential for a very substantive impact.”

Villasenor says deepfake technology has become "much more accessible" in the past year or two, and anyone who wants a deepfake made can get one. And he says once a deepfake is out there, it's almost impossible to get rid of it for good.

Hao Li, a deepfake pioneer and CEO of Pinscreen, told the Daily Dot that deepfakes have improved significantly over time. It was once relatively easy to tell that a deepfake wasn't a real video, but that is getting much harder.

“It’s really difficult to know what’s real and what isn’t, but the problem is the majority of people wouldn’t know,” Li says.

Li says it wouldn't be hard to make a deepfake of Joe Biden.

“You can basically have an organized group that just puts some effort into production, and you just need to find a double of Joe Biden and then deepfake Joe Biden’s face on them and make him say things he never said,” Li says.

Li adds that anything Trump shares on social media reaches a “huge audience,” so a lot of people would see the deepfake very quickly.

He says the technology is getting so good that a talented creator could likely produce a deepfake that would evade the artificial intelligence tools social media companies are developing to detect these videos. That detection technology is good and keeps improving, but deepfake creators are simultaneously getting better at finding ways to evade it.

Unfortunately, we'll only see how this really plays out when it eventually happens.
