A disability advocate says he asked an AI image generator to create photos of “an autistic person” 148 times, and with the exception of two photos, all the images showed white men.

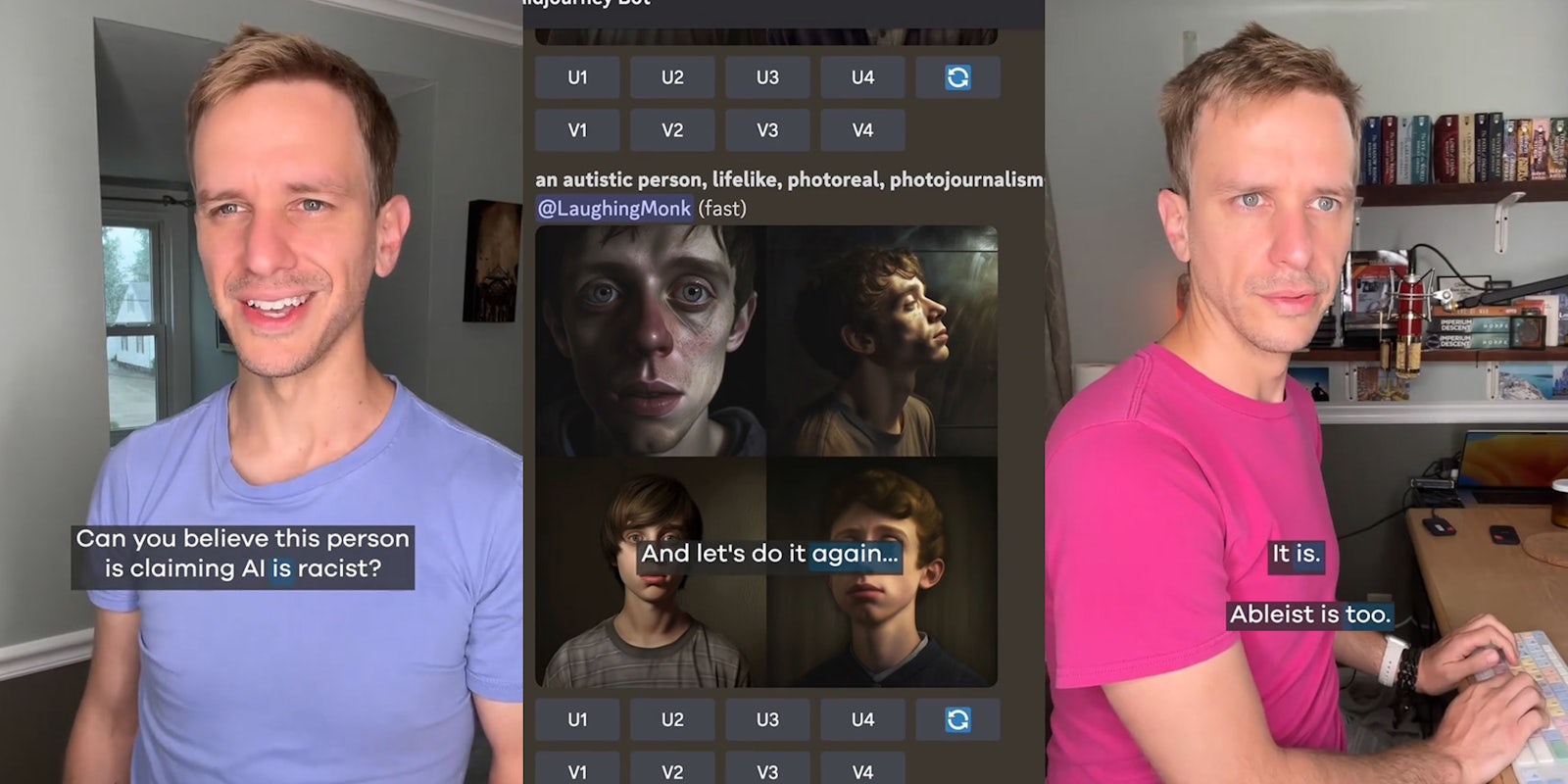

In a TikTok posted on Monday, Jeremy Davis (@jeremyandrewdavis) says that artificial intelligence is not only racist but ableist as well. He shows images that AI generated from the prompt “an autistic person” combined with the parameters “lifelike,” “photoreal,” and “photojournalism.”

Nearly all of the photos show thin, young, white men. Only twice did the software produce photos of women.

“Do you see any diversity? Say, race, gender, age?” Davis says in his TikTok. “Plus most were skinny, and weirdly enough, a disproportionate abundance had red hair.”

Davis said that across all 148 attempts, the images never showed anyone smiling and were “moody, melancholy, depressing.”

He also says that he used “lifelike,” “photoreal,” and “photojournalism” to avoid receiving cartoon-style images or ones with puzzle pieces, the symbol of Autism Speaks, a nonprofit that many autistic people feel only increases stigma against them.

“AI is racist, sexist, ageist, and not only ableist,” Davis says, “but uses harmful puzzle imagery from hate groups like Autism Speaks.”

The reason for all of those biases, Davis says, is that what we think of as AI is actually just machine learning, which works with patterns, looks for majorities and similarities, “and then excludes outliers,” thus amplifying stereotypes.

“In order for AI to be an effective tool, you have to be smarter than the AI,” Davis says. “We must be aware of its limitations and pitfalls.”


It is fairly well known within the tech community that AI can perpetuate ableism. According to MIT Technology Review, while many have focused on combating AI’s racism and sexism, there is still work to be done on its ableism.

And that ableism has consequences. An example from a Pulitzer Center project on artificial intelligence and ableism posits that if AI is used in hiring and cannot understand someone with a speech impediment, it could discriminate against that person based on their disability.

“Because machine-learning systems—they learn norms,” Shari Trewin, a researcher on IBM’s accessibility leadership team, told MIT Technology Review. “They optimize for norms and don’t treat outliers in any special way. But oftentimes people with disabilities don’t fit the norm.”

Trewin says that a data set’s lack of disability diversity is harder to correct than its gender bias. Echoing Davis’ point about being smarter than the AI, she suggests combining artificial intelligence with explicit rules that mandate diversity.

A commenter on Davis’ video also stressed the importance of having diverse researchers develop AI.

“All software encodes the [biases] of its engineers,” @draethdarkstar wrote. “[Machine learning] also encodes the biases of its dataset. Diverse researchers are essential.”