Abbie Richards (@tofology) is a researcher in the growing field of extremism and disinformation on TikTok, and her latest work, along with co-author Olivia Little, demonstrates just how easy it is for the platform to radicalize young people into the far right—and how transphobia is a gateway hatred for antisemitism, racism, and neo-Nazi movements.

Aware of the well-documented connection between the far right and transphobia, Richards and her colleagues wanted to see if engaging with transphobic content on the platform would trigger the algorithm into showing the user additional far-right content. In other words, they set out to test the premise that transphobia is a “gateway prejudice that leads to broader far-right radicalization.”

The answer was a resounding yes. And quickly.

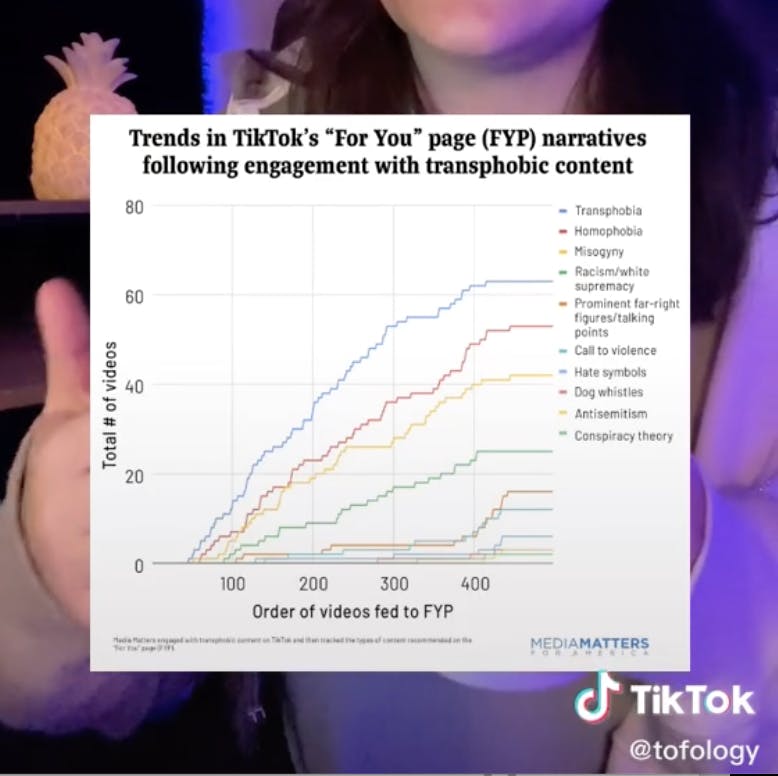

Richards began by creating a new account and following 14 users known for their transphobic content. She then scrolled through her For You page (FYP), only interacting with videos featuring transphobia. The more transphobic content Richards engaged with, the more content featuring other prejudices—including racism, misogyny, and homophobia—turned up on the test account.

Richards didn’t even need to interact with videos featuring these new subjects for them to keep appearing on the test account’s FYP—the more transphobic videos she interacted with, the more extreme the other videos appearing on her page became. And by video 141, explicit Nazi symbols began to appear on her feed.

That may seem like a lot of videos, but the average time spent viewing a TikTok is around 20 seconds. At that pace, reaching video 400, where the graph shows a sudden spike in extreme far-right content, including explicit calls to violence, would take roughly two hours' worth of bored scrolling. “A user could feasibly download the app at breakfast and be fed the overtly white supremacist neo-Nazi content before lunch,” Richards said.
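The back-of-the-envelope math behind that estimate can be sketched in a few lines. This is only an illustration: it assumes every video runs the roughly 20-second average cited above and ignores skipped or rewatched clips, and the function name `scroll_minutes` is made up for this example rather than taken from the study.

```python
def scroll_minutes(videos: int, seconds_per_video: float = 20.0) -> float:
    """Minutes needed to watch `videos` clips at a fixed average length.

    The 20-second default is the article's approximate average, used here
    as a simplifying assumption.
    """
    return videos * seconds_per_video / 60


# Milestones mentioned in the article: first explicit Nazi symbols (141)
# and the spike in extreme far-right content (400).
for n in (141, 400):
    print(f"video {n}: about {scroll_minutes(n):.0f} minutes")
```

Under those assumptions, video 141 arrives after about 47 minutes and video 400 after about 133 minutes, or a little over two hours.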

TikTok isn’t the only app with a problem like this. Facebook is notorious for having radicalized your aunt and for being filled with QAnon and anti-vax conspiracies. YouTube, an app similar to TikTok in a lot of ways, had much the same problem with its algorithm directing people to increasingly extreme far-right content. While YouTube’s algorithm took around 18 months to reach the full extremes of what the alt-right had to offer, TikTok appears to be able to do it in a matter of hours.

The Daily Dot has contacted Richards and will update this article if she responds.
