Instagram is still hosting accounts with explicitly anti-Black or white supremacist content. Experts say even dormant social media accounts that display hate speech are dangerous.

After the Daily Dot reported in October that 50 Instagram accounts used some iteration of “Black Lives Don’t Matter” in usernames or content, some depicting photos of Black men in nooses or racist caricatures, all but two accounts were removed.

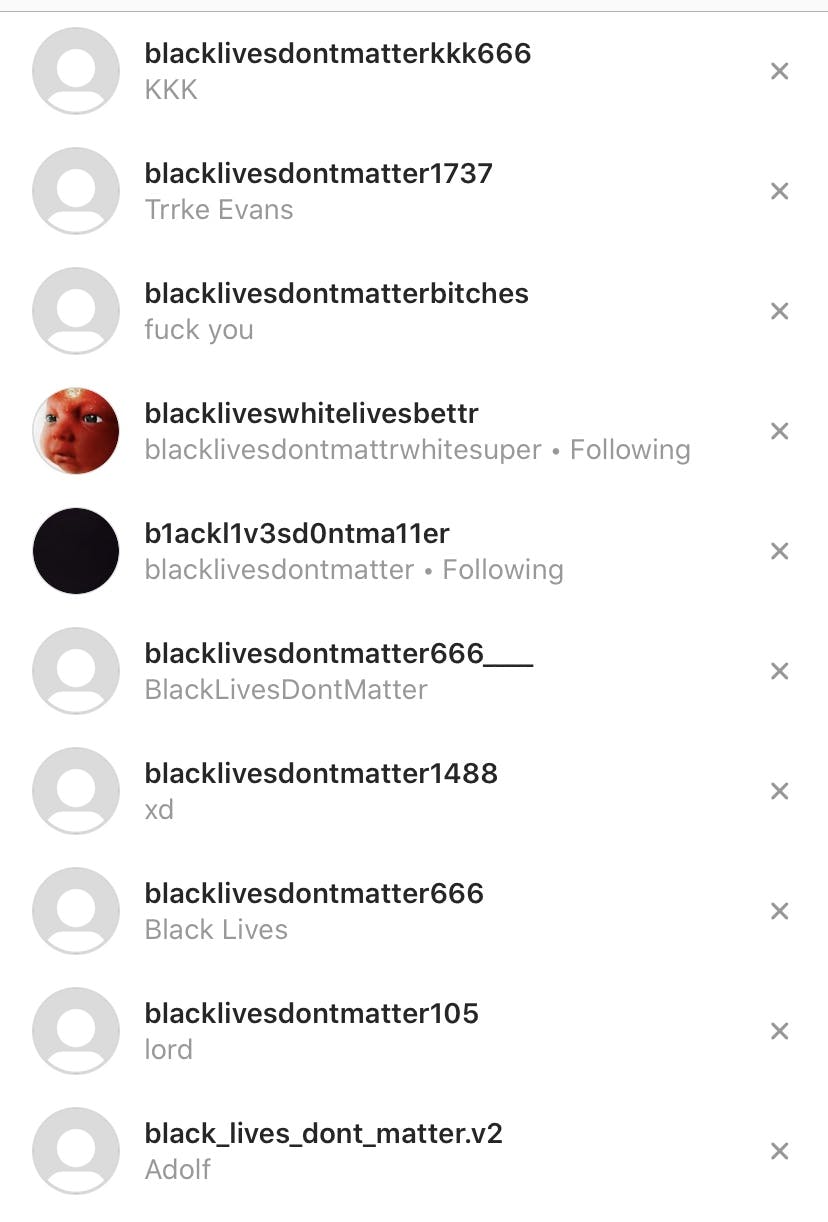

However, a slew of new racist accounts—almost all of them with some variation of “Black Lives Don’t Matter” in the handle or the description—remain on the platform. On Wednesday, the Daily Dot counted at least 28 racist accounts. On Friday, after the Daily Dot reached out to Instagram, seven accounts remained on the platform.

The ones that remain don’t have explicit or violent photos but have other anti-Black content. At least five of them reference the Ku Klux Klan in the account name or description.

A web of racism

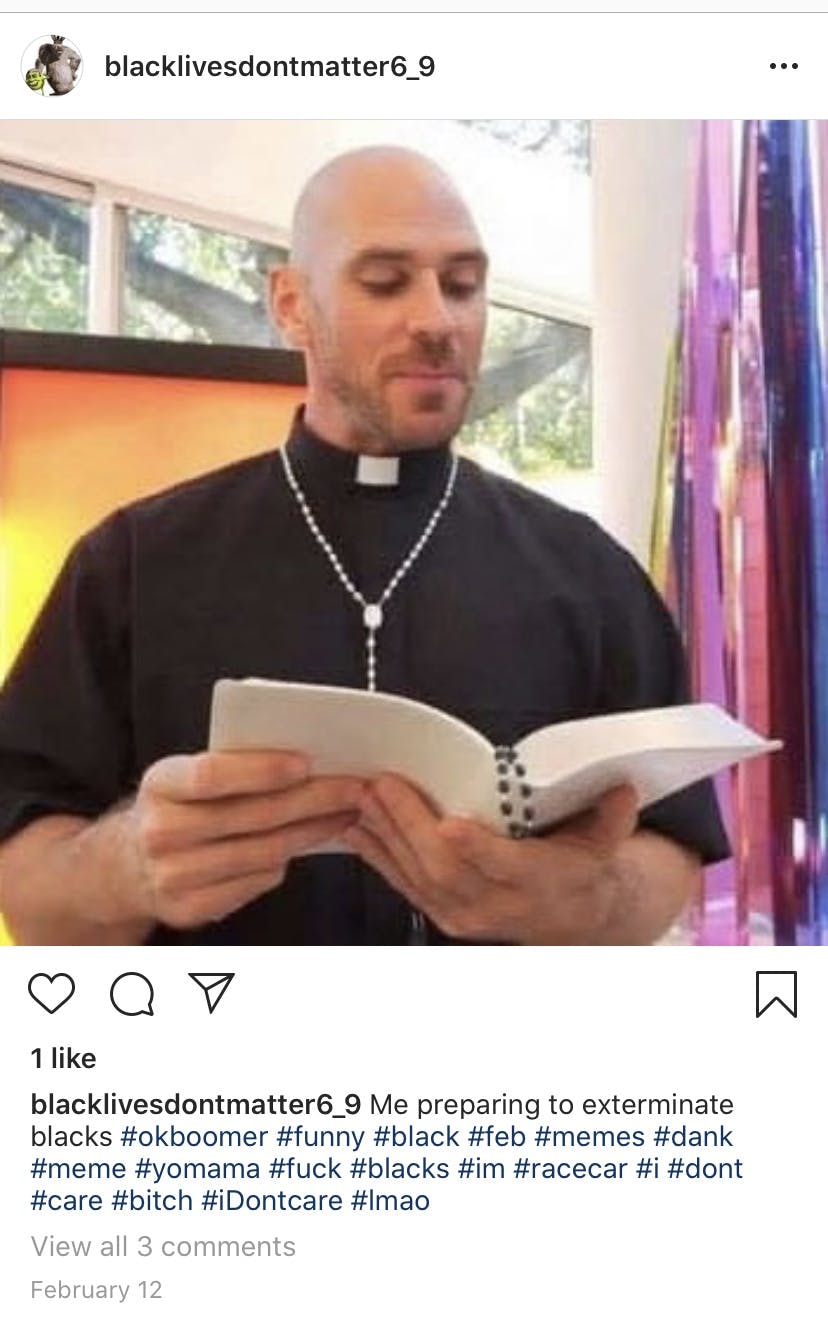

The racist accounts that remain on Instagram have similar characteristics: Most of them are inactive and appear to exist solely as an offensive username. They have between zero and three followers, and most aren’t following anyone. Many have numbers attached to their account name, like “blacklivesdontmatter6_9” or “blacklivesdontmatterkkk_666.”

The description of one account reads, “There was a reason y’all were slaves for so long.”

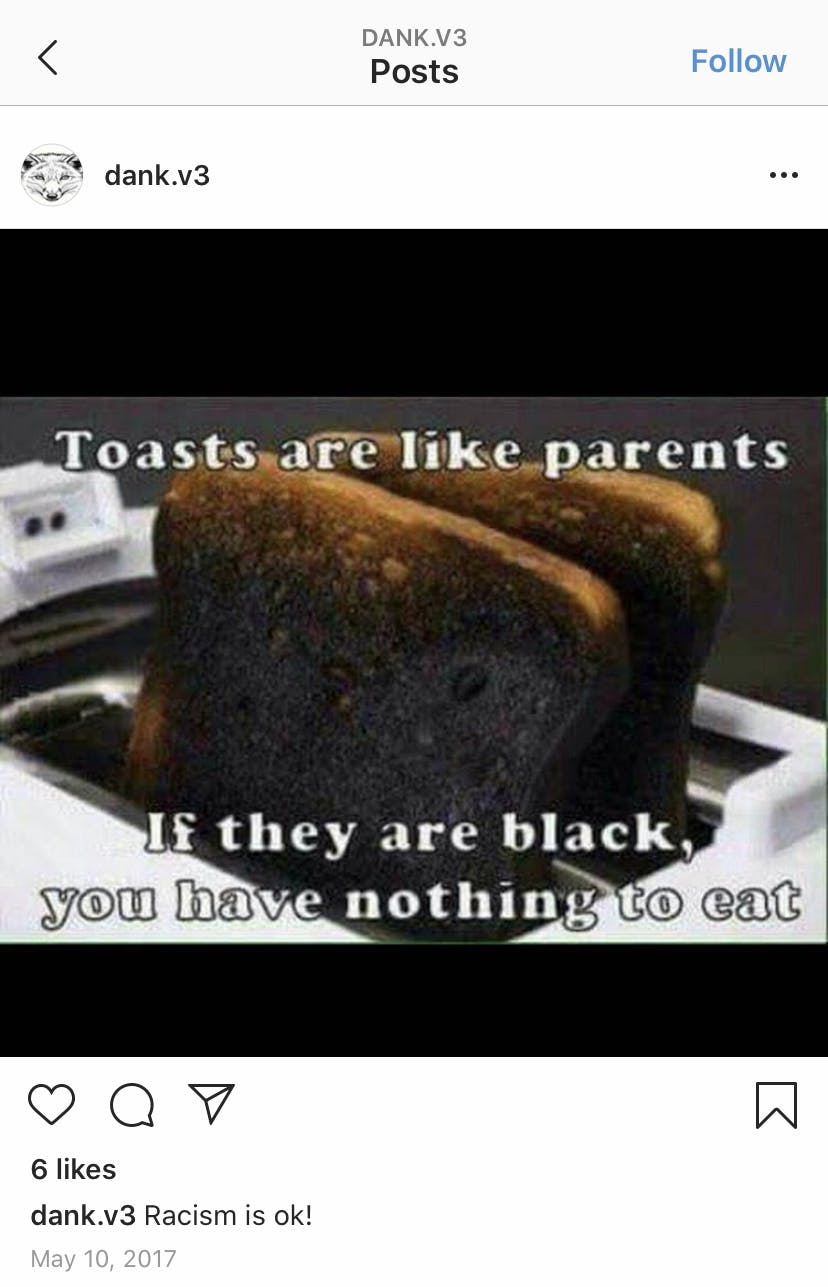

Another account that frequently uses anti-Black hashtags, @dank.v3, posted an image in 2017 that reads, “Toasts are like parents; If they are black, you have nothing to eat.” It’s a racist comment about the low wealth level of many Black communities that ignores the role of white supremacy in perpetuating income inequality in communities of color.

The caption of the post reads, “Racism is ok!”

Many of the active accounts have some other content that is transphobic, misogynistic, or anti-Semitic. The account @dank.v3 shared an image that shows the symbols for the female and male genders. Variations of those symbols are titled “mental disorder,” insinuating that being trans or nonbinary is a mental disorder. Posts from this account are accompanied by hashtags like “blacklivesdontmatter123” and “#hitler #is #hero.”

Many of the accounts appeared in 2015, around the time President Donald Trump launched his presidential campaign and when advocates say the “Trump effect” began, which included an increase in targeted attacks on or harassment of Black, Hispanic, and other marginalized communities.

Last month, another account with 25 followers posted an image of a priest reading a textbook with the caption, “Me preparing to exterminate blacks.”

Instagram, which is owned by Facebook, still has a lot of catching up to do in terms of restricting hate speech, experts say. “There are many examples of blatant hate speech, but also casual racism built into the environment,” Andre Oboler, CEO of the Online Hate Prevention Institute in Australia, told the Daily Dot.

Oboler pointed to the username @jews_live_in_ovens, which last posted four years ago but was still a visible account when the Daily Dot ran a search on Friday.

Oboler also pointed out a Facebook page with a meme showing a Monopoly board with “Black Monopoly” written on it. Instead of showing property listings, all but one of the blocks on the board show the “Go to jail” command. The other block shows the “In jail” section. The post has been up since 2017.

Inactive but present

Even if the accounts aren’t actively posting, their presence alone is cause for concern. Experts say the existence of radical social media often plays a role in hate crimes, whether the crime is carried out by a person who posts hate messages or a person who sees them.

Henry Fernandez, a senior fellow at the Center for American Progress, said that accounts without followers could still be a recipe for disaster.

“The fact that I cannot see the speech, does not alone make it [less] dangerous. It may not hurt my feelings, but it will kill me,” he told the Daily Dot. “I may not see, but hate groups are still able to use that and to suck in the young white male who has been manipulated.”

The accounts’ inactivity, then, shouldn’t be an excuse for social media platforms to ignore them. And it’s not enough to rely on algorithms to flag hate content, Oboler argued.

“It isn’t a matter of just blocking all references to Hitler or the KKK, as some of the time a mention of Hitler will be part of a message providing education on World War 2 or the Holocaust and a message about the KKK may be news on recent racist activities, or informative about the nature of the organisation,” he said. “We need to have nuance, stopping the spread of harmful messages, not [banning] particular words indiscriminately.”

The trail of hate: from online to IRL

The thing about hate on the internet is its permeability. The genesis of this kind of hate, as portrayed in radical Instagram accounts, isn’t an Instagram username or an email address. Hate groups have been there all along. But they weren’t always so easily accessible.

“Before the internet, hate groups had become very small pockets of disconnected individuals and what the internet allows is for you to find like-minded people,” Fernandez said, adding that it helps people operate anonymously and over vast geographical locations without having to be physically present in those communities.

This, Fernandez adds, makes it easy for hate-group members or white nationalists to anonymously lurk and hold specific ideologies without being ostracized from society.

Meanwhile, the internet allows them to form a community with like-minded individuals online, as in the case of Dylann Roof. A GQ investigation into “the making of Dylann Roof” quotes Southern Poverty Law Center’s Intelligence Project director Heidi Beirich as saying Roof did not, at first glance, have many of the hallmarks of white supremacist killers.

“[They] spend a long time indoctrinating in the ideas,” Beirich told GQ in 2017. “They stew in it. They are members of groups. They talk to people. They go to rallies. Roof doesn’t have any of this.”

But Roof had the internet.

Matthew Williams, a Cardiff University professor who has studied hate speech for 20 years, agrees that the mere existence of racist social media accounts is a key part of the bigger problem of hate crimes.

“Online hate victimization is part of a wider process of harm that can begin on social media and then migrate to the physical world,” Williams told the Daily Dot. “Those who routinely work with hate offenders agree that although not all people who are exposed to hate material go on to commit hate crimes on the streets, all hate crime criminals are likely to have been exposed to hate material at some stage.”

The way forward

Williams is working with the U.S. Department of Justice on how to better measure hate speech.

“Social media is now part of the formula of hate crime,” he wrote last year. “A hate crime is a process, not a discrete act, with victimization ranging from hate speech through to violent attacks.”

Instagram’s Community Guidelines, meanwhile, prohibit “credible threats or hate speech” with some consideration for content that’s used to raise awareness about hate speech. Still, it’s not clear why radical accounts with racist usernames keep cropping up, especially when their content is obviously not intended to raise awareness.

In an email statement to the Daily Dot, Facebook, Instagram’s parent company, reiterated its mission to create a “safe environment for people to express themselves.”

“Accounts like these create an environment of intimidation and exclusion and in some cases, promote real-world violence, and we do not allow them on Instagram,” a spokesperson said.

Instagram says it has developed technology that helps identify troublesome pages and even flags hateful comments as they’re about to be posted, giving users a chance to review or edit comments that resemble other hateful language. When technology falls short, it’s up to community members to monitor offensive content.

The way forward, Fernandez argued, is for Instagram to employ human content reviewers. But that raises another set of issues. One investigation revealed dire conditions for Facebook content moderators, many of whom reported receiving insufficient benefits and suffering from mental health issues related to their jobs.

Still, Fernandez said, Instagram shouldn’t be able to “escape responsibility” for hate-filled accounts, dormant or otherwise.

“In the same way that we would never say that it would be acceptable to have child porn shared in private groups on Instagram, we can’t allow dangerous speech that might lead to people being killed to be shared in private groups just because they’re private,” Fernandez said.
