YouTube has long been criticized as a gateway to far-right and extremist content, and now TikTokers are trying to highlight just how the video-streaming site exposed them to that kind of content at a young age.

Teens—particularly boys interested in gaming—say YouTube facilitated an “alt-right pipeline.”

Activist Wagatwe Wanjuki first noticed the alt-right pipeline discourse on TikTok and documented it in a Twitter thread.

Wanjuki noted that “it’s fascinating to watch various young people explicitly talk about YouTube leading them down the pipeline.”

The pipeline refers to how YouTube suggests the next video for users to watch. The platform picks that video with an algorithm that guesses what type of content the user would like to continue watching.

This feature isn’t insignificant. CNET reports that more than 70% of the time people spend watching YouTube, the second most-visited website in the world, goes to videos recommended by the algorithm.

Major internet companies have already been focusing on YouTube’s alt-right pipeline, and now everyday users on social media are doing the same.

Mozilla, the company behind the Firefox web browser, is calling on YouTube to make its algorithm more transparent, and TikTok users, particularly those who were exposed to harmful content, want answers.

What is the YouTube alt-right pipeline?

The alt-right pipeline pushes unsuspecting viewers, typically gamers, toward pernicious content.

The harmful content often consists of anti-women, racist, or homophobic jokes from conservative creators. Many on TikTok remember talk show host Ben Shapiro appearing in their suggested videos.

The pipeline works like this: If a user watches a compilation of wins in “Call of Duty” or another video game, YouTube might suggest a video about a “feminazi getting owned” once it ends. “Feminazi” is a derogatory term the far right has adopted for radical feminists.

Once the user watches one video like this, a feedback loop begins, and YouTube suggests more and more alt-right videos.

Other videos common on the pipeline include “cringe” social justice warrior (SJW) videos. “SJW” is another piece of far-right lingo, used for someone who supposedly doesn’t care about the social causes they fight for, only about the recognition and praise that come with it.

And these kinds of videos are all over YouTube. This video is a compilation of “triggered SJWs.”

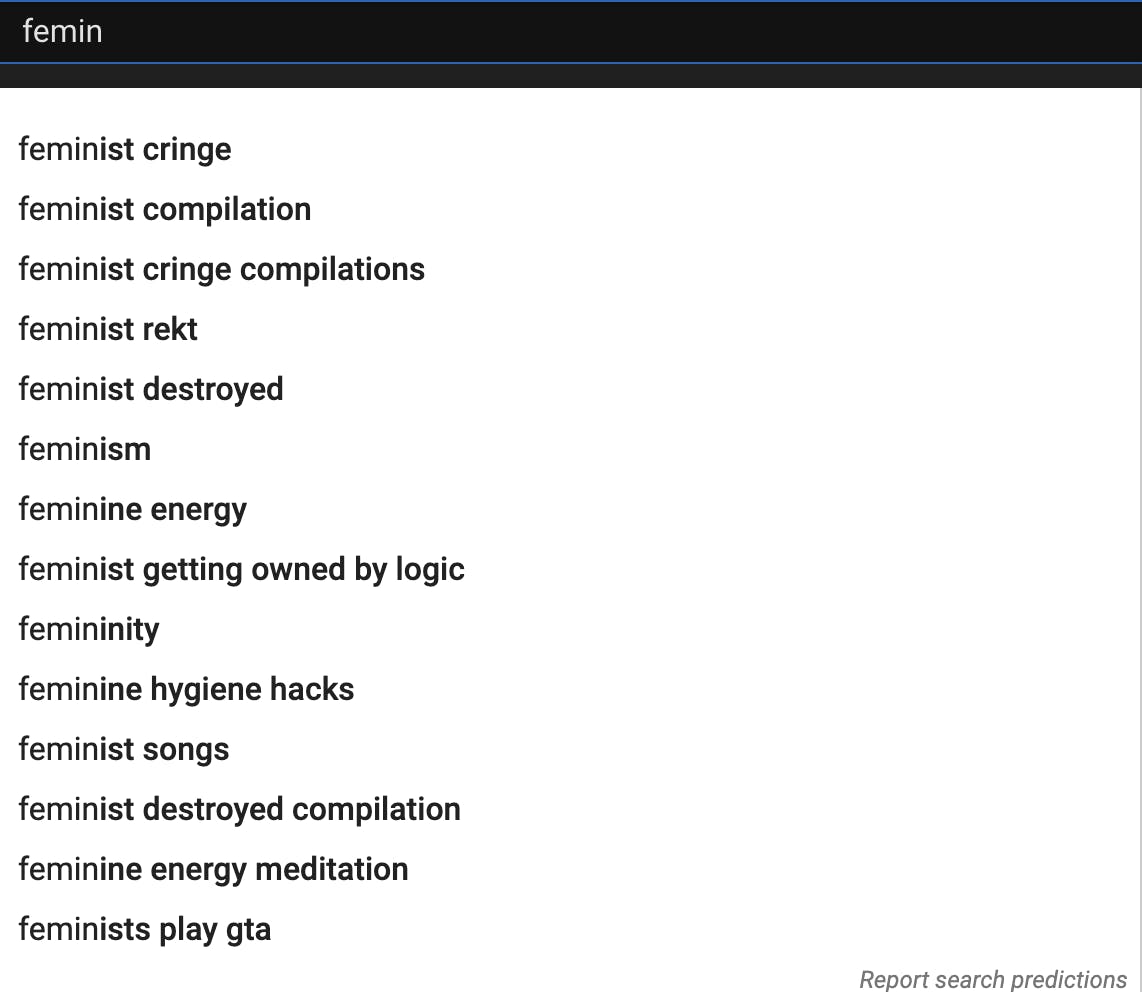

After searching for one SJW video, these are the search results for “femin.” A single view of an SJW clip surfaces more offensive content like “feminist cringe” or “feminist rekt.”

Is YouTube doing anything to prevent alt-right content?

YouTube began banning extremist content in 2019, but by then it was too late for many impressionable teens.

In its announcement, YouTube said it would begin prohibiting content that claims “a group is superior in order to justify discrimination, segregation or exclusion based on qualities like age, gender, race, caste, religion, sexual orientation or veteran status.”

Mozilla says the policy was only a cop-out, since the content still persists.

“One of YouTube’s most consistent responses is to say that they are making progress on this and have reduced harmful recommendations by 70%,” a Mozilla spokesperson told the Daily Dot. “But there is no way to verify those claims or understand where YouTube still has work to do.”

So Mozilla stepped up to monitor YouTube itself. Last year, Mozilla created a browser extension called Regrets Reporter that collects the videos YouTube suggests to participating users.

Regrets Reporter launched after Mozilla had spent more than a year and a half pressuring YouTube to explain its algorithm to users. As the election approached and the pandemic persisted, Mozilla decided to work with users to help stop YouTube from suggesting content such as election myths or “plandemic” videos.

The goal is to check how well YouTube is actually doing at keeping harmful content from sneaking up on unsuspecting viewers. Mozilla says the findings will be published in late spring 2021.

“By sharing your experiences, you can help us answer questions like: what kinds of recommended videos do users regret watching? Are there usage patterns that lead to more regrettable content being recommended? What does a YouTube rabbit hole look like, and at what point does it become something you wish you never clicked on?” the Regrets Reporter website says.

YouTube did not respond to several requests for comment from the Daily Dot.

The discourse joins TikTok

But it’s not just Mozilla that has raised red flags about YouTube’s alt-right pipeline. A growing number of users on TikTok have begun sharing their experiences.

Users are posting large numbers of videos under the hashtag “alt-right pipeline,” sharing personal stories about YouTube’s algorithm.

One TikTok details how YouTube showed anti-women content to teens in 2016.

This TikTok shows how easy it was for conservative YouTube creators like Shapiro to grab the attention of young users.

Another video explains how the user saw “feminist getting destroyed” videos following gaming compilations.

Meanwhile, this post points out how many young boys “go through an alt-right phase or even just an apolitical misogynist phase.”

The TikTok is captioned “check up on your little brothers.”

Wanjuki noted the commonalities in her thread.

Is TikTok any different?

Like most social media platforms, TikTok has its own problem with keeping harmful content off its site.

For example, on TikTok videos that bring the alt-right pipeline to light, some commenters side with the pipeline. A screenshot from Wanjuki’s thread shows one comment that states “glad I stayed in it.”

There are thousands of videos under the hashtags “feminazi” and “SJWcringe.”

This TikTok is just a gaming stream with an anti-feminist and anti-SJW rant in the background.

Despite alt-right content thriving on TikTok, Mozilla has said in the past that TikTok’s algorithm is more transparent than YouTube’s.

“As platforms like Facebook and YouTube struggle to explain how their News Feed and recommendation algorithms work, TikTok seems to be moving towards greater transparency by saying it will open up its platform to researchers,” Mozilla wrote.

But until researchers can fully understand the algorithms at both YouTube and TikTok, harmful content can still be funneled toward unsuspecting users.

“Until researchers have access to comprehensive data about the algorithms used by TikTok and YouTube, researchers cannot identify patterns of harm and abuse, fueling public distrust,” Ashley Boyd, Mozilla’s vice president of advocacy, told the Daily Dot.

This post has been updated.