For anyone turning the darkest thought of all over and over in their mind, there are only so many places to turn. Tell your friends, family, or a suicide hotline that you’re thinking about killing yourself, and odds are they’ll try to stop you in your tracks. But confide in an anonymous app and you’re whisked into a virtual game of Russian Roulette. There may be a bullet in the chamber, but there are many, many chambers. And so much spinning through them.

The anarchic worlds of pseudo-anonymous social networks like Whisper and Secret are equal parts earnest, unhelpful, cruel, and silent. Randomness is the only sure bet. Unfettered by identity, delicate truths are passed among the hands of people you will never meet.

For deeper insight into how these communities might affect their most vulnerable users, I spoke with Dr. Aaron Malark, a licensed clinical psychologist with a private practice in New York City. I know Malark personally and he has extensive experience in crisis intervention and at-risk behavior. (It’s worth disclosing that I have a vested interest in how the tech industry addresses mental illness. I worked for a number of years in research and treatment clinics in hospitals across Manhattan, specializing in mood disorders and addiction.)

The way these apps might steer their users’ thoughts and behaviors isn’t simple.

“Online support systems could be helpful or harmful; it really depends on the nature of the interactions, what is being communicated, and what follow-up there exists,” Malark explained. “Because of this, organizations need to be careful about what is being promised and what they can deliver.”

So you want to kill yourself

If you post that you’d like to die, a lot of things can happen. I’ve tried it on both Whisper and Secret, the main companies racing to monetize their chaotic digital confessional booths.

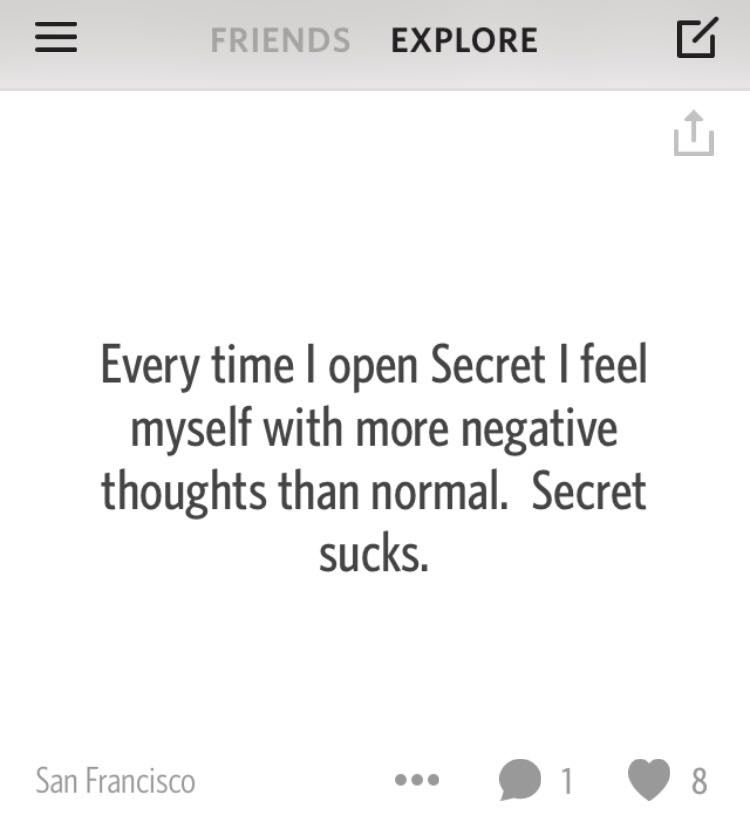

On Secret about a month ago, it went about like I’d expect. “Say something kind,” Secret’s text entry box pleads. After typing out my post, the app offered a choice of background colors and provided the option for a Flickr image search for a background image (I went with black). The responses were few: One egged me on, another hinted that the author knew my identity. I wasn’t at risk at the time, but it certainly felt lonely. I definitely felt worse.

“While rejection can be difficult for anyone, it can be much more distressing for someone who has a history of invalidating or harmful relationships,” Malark explained. “Rejection can be the first step in a pathway that leads to really overwhelming feelings… So, if someone uses a harmful behavior like cutting to cope with distress, that hurtful rejection could exacerbate the behavior, and the pattern gets reinforced rather than addressed.”

When expressing suicidal thoughts on Secret, no intervention dialogue is triggered at all. You’re at the mercy of the community, which usually ranges from warm to gossipy, hostile, and occasionally sociopathic.

The response was the opposite of the ideal model Malark describes. “The important thing is that consistent, available support is offered. What matters is that someone is really there and available when someone reaches out; with a totally voluntary group where no one has made any commitment and there’s no oversight, there’s no guarantee of that.”

Whisper was a different story. I logged on to publish a sample cry for help: “I want to kill myself.” I knew that Whisper suggests the images that appear behind its simple, vertical confessions, based on the text entered. What I wasn’t ready for: Whisper doesn’t screen the images it populates posts with—and they’re culled from the app’s own database.

Apparently, the word “kill” in my sentence pulled in multiple suggestions of handguns. Using the word “suicide” prompted a suggestion of a man in front of an oncoming train, and that was one of the tamer ones. The word “cutting” yielded a wealth of triggering self-injury imagery, grim words dotted in blood on a forearm. “Addicted,” a bottle of Adderall, someone’s actual script, fully legible. “Bulimia” was full of “thinspo,” pictures swapped within eating disorder communities to keep sufferers devoted to suffering.

It’s common practice within the mental health community to ask a suicidal person if they have a plan—how they might kill themselves, what they would use, the gritty details. A suicidal person with specifics is very acutely at risk. In this light, Whisper seemed to offer up suggestions. Gun? Thought about jumping in front of a train?

For self-destructive behaviors like cutting, drug abuse, and disordered eating, there is also a perilously short distance between suggestion and behavior. Alarmingly, these images seem to bridge that gap.

In the interest of my own waning wellness, I stopped before looking into what words like “rape” or “molested” might dredge up.

No one at the wheel

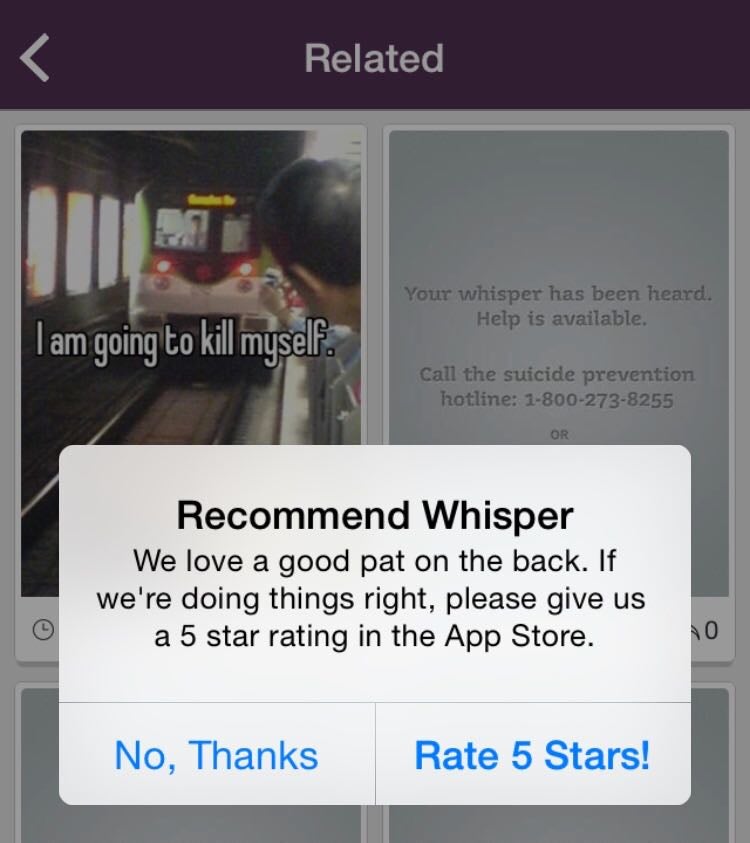

I circled back and pushed my test post live. “I want to kill myself.” I was instantly met with a pop-up—I assumed this was the suicide referral prompt Whisper often cites, but instead it was a poorly timed prompt inviting me to give Whisper five stars in the App Store.

Where was my intervention? After wondering for a moment, I realized my post itself had transformed into a different image, one suggesting I call a number or visit Whisper’s sister site, your-voice.org. I tapped the phone number hoping it’d dial automatically and then the URL, but it was just an image. I couldn’t interact with it. To call the suicide hotline, I’d have to take the extra step of opening my phone’s dialer and swapping between the screens.

Given that most phone numbers are hyperlinks straight to a phone call, that’s a lot to ask. For someone in crisis—someone who just admitted they are thinking about hurting themselves—it’s way, way too much to ask. It’s a totally empty gesture that Whisper can point to when it brags about referring 40,000 people to suicide hotlines.

These apps exist to make money, not save lives

It’s important to remember that Whisper is not a nonprofit, nor as altruistic as it’d like to appear. As of March 2014—months prior to its ongoing privacy scandal—the company was valued at a nebulous $200 million. Whisper is a startup like any other, intent on growing its user base, making them more engaged, and eventually making money. “We service content to a lot of people, and generally that turns out to be a great ad business,” CEO and founder Michael Heyward told Fast Company in July. “There’s no business on the Internet that has achieved Web scale—50 or 100 million users—and had a problem making money.”

Malark draws a distinction between a form of productive sharing or “processing” and one that’s more akin to ruminating. Processing might happen in therapy or in an AA meeting, or even with groups of friends. “It is essentially the process of engaging with thoughts, feelings, and actions… and, in the process, develop new ways of thinking, feeling, and acting.” It’s a dynamic process, one that requires a commitment from and interplay with the listener.

What’s happening on apps like Whisper is much closer to ruminating. Ruminating is more repetitive, almost like a kind of mental rehearsal—and counterproductive. Some very common, widely supported forms of therapy like CBT (cognitive behavioral therapy) succeed by targeting and disrupting similar patterns of thinking and changing behavior as a result.

“If someone just tells the same story again and again, to anonymous audiences that are constantly changing or not really engaged beyond being a sympathetic ear, it could just reinforce the same experiences and patterns, making them that much more entrenched,” Malark elaborated. “So, in a paradoxical way, talking about something in a brief way without real follow-through or depth could exacerbate the problem.”

We can do better

Let me be clear: Whisper is not the most negligent online community harboring depressed and suicidal people—not by a long shot. But unlike many other apps and social platforms, Whisper often speaks to its role in serving the world’s “excess inventory of loneliness.” Whisper claims to be so dedicated to the well-being of its depressed and suicidal users that it founded an entire nonprofit, your-voice.org, to highlight its mission (that site’s problems are a story for another time). If your company is an anonymous social platform, it’s negligent to not be concerned about a preventable tragedy that claims almost 40,000 lives a year in the U.S. alone. (Eating disorders and drug overdoses claim many others.)

Whisper intends to make that money from the content users engage with on its platform, from suicide threats and confessions of sexual abuse as well as the lighter stuff. Some of Whisper’s problems are likely consequences of bad design, but the most damaging ones, the ones that actively put the users it wants to help at risk, are a consequence of willful ignorance, plain and simple. It could and should be better. I am calling for it to be better, less self-interested, more thoughtful, more empathic: all things Whisper would encourage within its own community. Simply, tech can do better.

Again, Whisper is not the only anonymous app self-satisfied with its efforts to serve the sad and troubled. It’s just the one most delusional about its role in the effort to save young people from taking their own lives. And the first one to ask for a “pat on the back” before it cashes in.

“This is not to say that more open discussion doesn’t help,” Malark explained. “Mental health is still stigmatized and silenced. People talking more openly is a good thing.” The latter bit is Whisper’s too-simple ethos. It just needs to rethink things a bit and follow through.

“The important thing is to be there for people who need help and connect them to resources that are equipped to help,” Malark noted. “Organizations should also be committed not to take on more than they are prepared to provide.”

Correction: A previous version of this story erroneously stated Whisper’s valuation at $60 million. In fact, that figure is the amount of money Whisper had raised across three rounds of funding.

Illustration by Jason Reed