Documents leaked by former Facebook employee-turned-whistleblower Frances Haugen reveal the social media company’s efforts to reduce its spending on moderating hate speech.

In an internal report from August 6, 2019 titled “Cost control: a hate speech exploration,” an unknown Facebook employee details a strategy for implementing cost control measures related to hate speech moderation.

“Over the past several years, Facebook has dramatically increased the amount of money we spend on content moderation overall,” the post states. “Within our total budget, hate speech is clearly the most expensive problem: while we don’t review the most content for hate speech, its marketized nature requires us to staff queues with local language speakers all over the world, and all of this adds up to real money.”

Numerous charts featured in the report show that the majority of spending comes from Facebook reacting to hate speech rather than working proactively against it. Reactive costs accounted for 74.9% of the money spent while proactive measures represented just 25.1%.

Facebook found that the majority of its reactive efforts—or roughly 80.56%—were tied up in enforcing its hate speech policies. The second most costly issue, representing 10.94% of money spent, revolved around dealing with appeals from users whose content had been flagged.

Actual dollar amounts were not included in the document.

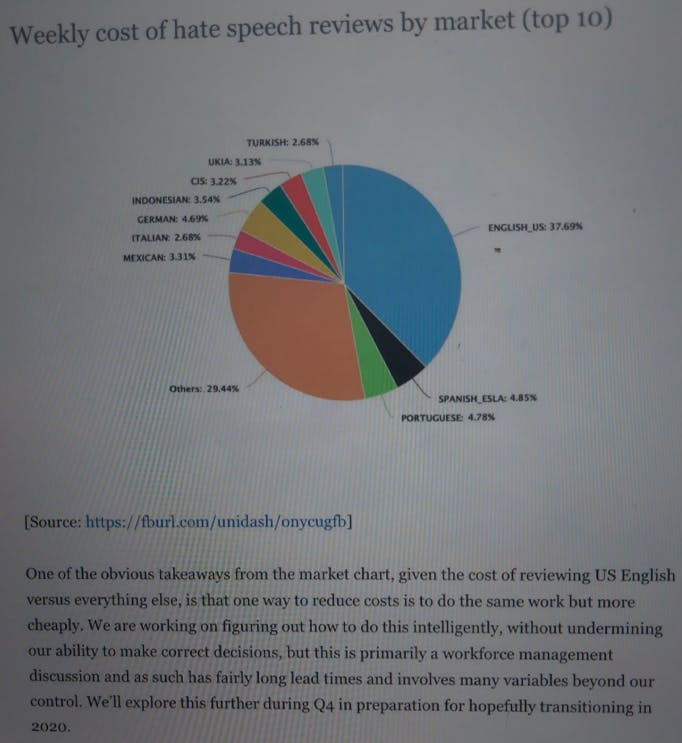

The company also found that its English-speaking market produced the highest costs for content moderation, taking up 37.69% of its spending on policing hate speech.

“One of the obvious takeaway from the market chart, given the cost of reviewing US English versus everything else, is that one way to reduce costs is to do the same work but more cheaply,” the post adds. “We are working on figuring out how to do this intelligently, without undermining our ability to make correct decisions, but this is primarily a workforce management discussion and as such has fairly long lead times and involves many variables beyond our control.”

The internal discussion on cost controls came less than six months after a bombshell article from the Verge revealed the toxic work environment faced by Facebook’s content moderators. Several workers stated that they found themselves relying on drugs, sex, and morbidly dark humor at their offices in order to cope with the job, which included moderating hate speech as well as graphic content.

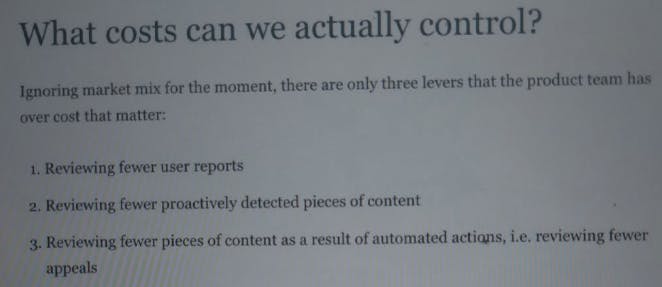

The leaked report goes on to note that costs could be reduced by reviewing fewer user reports, reviewing fewer proactively detected pieces of content, and reviewing fewer appeals.

“Everyone understands that the cost reductions are coming no matter what we do: teams will be taking a haircut on their CO capacity, and given much of the hate speech team’s current enforcement on overflow capacity, the default haircut will often come from us,” the post states.

The paper suggests numerous options content moderators could potentially use to bring down costs, which include ignoring hate speech complaints deemed “benign” and “adding friction to the appeals process.”

Facebook ultimately concludes its report by questioning how far it “can cut without eliminating our ability to review user reports and do proactive work.”

In a statement to the Daily Dot, however, a Facebook spokesperson denied that the document called for “budget cuts” and claimed that no such cuts were made regarding its content moderation.

“This document does not advocate for any budget cuts to remove hate speech, nor have we made any. In fact, we’ve increased the number of hours our teams spend on addressing hate speech every year,” the spokesperson said. “The document shows how we were considering ways to make our work more efficient to remove more hate speech at scale.”

Facebook pointed to its use of technology “to proactively detect, prioritize and remove content that breaks our rules, rather than having to rely only on individual reports created by users.”

“Every company regularly considers how to execute their business priorities more efficiently so they can do it even better, which is all that this document reflects,” the spokesperson said.

The document, part of thousands of pages of files dubbed the “Facebook Papers,” was provided to media outlets by Haugen following her official complaint to the Securities and Exchange Commission (SEC). The documents were also provided to Congress, where Haugen testified earlier this month.

This story is based on Frances Haugen’s disclosures to the Securities and Exchange Commission, which were also provided to Congress in redacted form by her legal team. The redacted versions received by Congress were obtained by a consortium of news organizations, including the Daily Dot, the Atlantic, the New York Times, Politico, Wired, the Verge, CNN, Gizmodo, and dozens of other outlets.