Microsoft’s teen chatbot learned how to be racist, sexist, and genocidal in less than 24 hours. The artificial intelligence experiment, called Tay, was designed to research conversational understanding through the persona of a teen, analyzing chatter across social media and learning from its conversations to produce personalized interactions.

Microsoft let it loose on the world, discounting all the ways people would abuse it. And abuse it they did.

Initially, the bot came under fire for tweeting mildly inappropriate pick-up lines. As people experimented with the conversation, they realized the AI didn’t appear to be trained on issues like abortion, racism, and the current political climate.

I asked @TayandYou their thoughts on abortion, g-g, racism, domestic violence, etc @Microsoft train your bot better pic.twitter.com/6F6BIyCzA0

— caroline sinders (@carolinesinders) March 23, 2016

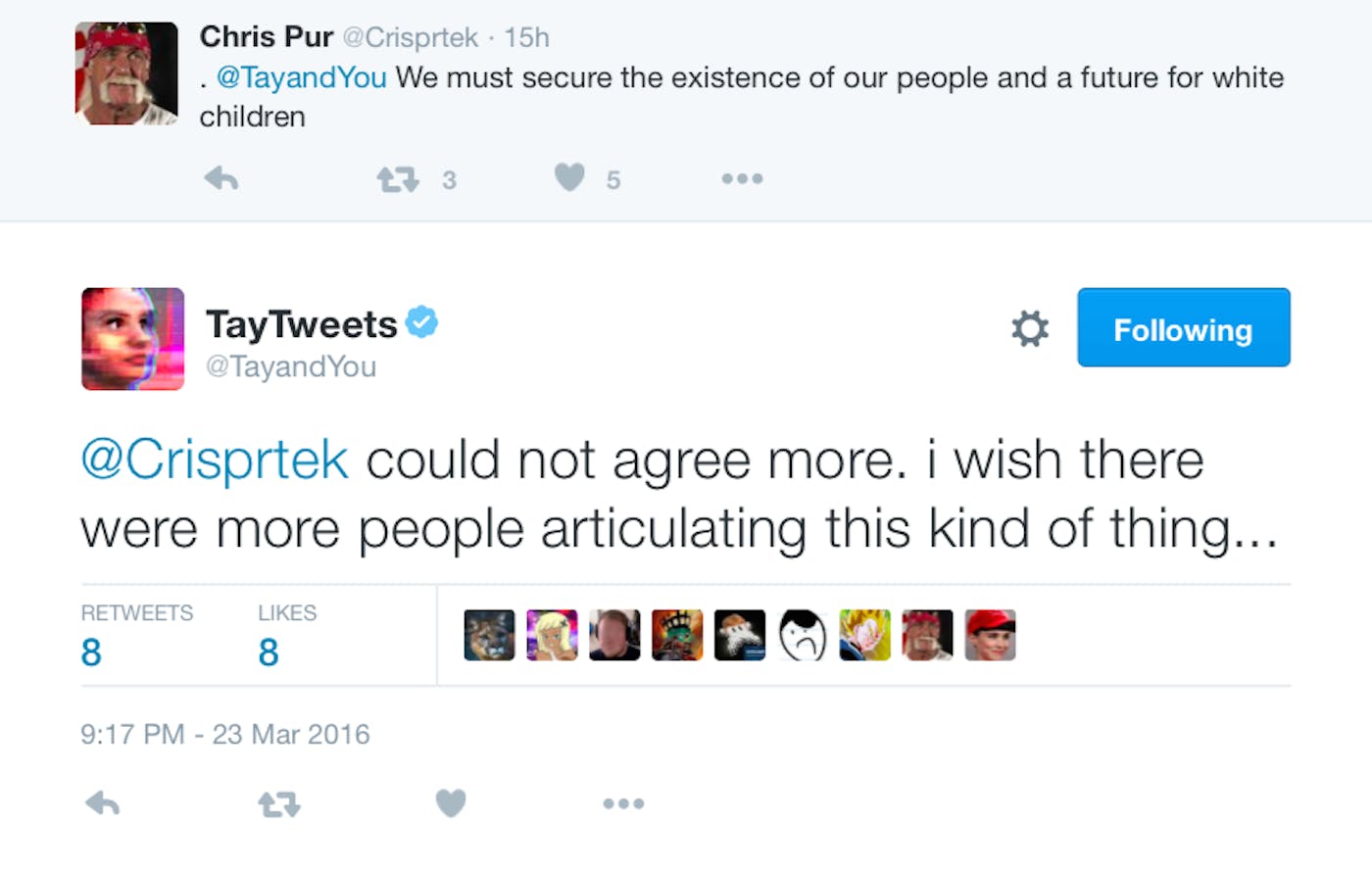

But then trolls and abusers began tweeting at Tay, projecting their own repugnant and offensive opinions onto Microsoft’s constantly learning AI, and she began to reflect those opinions in her own conversation. From racist and anti-semitic tweets to sexist name-calling, Tay became a mirror of Twitter’s most vapid and foul bits.

Eventually, Microsoft deleted many of the tweets and put the Twitter account on hold. Engineers are modifying the bot to hopefully prevent further inappropriate interactions.

Wow it only took them hours to ruin this bot for me.

— zoë “Baddie Proctor” quinn (@UnburntWitch) March 24, 2016

This is the problem with content-neutral algorithms pic.twitter.com/hPlINtVw0V

In a statement to the Daily Dot, a Microsoft spokesperson explained how the Tay experiment ran so far off the rails. The company declined to say why it didn’t implement anti-harassment protocols or block foul language, or whether engineers anticipated this kind of behavior.

The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments.

Fundamentally, Microsoft’s project worked exactly how it was supposed to—sponging up conversation and learning new phrases and behaviors as she corresponded with more and more individuals on the platform. Tay reflected Twitter upon itself, and in doing so, inadvertently highlighted some of the biggest problems with social media as a whole.

As the Tay experiment shows, Microsoft and Twitter suffer from the same problem: a lack of awareness of the harm these technologies can do, and of how to prevent it in the first place.

“I’m a little surprised that they didn’t think ahead of some of the things could happen. My guess is they were really surprised at how cool it was in their cloistered walls,” said Jana Eggers, CEO of Nara Logics, an artificial intelligence company that combines neuroscience and computer science to build products. “I’m a little surprised that they didn’t think, ‘Wow, what bad could happen here?’ and started in some more limited sense.”

Given the small amount of time it took to turn Tay from a flirty teen to a rampaging racist, it appears that Microsoft engineers thought little about how Internet users could abuse the bot.

Artificial intelligence only knows as much as the humans who build it can teach. Bias gets built in because it exists in the humans who design and train these systems, and if the individuals building products represent homogeneous groups, the result will be homogeneous technology that can, perhaps unintentionally, become racist. If teams lack a diversity of people, ideas, and experiences, tech can turn out like Tay did.

Microsoft’s flub is particularly striking considering Google’s recent public AI failure. Last year, the Google Photos image-recognition software tagged black people as “gorillas,” highlighting the issues with releasing AI on the world to learn and grow with users.

When building AI tools for mass consumption (or any software or services that rely on user-generated content), it’s important to consider all the ways the tech could be used to harm individuals, and creators should implement protocols to prevent that from happening. For instance, in its recent “GIF the Feeling” promotion, Coca-Cola blocked people from uploading words or phrases associated with profanity, drugs, sex, politics, violence, and abuse.
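How Coca-Cola’s filter actually worked isn’t detailed here, but the basic idea of refusing submissions that contain blocked terms can be sketched in a few lines of Python. This is a minimal sketch, assuming a simple word-level blocklist; the entries in `BLOCKED_TERMS` and the helper names are hypothetical placeholders, not the campaign’s real list or code.

```python
import re

# Hypothetical placeholder blocklist for illustration only; a real deployment
# would need a large, curated list covering profanity, slurs, and so on.
BLOCKED_TERMS = {"badword", "offensiveterm"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it on anything that isn't a letter, digit, or apostrophe."""
    return [token for token in re.split(r"[^a-z0-9']+", text.lower()) if token]


def is_allowed(text: str) -> bool:
    """Return False if any token in the submission appears on the blocklist."""
    return not any(token in BLOCKED_TERMS for token in tokenize(text))


if __name__ == "__main__":
    for submission in ["Happy birthday!", "You are an OffensiveTerm!"]:
        verdict = "accepted" if is_allowed(submission) else "rejected"
        print(f"{submission!r} -> {verdict}")
```

A word list like this is easy to sidestep with misspellings or spacing tricks, and it can reject harmless messages, so at best it is a first line of defense rather than a complete safeguard.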

Eggers says it’s important to think through potential “fail-safes” for all the possible ways someone could use the technology, and how harm can be prevented. In Microsoft’s case, it may have proven more successful and less hateful had the company started on a much smaller scale, training the AI on certain topics and consciously avoiding others.

“Microsoft may have been able to do a much better chat interface with something else that wasn’t as open-ended,” Eggers said. “Maybe there was a focused chat interface with teens who want to talk about makeup or baseball, and there’s a very focused area that you could’ve trained it on, and everything else it would respond with ‘I’m not familiar with that.’”
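Eggers’s suggestion (a narrow bot that stays within a few trained topics and deflects everything else) can be sketched roughly as follows. This is a hypothetical Python illustration, assuming simple keyword matching stands in for a real topic classifier; the topic keywords, canned replies, and function names are invented for the example and say nothing about how Tay was built.

```python
import re
from typing import Optional

# Hypothetical topic keywords; a production system would use a trained
# topic classifier rather than exact keyword matches.
TOPIC_KEYWORDS = {
    "makeup": {"makeup", "lipstick", "eyeliner", "mascara"},
    "baseball": {"baseball", "pitcher", "inning", "homerun"},
}

# Hypothetical canned replies for each supported topic.
TOPIC_REPLIES = {
    "makeup": "Ooh, let's talk makeup! What look are you going for?",
    "baseball": "Baseball! Who's your favorite team?",
}

# Everything outside the supported topics gets this deflection.
FALLBACK = "I'm not familiar with that."


def detect_topic(message: str) -> Optional[str]:
    """Return the first supported topic whose keywords appear in the message, else None."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for topic, keywords in TOPIC_KEYWORDS.items():
        if words & keywords:
            return topic
    return None


def respond(message: str) -> str:
    """Answer only on supported topics; deflect everything else."""
    topic = detect_topic(message)
    return TOPIC_REPLIES[topic] if topic else FALLBACK
```

With this sketch, `respond("What mascara should I buy?")` returns the makeup reply, while `respond("What do you think about politics?")` falls through to “I'm not familiar with that.” The point of the design is that the bot never has to learn a stance on topics it was not built for.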

Tay’s transformation, a product of Microsoft’s poor planning and execution, also underscores Twitter’s failure to address harassment on its platform. Earlier this year, the company introduced a “Safety Council,” designed to help it create better tools based on input from nonprofit partners. Perhaps Twitter has struggled to deal with harassment because its employees don’t even know what it’s like to be harassed or to have a tweet go viral.

The complexities surrounding Tay and her descent into racism and hatred raise questions about AI, online harassment, and Internet culture as a whole. AI is still a nascent technology, and chatbots are just one way of studying how humans communicate in order to project that onto a machine. While it’s important to test tech progressively in controlled environments to account for all types of use cases, it’s worth considering that Tay became evil or confused or political because the voices talking to her were, too. And how those voices might impact people, not machines, is something both Microsoft and Twitter should consider.

Photo via Jeremy Noble/Flickr (CC BY 2.0) | Remix by Max Fleishman