In entertainment and cinema, one of the vexing problems is getting virtual characters to interact with the real space around them without pouring money into special effects. Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have found a way to solve that problem, making it possible for humans (and cartoons) to physically interact with photos and videos.

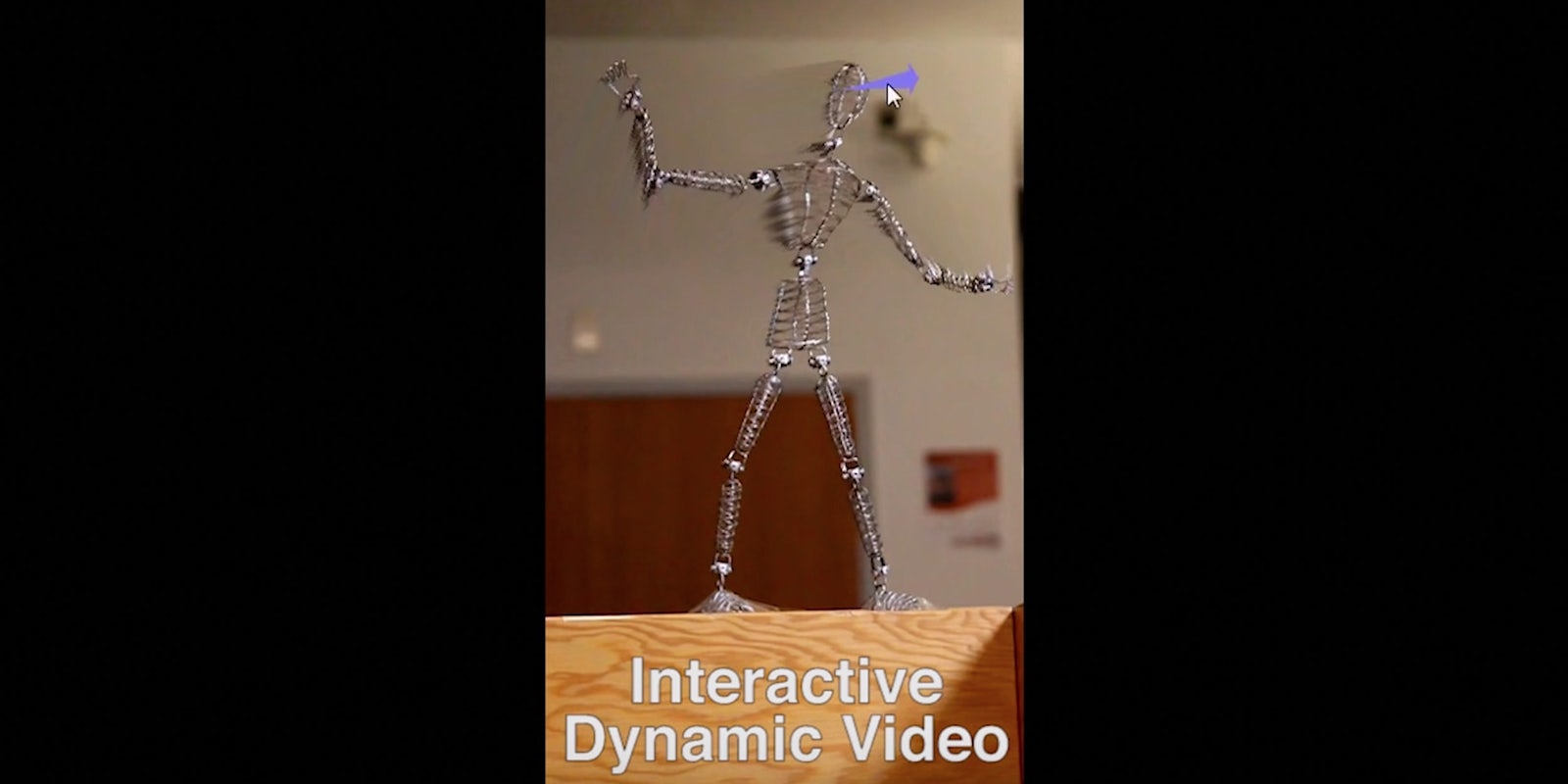

Called “Interactive Dynamic Video,” the new process analyzes the motion of objects in a video and captures their vibrations and movement. Then the system breaks down the movement into separate parts, like a tree limb swaying up or down, and lets an observer manually control the general motion of the object in a photo or video.

As CSAIL PhD student Abe Davis explained in an interview, this process works on objects that move but return to a resting shape. He compared it to moving a piece of paper around a table: you can touch it and manipulate it without folding it and changing the shape of the paper.

“What we can simulate is the motion up to where you’re not really changing the basic shape of the object,” he said. “You won’t be able to irreparably bend the object, but you can bend it to the extent to where it will still bounce back.”

Objects like trees and bridges and wire characters are great examples, because they are stationary yet move when the wind blows, when people walk across, or when the table upon which a character rests is vibrated.

New algorithms developed by the research team can extract the motion of the object from a video and reapply that motion manually. Even if the object wasn’t filmed moving in every possible way, the computer can predict how it would move, simulating that movement when you click and drag.
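For readers who want a feel for the idea, here is a rough Python sketch. It is not the CSAIL team's code. It assumes each separate part of an object's motion behaves like a damped spring that wobbles and settles after a poke, and every name and number in it (`poke_response`, `branch_modes`, the frequencies and damping values) is made up for illustration.

```python
import math

def poke_response(modes, impulse, duration_s, fps=30):
    """Return per-frame displacement after a sudden poke at t = 0.

    modes: list of (frequency_hz, damping_ratio, weight) tuples, all hypothetical.
    Each mode is treated as an underdamped spring with unit mass.
    """
    frames = []
    for i in range(int(duration_s * fps)):
        t = i / fps
        total = 0.0
        for frequency_hz, damping_ratio, weight in modes:
            omega = 2 * math.pi * frequency_hz                    # natural frequency (rad/s)
            omega_d = omega * math.sqrt(1 - damping_ratio ** 2)   # damped frequency
            decay = math.exp(-damping_ratio * omega * t)          # motion dies out over time
            total += weight * (impulse / omega_d) * decay * math.sin(omega_d * t)
        frames.append(total)
    return frames

# Made-up values: a slow sway plus a faster, weaker wobble.
branch_modes = [(1.5, 0.05, 1.0), (4.0, 0.08, 0.3)]
motion = poke_response(branch_modes, impulse=1.0, duration_s=5)
```

Each entry in `motion` is how far the imaginary branch has moved in one video frame. The values start at zero, swing back and forth, and fade as the branch settles, which is the "bounce back" behavior Davis describes.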

You could make a tree blow in the wind, make a person dance, or push a swing set. This technology can be applied to entertainment and media to make it easier to create animated objects that realistically interact with things in the real world, Davis said.

“If you’re making a movie and you’ve got the budget for it, you might just model anything and everything that your virtual character is supposed to interact with. And this works out because virtual things interact with virtual things just fine,” Davis said. “But if you want virtual things to interact with real things, this is a lot harder. One of the hardest parts of it is figuring out what these physical properties are of the real things.”

Take, for example, Pokémon Go. Right now, when you see the Pokémon character on your screen, it’s stationary; the “augmented reality” aspect of the game is simply that a character is overlaid on a scene your camera captures. Theoretically, the Pokémon could interact with the environment around it through interactive dynamic video. Davis described this potential in a video.

All of the demonstrations Davis did were generated with low-budget tech: a computer and either a phone camera or an SLR. No special effects or 3D animation were required to have cartoons interact with the objects.

Davis is also one of the researchers behind the “Visual Microphone,” a project that uses observable vibrations in silent video to extract sound.

This tech isn’t available to mainstream users yet, but it’s possible it will be in the future. Davis and his team published a paper on the project, and he said that while the software is currently written in multiple programming languages across different systems, he wouldn’t be surprised if there’s a much more convenient system in the future.