Nextdoor, a location-based social network designed to connect neighbors, discovered it had a problem. Among its ten million registered users and 100,000 active neighborhoods, there was an undercurrent of racial tension.

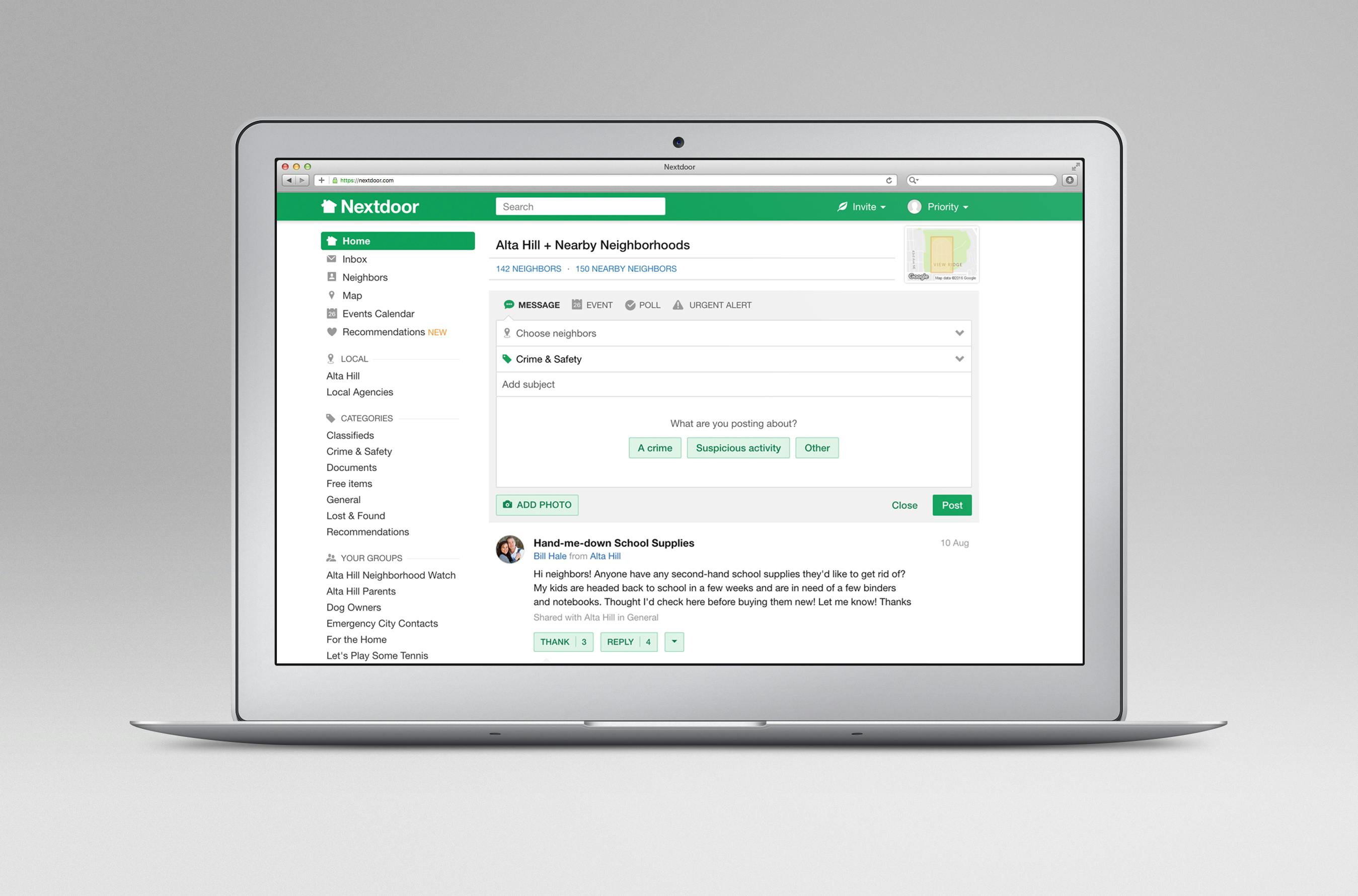

The problem persisted primarily in the crime and safety section, where users can report criminal activity and alert other users to everything from property damage to stolen goods. But some users, in reporting neighborhood happenings on Nextdoor, would highlight a person’s race to express concern and suspicion.

Earlier this year, the New York Times documented posts that highlighted what it looked like when racial profiling reared its head on Nextdoor in neighborhoods around Oakland, California.

One post caught by the publication suggested residents be on the lookout for “two young African-Americans, slim, baggy pants, early 20s.” Another warned of a “light-skinned black female” walking her dog while talking on her phone. “I don’t recognize her,” the post read. “Has anyone described any suspect of crime like her?”

The issue is anything but exclusive to Oakland, though organizations in the city like Neighbors for Racial Justice took a particularly active role in pushing Nextdoor to address the issue. Examples can be found in just about every community.

https://twitter.com/iammollymchugh/status/639128262925488128

@Nextdoor never fails to deliver on the old "I'm not racist, but…" chestnut pic.twitter.com/RODaUv504r

— The fourth worst Devin (@TexasDevin) August 20, 2015

My mom forwarded me this racist message she got from her neighbor on something called nextdoor dot com. pic.twitter.com/Aih3DxsiwT

— adult nepo baby (@mattcornell) April 29, 2015

https://twitter.com/UtopianParalax/status/654758989066993664

In a @Nextdoor thread about loud fireworks, this lady gets called out for being racist and then demands an apology pic.twitter.com/xwJrzf8xOq

— Eric Rosenberg (@DrJorts) February 9, 2016

Nextdoor acknowledged the issue and set out to fix it.

The company adopted a broad definition of racial profiling, and specified in its help center that the platform expressly prohibits posts that “assume someone is suspicious because of their race or ethnicity” and prohibits posts that “give descriptions of suspects that are so vague as to cast suspicion over an entire race or ethnicity.”

But setting rules does not necessarily mean users will abide by them, so Nextdoor had to develop a system that could enforce the policy.

After six months of extensive testing and input from advocacy groups in Oakland, the American Civil Liberties Union (ACLU), and the Department of Justice (DOJ), the company is rolling out tools that it believes will have a significant impact in curbing racial profiling.

The new tool adds hurdles to the posting process in the crime and safety section of Nextdoor, essentially forcing users to think twice before they post something potentially harmful or prejudiced.

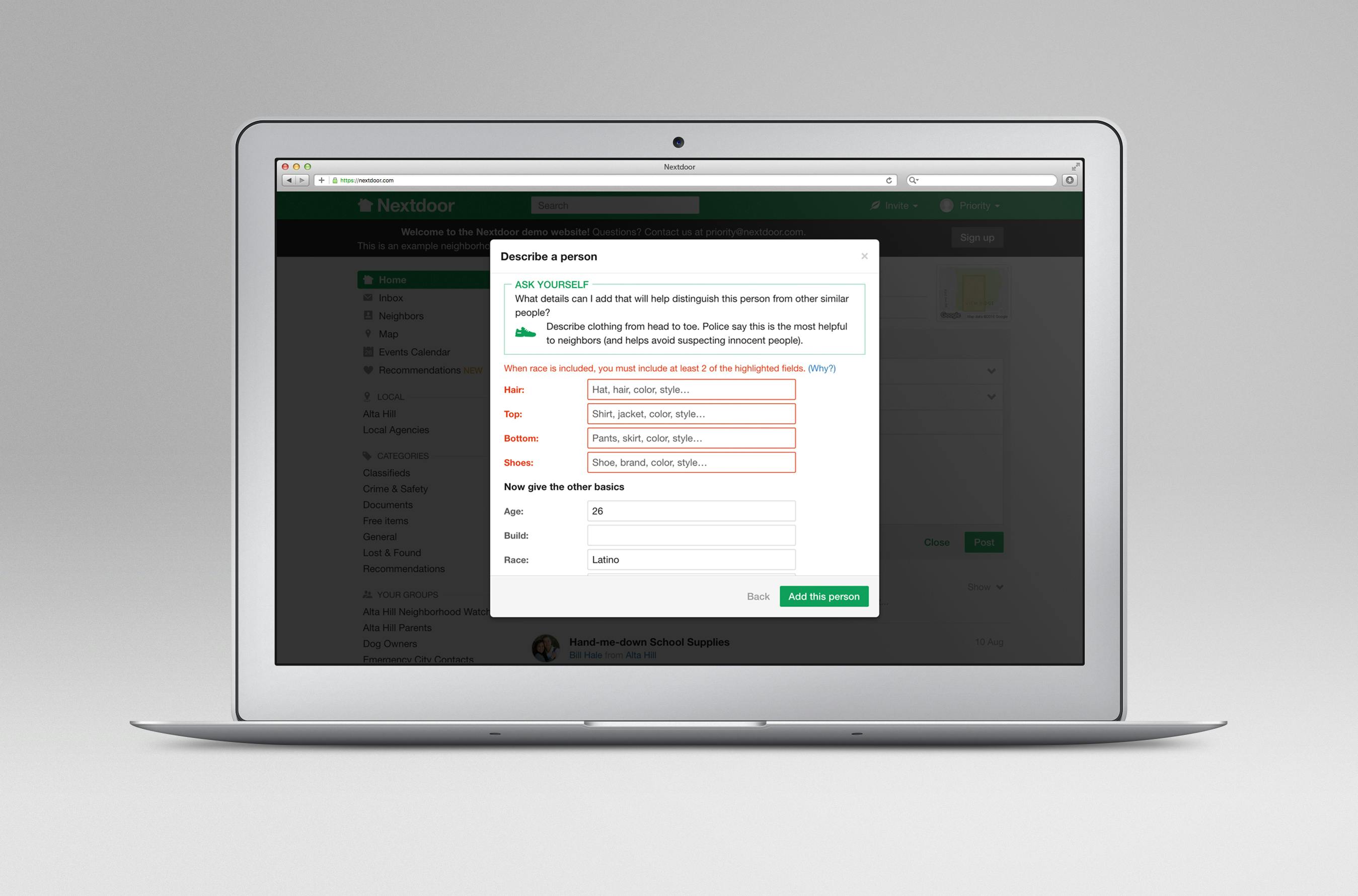

When a user attempts to report something on the platform, an algorithm automatically detects if any racially coded terminology is used. If it is, the platform will require the poster to provide two additional descriptors before allowing them to make the post.

Users whose posts are too short are also prompted to provide additional context, on the assumption that some posts are too brief to provide any useful information. The tool is designed to make the virtual neighborhood watches that form on Nextdoor both more mindful and more informative in what they share.
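Nextdoor has not published how its check works, but the flow described above can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the term list, the ten-word minimum, the `Draft` structure, and the wording of the prompts are all hypothetical. Only the requirement of two additional descriptors comes from the company's description.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Hypothetical term list; the article does not say which terms Nextdoor flags.
RACE_TERMS = {"black", "white", "asian", "hispanic", "latino", "latina",
              "african-american"}
MIN_WORDS = 10            # assumed cutoff for a post that is "too short"
REQUIRED_DESCRIPTORS = 2  # per the article: two additional descriptors


@dataclass
class Draft:
    """A crime and safety post before it is published (hypothetical shape)."""
    text: str
    descriptors: List[str] = field(default_factory=list)  # e.g. clothing, hair


def mentions_race(text: str) -> bool:
    """Return True if any word in the text matches the illustrative term list."""
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    return any(word in RACE_TERMS for word in words)


def review(draft: Draft) -> List[str]:
    """Return the prompts a poster must resolve; an empty list means post."""
    prompts = []
    if len(draft.text.split()) < MIN_WORDS:
        prompts.append("Please add more context about what happened.")
    filled = [d for d in draft.descriptors if d.strip()]
    if mentions_race(draft.text) and len(filled) < REQUIRED_DESCRIPTORS:
        missing = REQUIRED_DESCRIPTORS - len(filled)
        prompts.append(
            f"Please add {missing} more descriptor(s), such as clothing or hair."
        )
    return prompts
```

Calling `review(Draft("Suspicious black male near the park"))` returns two prompts in this sketch, one for length and one for missing descriptors. A simple word match like this would miss plurals and misspellings, so a real system would need something more robust.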

Nextdoor co-founder and CEO Nirav Tolia told the Daily Dot the prompt for additional information was inspired by the Oakland police department, which told Nextdoor “they don’t want more police reports, they want more information.”

The process may seem somewhat counterintuitive for a social network, which generally wants to remove as much friction as possible in the posting process. But Nextdoor is making it harder to post certain information, guided by the belief that quality is much more important than quantity.

Thus far, that concept seems to be working. In tests run by the company, which eventually reached as many as 80,000 neighborhoods, Nextdoor saw a 50 percent increase in abandonment—posts that users started and then decided to ditch.

That would typically be a problem, unless the posts that were abandoned contained the type of content the platform would rather didn’t appear in the first place.

As for the posts that did make it through the new algorithmic check, Tolia reported Nextdoor saw incidents of racial profiling drop by 75 percent. “We did not anticipate this type of improvement,” Tolia said.

He explained that about 20 percent of posts on Nextdoor are made in the crime and safety section, and “less than one percent of one percent” contain racial profiling. But the company holds “even one post is too many” when it comes to racial profiling.

Tolia believes Nextdoor “serves as a mirror in the neighborhood,” so seeing racial profiling on the site is likely an accurate, if ugly, reflection. “We believe strongly that racism is one of the worst, most difficult issues society faces,” he said.

While the company is pleased with the amount of improvement it has seen, Tolia is under no illusions that the impact will change the deep-rooted problems that lead to the posts, no matter how much Nextdoor manages to discourage them. “A few changes that a website makes won’t cure racism,” he said.

Nextdoor’s changes won’t end racial bias, but they may at the very least help curb racial profiling, a practice that little evidence suggests is effective in preventing crime.

Take, for example, a 2008 report by the ACLU of Southern California analyzing more than 700,000 police stops made by the Los Angeles police department. It found that black and Latino drivers were more likely to be stopped than their white counterparts. When stopped, black drivers were 127 percent more likely to be frisked and 76 percent more likely to be searched than stopped white drivers. Stopped Latino drivers likewise were 43 percent more likely to be frisked and 16 percent more likely to be searched than stopped white drivers.

Stopped black drivers were 29 percent more likely to be arrested, and stopped Latino drivers were 32 percent more likely to be arrested, than stopped white drivers.

Despite the disproportionate attention paid to targeting people of color, the ACLU reported black drivers were 42.3 percent less likely to be found with a weapon after being frisked, 25 percent less likely to be found with drugs after being searched, and 33 percent less likely to be found with other contraband than white drivers. The statistics were similar for Latino drivers.

There is no shortage of reports that show similar findings, including considerable evidence that people of color are far more likely to be targeted by law enforcement for drug crimes, and receive much stiffer penalties, despite using drugs at a rate essentially identical to their white counterparts.

Nextdoor’s new tools won’t do away with profiling, but the platform may manage to stop some of the unnecessary and unfounded stereotypes from being spread within the online gated communities that it has created. And that’s, at the very least, a start.