If a teenager is struggling with depression, they might be wary of turning to a parent, a guidance counselor, or even friends. But they could feel comfortable doing something they already do every day: sending a text.

Crisis Text Line is facilitating these types of interactions 24 hours a day. Crisis volunteers sit at computers, waiting for texts to come in, and support individuals in the throes of personal or mental crises. They’re not certified therapists, but rather volunteers from all walks of life who can empathize with struggling individuals, provide support, and escalate conversations to professionals if need be.

Nancy Lublin, founder and CEO of Crisis Text Line, created the company after her time at DoSomething.org, a nonprofit that empowers young people to give back to their communities. The organization runs text campaigns to reach young users; when it sent mass texts, something curious happened: people texted back about very intimate and personal struggles.

Realizing there was an urgent need for SMS-based support, Lublin founded Crisis Text Line in 2013, and the organization has since processed almost 18 million text messages on the platform. On Wednesday, Lublin announced the company is using Twilio’s SMS services to power its conversations, and plans to expand internationally over the next couple of years.

“We are not advice, we are not therapy,” Lublin said in an interview. “We’re helping people get from hot to cold and trying to identify coping skills that work for them.”

Seventy-four percent of texters are between the ages of 13 and 25. Six percent are under the age of 13, an age group that has, for the first time, become the fastest growing for suicides in the U.S. The organization is providing a crucial, tech-centric service that appeals to a younger generation accustomed to texting and tweeting. A suicide risk assessment is required for each conversation.

As all these text messages come in, Crisis Text Line collects anonymized data about the individuals and provides comprehensive, unprecedented datasets to researchers, journalists, and others interested in learning about patterns in mental health.

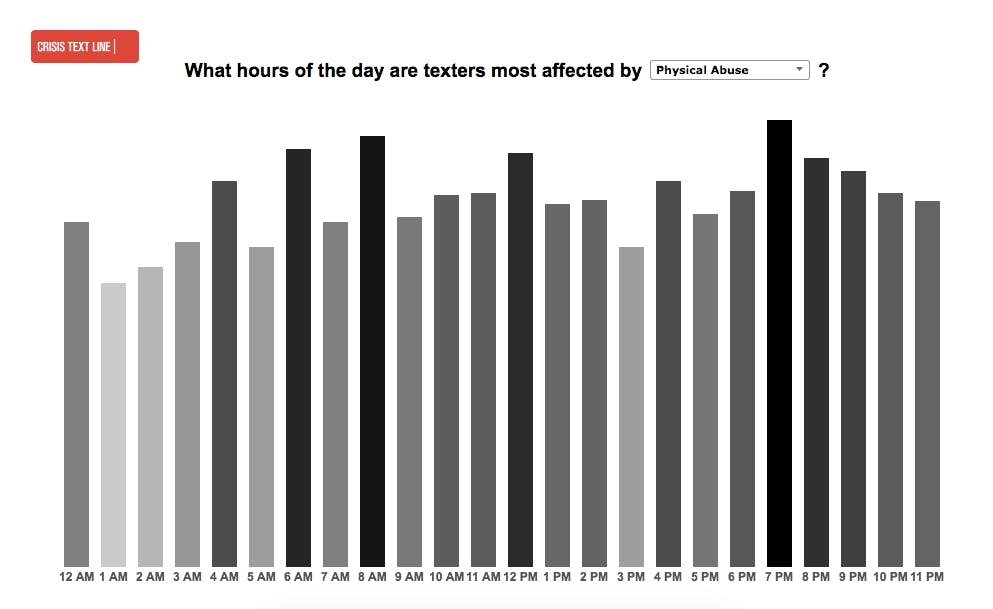

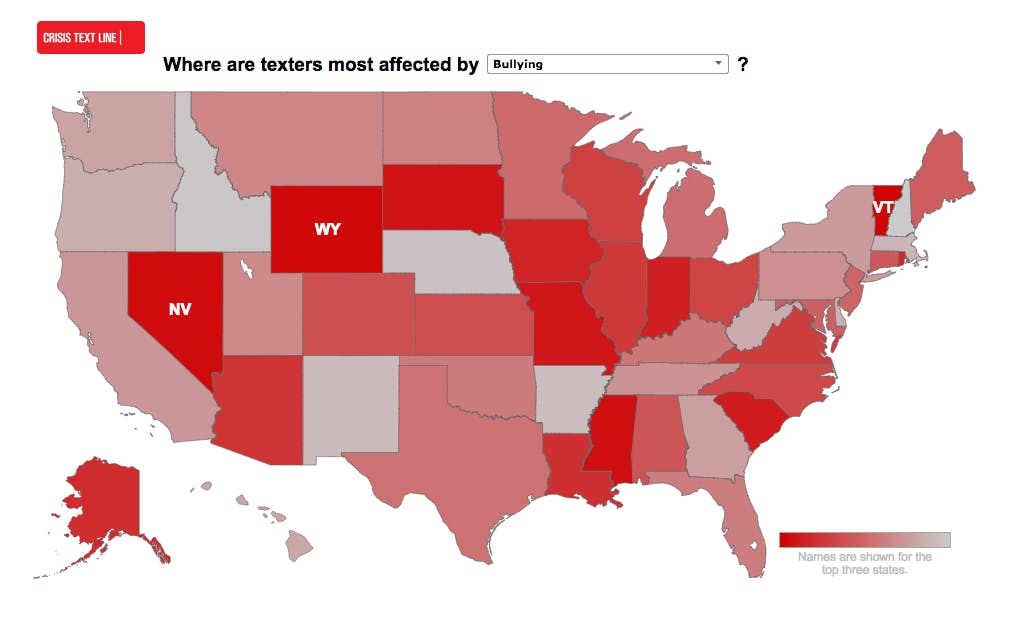

For instance, Crisis Text Line knows when people suffer from eating disorder attacks most frequently, or when there’s a spike in drug use or domestic violence. The organization knows which days people text less about homosexuality (Friday) and that prom season leads to major spikes in anxiety around body image and finances. Bits of this anonymized data can be found publicly on the company’s trends page; interested researchers must go through a rigorous application process to make sure they’re using the data appropriately.

“Self-harm and cutting happens as much during school hours during the week as at home. I always assumed self-harm was something you did alone at home in your bathroom or bedroom, but it’s also happening at school,” Lublin said. “If I ran a college dorm or a summer camp or a hospital or police department, I would want to look at this data.”

When someone texts the crisis line, they’re met with a thank-you text and a follow-up text describing the privacy policy and asking what’s wrong.

Each text message conversation goes through three steps: software analyzes the text to determine the severity of the issue; a chat box pops up for a volunteer to select and begin chatting with the individual; and, if necessary, the texter is escalated to a full-time staff member who is better equipped to deal with extreme situations.
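To make those three steps concrete, here is a minimal Python sketch of a conversation moving through them. It is an illustration only, not Crisis Text Line's software: the stage names, the `ALLOWED` table, and the `Conversation` class are all hypothetical, and the sketch assumes a conversation can only move forward, one step at a time.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Stage(Enum):
    """Hypothetical stage names for the steps described in the article."""
    RECEIVED = "received"              # text has arrived, not yet analyzed
    TRIAGED = "triaged"                # step 1: software has rated severity
    WITH_VOLUNTEER = "with_volunteer"  # step 2: a volunteer picked it up
    WITH_STAFF = "with_staff"          # step 3 (optional): escalated to staff


# Assumption: each stage can only hand off to the next one.
ALLOWED = {
    Stage.RECEIVED: {Stage.TRIAGED},
    Stage.TRIAGED: {Stage.WITH_VOLUNTEER},
    Stage.WITH_VOLUNTEER: {Stage.WITH_STAFF},
    Stage.WITH_STAFF: set(),
}


@dataclass
class Conversation:
    stage: Stage = Stage.RECEIVED
    history: List[Stage] = field(default_factory=lambda: [Stage.RECEIVED])

    def advance(self, target: Stage) -> None:
        """Move to the target stage, rejecting skipped or backward steps."""
        if target not in ALLOWED[self.stage]:
            raise ValueError(
                f"cannot move from {self.stage.name} to {target.name}"
            )
        self.stage = target
        self.history.append(target)


conversation = Conversation()
conversation.advance(Stage.TRIAGED)         # software analyzes the text
conversation.advance(Stage.WITH_VOLUNTEER)  # volunteer opens the chat box
# Escalation happens only if the volunteer decides it is necessary:
# conversation.advance(Stage.WITH_STAFF)
```

Most conversations in this sketch would end at `WITH_VOLUNTEER`; the final hand-off to staff is the exception, which matches the "if necessary" in the description above.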

The volunteer’s interface looks a bit like a Google Hangouts chat box, and volunteers engage not only with people needing support but also with fellow volunteers who are online at the same time. Volunteers are in constant communication and can reach out to one another for help if they face a situation they don’t have experience with.

The company created an algorithm to recognize text patterns and words that signal the severity of a crisis. If someone texts, “I want to kill myself,” the message is designated orange and moved to the top of the queue so crisis counselors can deal with it immediately. The software recognizes speech patterns, word pairings, and slang terms that even humans might miss, ensuring that the people in most dire need of someone to talk to are supported most quickly.
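The queue-jumping behavior can be sketched with a priority queue. The Python below is a deliberately simplified stand-in, not the company's algorithm: the real system reportedly learns speech patterns, word pairings, and slang, while this sketch only checks a short, hypothetical phrase list. The phrase list, the `STANDARD` label, and the `TriageQueue` class are assumptions made for illustration; only the "orange" designation comes from the description above.

```python
import heapq
import itertools
from typing import List, Tuple

# Hypothetical phrases; a production system would use a trained model,
# not a hand-written list.
HIGH_RISK_PHRASES = ("kill myself", "end my life", "want to die")

ORANGE = 0    # most severe: lower number means served first
STANDARD = 1  # assumed label for everything else


def classify(text: str) -> int:
    """Return ORANGE if the text contains a high-risk phrase."""
    lowered = text.lower()
    if any(phrase in lowered for phrase in HIGH_RISK_PHRASES):
        return ORANGE
    return STANDARD


class TriageQueue:
    """Orders messages by severity, then by arrival order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._arrivals = itertools.count()  # tie-breaker keeps FIFO order

    def add(self, text: str) -> None:
        entry = (classify(text), next(self._arrivals), text)
        heapq.heappush(self._heap, entry)

    def next_message(self) -> Tuple[int, str]:
        severity, _, text = heapq.heappop(self._heap)
        return severity, text

    def __len__(self) -> int:
        return len(self._heap)


queue = TriageQueue()
queue.add("I had a rough day at school")
queue.add("I want to kill myself")
queue.add("I can't stop worrying about prom")

# The orange message is served first even though it arrived second.
severity, text = queue.next_message()
```

The arrival counter matters: without it, two messages of equal severity would be ordered by comparing their text, so an earlier texter could end up waiting behind a later one.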

Ellen Kaster is a Crisis Text Line volunteer. Part of her drive to help others going through extreme emotional experiences is inspired by a personal loss. Kaster’s 14-year-old cousin committed suicide three years ago.

“This to me is a way to bring life to her story, and she made a permanent decision to a temporary problem, and it was hard for her to see that,” Kaster said. “So every week I devote four hours to her and her story.”

Each Tuesday, she volunteers four hours, chatting with four to five people each night. This past year, on the anniversary of her cousin’s death, she talked to a young woman who was suicidal, and through texting, changed the girl’s mind about wanting to end her life.

Kaster has also experienced what Crisis Text Line calls an “active rescue.” If an individual is a minor and is being abused, or if they have met all the criteria on the suicide risk assessment, volunteers flag their supervisors, and if the supervisors agree with the risk assessment, emergency services in the area are alerted. Lublin said some parents don’t even know their children are suicidal when police show up at their door to help the child.

Crisis counselor volunteering is not for everyone: you must have a very strong sense of empathy and commit to spending four hours each week for one year talking to people. The conversations are often difficult to hear, Kaster said, but also very rewarding. She keeps an ever-updating note on her phone of the positive feedback she’s received from individuals she’s texted with.

To become a counselor, adults apply online and must go through a background check. Then, a 34-hour training session teaches counselors how to communicate with individuals in crisis. The organization has a 39 percent volunteer acceptance rate.

Soon, Lublin said, software will be able to suggest replies based on the conversation if the counselors get stuck, but it won’t rely on chatbots.

“We have a human-first policy; we don’t think this should be done by bot,” Lublin said. “We don’t believe in that. We think if you’re going to reach out to us with something that personal, you deserve a person. You could do this by AI, but we don’t think sentiment analysis is there yet enough to really mimic a human.”

Bots are becoming ubiquitous, and businesses and organizations are implementing them more and more as chat services begin to offer simple ways of building and executing automated services. Bots can hold almost human-like conversations through language recognition and machine learning, though people can often tell whether they’re talking to a robot or a human. Ultimately, the goal is that bots will take over for humans who deal with customer service or support in many industries.

Therapy, however, is one area of human-to-human communication that can’t simply be replaced by bots. The digitization of therapy and support is controversial, especially since computers have no way of understanding or exhibiting human emotion. Researcher Natalie Kane explains the trouble with therapy chatbots:

> Therapy chatbots have the potential to fall into a category that I call “means well” technology. It wants to do good, to solve a social problem, but ultimately fails to function because it doesn’t anticipate the messy and unpredictable nature of humanity. So much can go wrong as things move across contexts, cultures, and people, because there isn’t a one-size-fits-all technological solution for mental health. If we’re still arguing over the best way to offer therapy as human practitioners, how will we ever find the right way to do it with bots, and who gets to decide what is good advice?

Kaster said a surprising number of people ask whether she’s a bot and want her to prove that she isn’t one. It’s an interesting pattern that underscores just how digitally native the people texting the service are.

Bots won’t be used, but the organization needs more people. The number of texters is growing faster than crisis counselors can manage. The organization currently has 1,497 volunteer crisis counselors, and Lublin hopes it can get to 3,000 by the end of the year.

“Every conversation reminds me to be kind to people,” Kaster said. “It sounds very generic, but I have conversations with texters that I should be having with people in my life.”