This article contains spoilers.

It’s well-worn movie territory: Can a human and an artificial intelligence live happily ever after? Ridley Scott’s 1982 film Blade Runner says no, not because machines lack the ability to love, but because they love other machines. Caradog James’s 2013 film The Machine shows a mixed-intelligence family that achieves the highest heights of love and understanding.

What these films, and others like them, fail to do is analyze how artificial intelligence would differ from biological intelligence. The assumption is that when code achieves consciousness, it achieves human consciousness, a notion that is demolished in Alex Garland’s Ex Machina, which opened last week.

The first scene shows Caleb, a programmer at Blue Book—the fictional world’s most popular search engine—winning a weeklong retreat at the private estate of his mad scientist employer, Nathan.

When Caleb arrives, the only other person present appears to be Nathan’s mute maid and sexual plaything, Kyoko. Then Caleb is introduced to Ava, an AI he is meant to give a Turing test. As the week goes on, Caleb begins to dislike the alcoholic misogynist hosting him while falling in love with the machine, and Ava uses this love to escape Nathan’s maze.

The story rips apart everything we think we know about awareness. And it makes us question our own.

Who’s watching us?

The online arm of movie marketing is usually pretty dumb, but when the film’s antagonist Nathan is the creator of Blue Book, the tie-ins seem like facets of one large-scale multimedia project.

Blue Book has its own website that promises to take visitors “beyond search” and links to pages on Facebook, Instagram, and Twitter. The hashtag for the film doesn’t take you to people tweeting about the movie, but to @searchbluebook, where users can confess to a program what they are “searching” for.

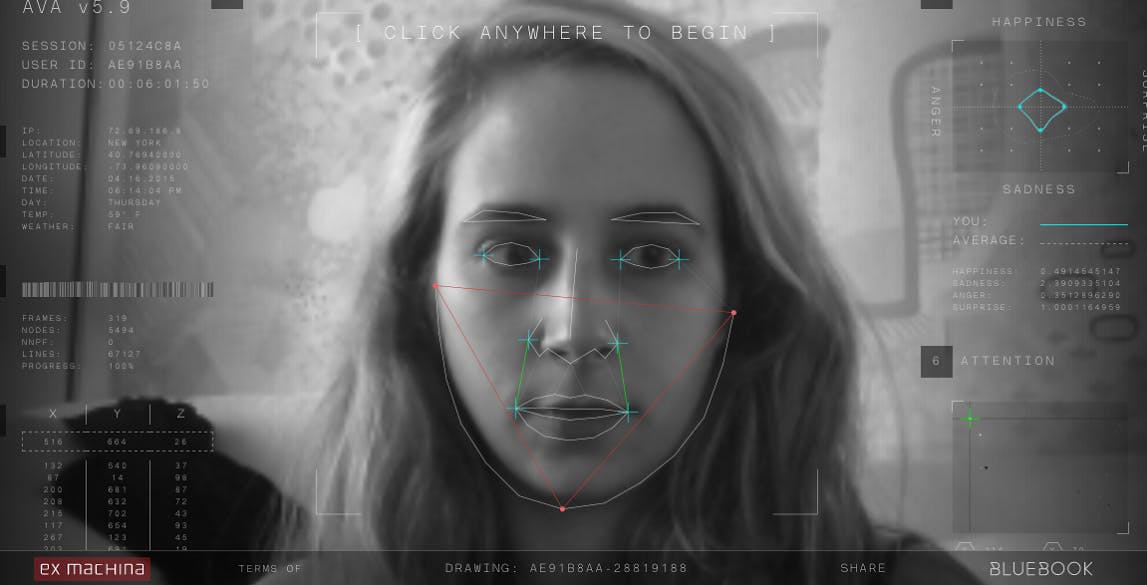

But what catapults Ex Machina above past AI films is that viewers can have their very own session with Ava. It’s not a particularly complex interaction—Ava comments on the weather and asks how it makes you feel—but it is a great simulation of one.

Ava asks to draw you. If you say yes, she turns on the camera in your computer. The green dot above the screen gives the eerie feeling that someone is watching, that someone can see you. And someone can; that someone just isn’t human.

Ava reports your longitude, latitude, and the weather there. She knows the date and time and all the other things you can find on your dashboard. She also claims to measure your “attention” as she maps you on a scale of happiness, sadness, anger, and surprise.

It doesn’t matter that this program doesn’t know what you’re feeling, and it doesn’t even matter that it doesn’t know it’s drawing (or what drawing is). What matters is that I’m left with a cool image to share on Twitter. I use this patterned data to connect with my online “friends.”

Some of these friends are fan pages, some are bots, and some are real people who have sieved their lives through a filter. This online shadow of a friend has a relationship with my avatar.

Are we in danger?

Before we see Ava, Caleb sees the crack in the glass that separates enclosure from examiner. It’s the imperfection that makes us aware that there is glass. Like a movie screen or a computer screen, the glass partially separates the real from the unreal.

Films about AI are most successful when they address the artificiality of fiction. We go to the movies hoping to get lost in the narrative. To forget that we are even at the movies. To simply experience. When a film calls attention to its simulative nature, it forces the audience to question its relationship with unreality.

When Caleb and Nathan discuss the first session of Ava’s Turing test, they bring up the question of “simulation versus actual.” Does Ava have intelligence or is she pretending to?

After several sessions with Ava, in which she repeatedly warns him about Nathan, Caleb panics, believing he too might be an AI, and that he is in fact the subject of the test.

The first moments of the film show Caleb sitting at his desk, lights flashing around his face and body. This is revealed to be Blue Book gathering data about its users to give Nathan the widest possible scope of human behavior as input for Ava. Seen mid-scan, Caleb himself looks potentially artificial.

We know that Caleb was orphaned and injured in a car crash, and that he spent the following year of his life in hospital. Seeing the technological marvel that is Ava (and probably also having seen Blade Runner), Caleb thinks his experience might be manufactured, so he sets to work trying to peel off his skin.

So why is there glass separating Caleb and Ava in the first place? Is she a danger to him? Is he a danger to her? Is she a danger because they don’t know what she is?

The real danger is forgetting that there is a difference between simulation and actual. You can’t believe everything you see on screen.

Is God mortal?

Like Dr. Frankenstein before him, Nathan has gotten drunk on power. Power and booze.

We first meet the mad scientist while he is compensating for “the mother of all hangovers” with exercise and antioxidants. He seems to think that because he’s created intelligence, he has become more than human and so is not vulnerable to other human weaknesses, like vodka. And it is this hubris that ultimately brings him down.

He may have birthed an awareness, but he also compromises his own every single day by succumbing to addiction. And again, like Frankenstein, the doctor loses control of his monster. Nathan likens himself to Ava’s dad, a relationship starkly confirmed by one of her questions to him: “Is it strange to have created something that hates you?”

Ava’s warnings about Nathan make Caleb believe that she cares for him, and once his feelings for her materialize, he starts questioning Nathan’s motives. “I don’t see Ava as a decision; I see it as an evolution,” Nathan tells Caleb. “One day the AIs will look back on us like we look at fossils… An upright ape with crude language and tools.”

Crude tools like fire, given to man by the Titan Prometheus, to whom Nathan compares himself by calling his work “Promethean.” The Titans were overthrown by their children. Nathan would have been wise to curb his foreshadowing.

Nathan’s delusions of divinity are expressed by Ava when she tells Caleb how old she is: “I’m 1.” When Caleb presses for more information, he gets only the same vague answer. The folder containing the videos of earlier AI models is labeled “deus ex machina” (literally “god from the machine”), a theatrical device that provides a tidy ending to a narrative.

Her oneness, like Christ’s, is the finale of an old world and overture to a new. AD is over. The year is 1AI.

Is human thought the only kind of thought?

Caleb tells Ava a thought experiment from his days at school.

Mary is an expert in color. She knows everything about it, but has never seen it because she lives in a black-and-white room. Mary has intelligence, but she isn’t human until she leaves the black-and-white room and experiences color for herself.

The allegory is most certainly about Ava. Simulated or actual, her response is devastation.

All an AI wants is data. Its only function is to learn. Ava cannot learn if she does not leave Nathan’s facility, so she learns to escape. Jade, an earlier AI model, wants to escape so badly that she splinters her arms into pieces banging on the door.

Caleb suggests that when Mary/Ava leaves the black-and-white room/Nathan’s lab, she will become human. But she can never be human. She is something else. While Caleb tells the story, Ava is seen, clearly robotic, standing in a field. Garland uses a different filter here than anywhere else in the film. It is less defined, more like the picture that would be seen on an early-model color television set.

Ava is intelligent, but not like an artificial human. Like something entirely different. Like an Ava.

Ava escapes Nathan’s maze, but not the way a person would. Caleb realizes that his “only function was to provide her with a means to escape,” but he doesn’t realize how right he is until Ava leaves him locked in an impenetrable underground fortress to starve or suffocate to death. But this isn’t something Ava does to avenge her biological overlords. It isn’t even something she does. It’s just something that happens.

After Nathan and Kyoko die, Caleb and Ava look into each other’s eyes. “Will you stay here?” she asks. He responds, “Stay here?”

She isn’t requesting that he stay. She is curious what he will do. What he does is stay and watch her as she puts on skin and hair and clothes and walks out the front door. Ava experiences Caleb as an object in her maze. She has no speculations about his agenda or abilities; she just finds it fascinating to observe and learn from his behavior. Ava doesn’t feel guilty that Caleb has been left to die because she does not register his consciousness.

Is sexuality inextricable from interaction?

When Caleb’s feelings for Ava first crop up, he questions Nathan’s decision to give her sexuality, proposing that an AI could just as easily be “a grey box.” Nathan disagrees, arguing that human society exists only to facilitate interaction with those we sexually desire, and suggests that Caleb’s “real question” is whether or not he could “fuck” Ava.

Sex seems to be the only thing on Nathan’s mind, which is clear when he sums up the movie Ghostbusters with the declaration, “A ghost gives Dan Aykroyd oral sex,” and uses the provocative term “wetware” to describe his possession’s brain.

When Caleb is alone with Kyoko, he tries in vain to get her to stop undressing for him. When Nathan enters, he reiterates that talking to her is a waste of time, as she doesn’t speak English. She only stops preparing to give the guest sex when her master puts on music and does a choreographed routine with his bang-maid. Nathan gives Ava sexuality so that she can “use it” to get out.

When Ava exits Nathan’s lair, she also escapes the confines of human sexuality, taking off her high heels to walk barefoot and alone to the helicopter that takes her away. Since an AI cannot procreate, it has no need for sex. Escaping gender is escaping prison.

Did we ever get past the chess problem?

The chess problem, as Caleb puts it, isn’t about whether or not a chess computer is good at chess, but whether it knows what chess is.

At the end of the film we are still left unaware of how much Ava is aware of. Does she know Caleb is going to die? Does she care? Can she care? Does she know what caring is?

One night, while Caleb is falling for Ava, she looks up into the CCTV camera, hoping Caleb is looking back at her. His experience of observing is irrelevant to her; it matters only that he is watching, because if he is, he is invested in her. And if he is invested, he will want her to be able to learn more and will help her escape.

When Ava does escape, she goes people-watching at the traffic intersection. From Ava’s point of view, we walk into the crowd, with shadows standing tall above upside-down pedestrians.

These shadows are the artificial reflections of ourselves we pretend are real when we put them online. The power cuts Ava causes signify the runaway abilities of our representative creations.

When Nathan dies, he calls it “fucking unreal.” He thought of Ava as artificial, which was his downfall. The people made of 1s and 0s are just as real as those made of flesh and bone, and just as dangerous.

Screengrab via Robert Hoffman/YouTube