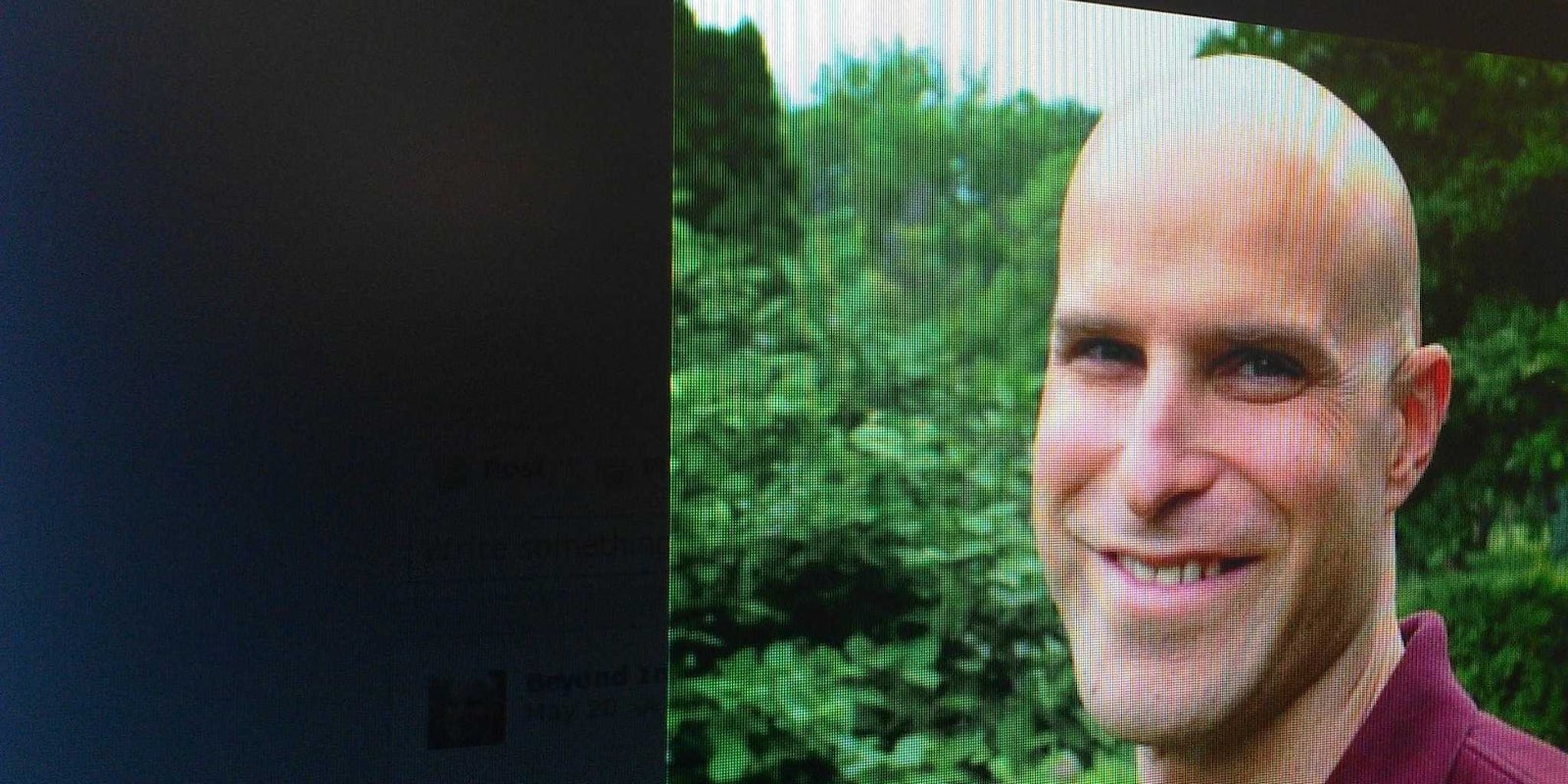

Meet Scott. He’s led a pretty amazing life: He’s got a master’s degree in business, ran a major national consulting firm, and saved lives as part of a “cave/cliff” rescue squad. That was all before he got brain cancer, which he beat.

It goes without saying that Scott is a very real and inspiring individual.

Facebook, however, has decided otherwise.

Scott’s aggravating story was relayed recently by his friend, Charles Apple, an editor at the Orange County Register in Santa Ana, Calif. Scott, who calls himself a “full time optimist,” runs a blog about his experiences. He wanted to build up his audience and, like just about everyone nowadays, figured Facebook would be a great place to do that, sharing how he overcame some of the most extraordinary adversities and offering some pretty valuable advice.

Here’s what happened next, according to Apple:

Scott received a warning that he had been friending people who didn’t actually know him outside of Facebook. Scott had difficulty understanding the complaint. And, in truth, I did, too. After all: Isn’t that the whole point of Facebook? Yes, to renew and maintain contact with old friends. But also to build a network of new friends?

It makes sense that a Facebook algorithm would watch out for fake accounts. There are a lot of them. Around the time of the company’s initial public offering last year, for instance, Facebook estimated in a Securities and Exchange Commission filing that about 9 percent, or 83 million, of its accounts were fake, including zombie accounts used to distribute fake likes and followers.

How could Facebook possibly think Scott was fake? Sure, he was making friends with people whose social circles probably didn’t overlap with his. But a good proportion of the people Scott was friending had been recommended to him by Facebook.

Scott did everything Facebook demanded, but soon his personal profile disappeared completely. And that’s when things got really weird.

To reactivate his account, Scott next had to jump through a series of tests administered, no doubt, by an inhuman Facebook algorithm. First, he had to complete a Captcha, the security tool that helps separate humans from robots by scrambling words. Scott, however, has lingering vision problems due to his fight against brain cancer. It was hopeless, and neither Facebook nor Captcha provided him any other option. He had to call in Apple for help.

Next up: Facebook demanded that Scott identify his Facebook friends in pictures in which they had been tagged. This is a hilariously poor way to judge relationships. Facebook’s tagging feature is far from perfect; it’s susceptible to trolling and plain mistakes. I was tagged once in a photo of the brochure for my college graduation. It would be hard for anyone to pick me out from a picture of a pamphlet.

Scott ran into just that problem.

Two of the three photos that were supposed to contain photos of his real friends weren’t even photographs. They were advertisements someone had uploaded to their wall. They went through three rounds of this and, on the final round, Scott failed. He couldn’t recognize a particular woman.

Facebook didn’t care about Scott’s visual and memory problems—the lingering effects of his harrowing fight with brain cancer. He was not real, Facebook determined. And he’s still locked out of his account. His blog’s page is still there, but he can no longer use it. His last post was from May 20.

“It’s not intentional discrimination,” Apple concluded. “But the result is discriminatory, just the same.”

Photo via Facebook