I stopped broadcasting via Periscope soon after I started. The app was, to me, a cesspool of misogynistic and harassing comments, yet another service where I would make content to be judged in real time, primarily by trolls. The company’s safety update, which it is rolling out on Tuesday, could make me reconsider.

Periscope is implementing new tools for reporting and assessing harassment. The live-broadcasting app is turning to its community to act as judge and jury of what is considered ban-worthy conduct.

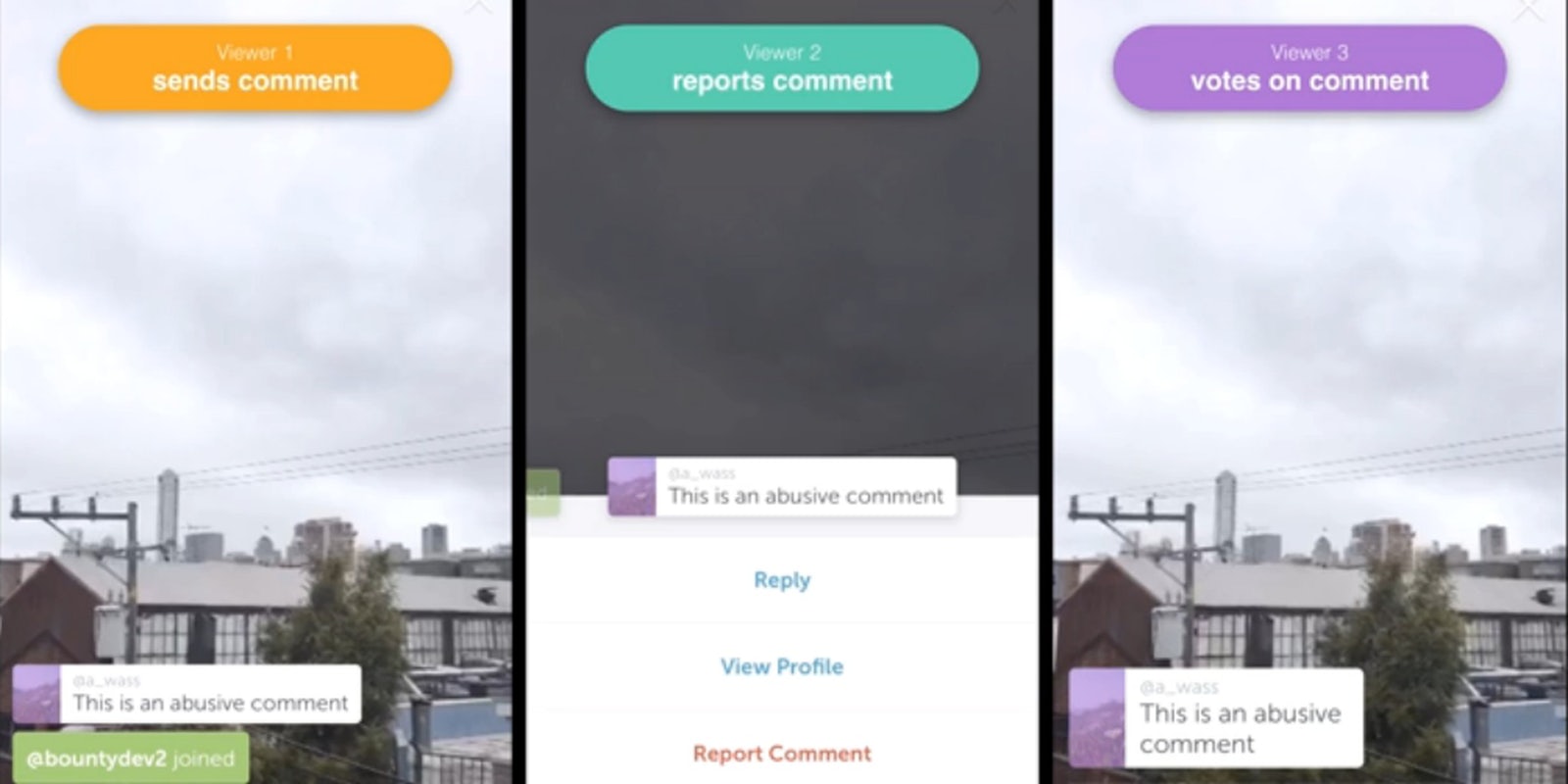

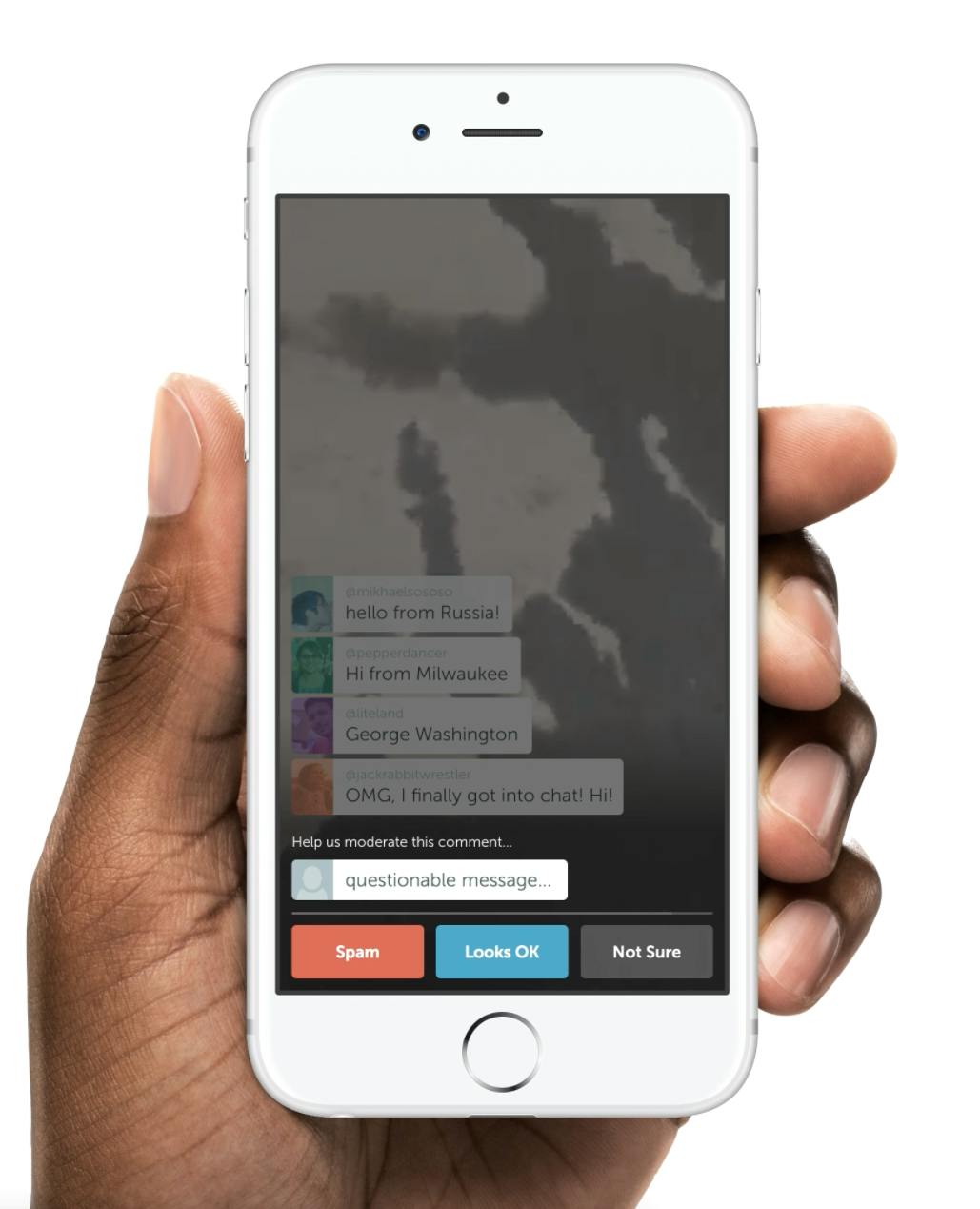

The new tools are somewhat complicated to explain but quite smooth in execution. The three-step process starts with a viewer reporting a comment for harassment or spam. Periscope then sends the reported comment to a “jury” of five fellow viewers, who decide whether the comment is appropriate. If it is deemed harassment or spam, the commenter is alerted and banned from commenting for one minute. If the commenter posts another comment on the video that the jury agrees is inappropriate, they are banned from commenting for the rest of the video.
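For readers who think in code, the flow above can be sketched roughly as follows. This is an illustration only, not Periscope's implementation: the names `pick_jury` and `BroadcastModeration` are hypothetical, and excluding the reporter and the commenter from the jury is an assumption the company did not confirm.

```python
import random
from dataclasses import dataclass, field

JURY_SIZE = 5                     # "a jury of five fellow viewers"
FIRST_OFFENSE_MUTE_SECONDS = 60   # "banned from commenting for one minute"


def pick_jury(viewers, reporter, commenter, rng=random):
    """Pick a fresh random jury for one report.

    Assumption: the reporter and the reported commenter are not eligible.
    """
    eligible = [v for v in viewers if v not in (reporter, commenter)]
    return rng.sample(eligible, min(JURY_SIZE, len(eligible)))


@dataclass
class BroadcastModeration:
    """Tracks jury-upheld reports per commenter within a single broadcast."""

    strikes: dict = field(default_factory=dict)

    def record_upheld_report(self, commenter):
        """Call when a jury agrees a comment was abuse or spam."""
        self.strikes[commenter] = self.strikes.get(commenter, 0) + 1
        if self.strikes[commenter] == 1:
            return f"muted for {FIRST_OFFENSE_MUTE_SECONDS} seconds"
        return "muted for the rest of the broadcast"
```

In this sketch a new jury is drawn for every report, and the penalty escalates only on a second upheld report within the same broadcast.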

Everything but the reported comment is anonymous. No one knows who reports or votes; it’s like a jury of anonymous viewing peers that is rotated each time a comment is reported. And because it happens in real time, the entire reporting-voting-banning process takes about 15 seconds.

“No one on the team has felt satisfied with the state of things currently, and we want to do more,” Aaron Wasserman, engineer at Periscope, said in a presentation of the new features to reporters at Periscope’s headquarters in San Francisco last week.

The entire reporting system is unlike anything that currently exists on popular platforms. Live-video content lends itself particularly well to this type of community policing, but it’s still community policing and will only work if viewers are willing to recognize and get rid of abuse.

When Periscopers log in to the updated app, they will receive a notification about the new abuse tools. Should they choose not to participate in judging what content is inappropriate, they can opt out in the app’s settings. The in-stream pop-ups don’t really disrupt the live broadcast, so it’s a simple way of helping Periscopers avoid harassment without the onus being placed on the broadcaster to continually block users they find offensive. People have watched 200 million Periscope broadcasts in the last year, and view 110 years’ worth of live video across apps each day.

Community policing is not a new idea—people have been able to flag and report content across various platforms for years—but the concept of making others the real-time judge and jury certainly is. It probably wouldn’t work in wildly toxic environments known for harassment, but perhaps on platforms with a variety of content, creators, and participants, the community will embrace the responsibility to keep itself safe.

As comments get reported and accounts get banned, Periscope is collecting all that data to figure out what kinds of abuse are happening on its platform, who receives it, and who is regularly contributing to it.

Eventually, Wasserman said, automated systems will be able to detect accounts that regularly harass others and patterns in abusive content.

“We are going to be able to gather information about the nature of messages that get reported for abuse and are found to be abusive by the community,” he said. “Another entry point that we are going to have into the voting experience will be called ‘auto-flagging.’”

With auto-flagging, if Periscope recognizes a comment that contains content frequently flagged as abusive, the comment won’t be posted right away. Instead, it will go through the jury system before becoming public in the video. Only if the jury agrees it’s appropriate will it be shared.
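A rough sketch of that hold-then-review idea is below. Periscope did not say how it will recognize frequently flagged content, so the simple phrase matching here is a stand-in assumption, and `route_comment` and `release_held_comment` are hypothetical names.

```python
def route_comment(text, flagged_phrases):
    """Decide whether a new comment posts immediately or waits for a jury.

    Assumption: plain substring matching stands in for whatever
    detection Periscope actually builds.
    """
    lowered = text.lower()
    if any(phrase in lowered for phrase in flagged_phrases):
        return "hold_for_jury"
    return "post"


def release_held_comment(jury_says_ok):
    """A held comment becomes public only if the jury approves it."""
    return "post" if jury_says_ok else "discard"
```

The key difference from the viewer-reported path is the order of events: here the jury votes before anyone in the broadcast sees the comment.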

Community policing on Periscope is intriguing because the company is owned by Twitter, a platform that’s consistently struggled to address abuse. Periscope could act like a petri dish for Twitter’s safety efforts, testing community reporting on a much smaller scale before rolling out similar or automated tools to its own platform.

Already the Twitter community has created tools to help each other deal with harassment. Auto-blocking, group block lists, and crowdsourced reporting efforts are meant to help individuals circumvent Twitter’s oft-confusing policies in an effort to quell abuse.

Clear differences separate the real-time content platforms, like the ephemerality of Periscope’s videos and comments, and the sheer size of each platform. And it’s much harder to create sock-puppet accounts on Periscope the way people create multiple Twitter usernames to specifically target individuals. But Periscope’s approach does provide an interesting solution that could fundamentally change the way Twitter deals with abuse.

Anne Collier, founder of iCanHelpline, a social media helpline for schools, is an online safety expert who sits on the safety advisory boards of Facebook, Airbnb, Uber and Twitter. Collier said Periscope’s new tools are “innovative,” and let users provide both context and assistance in recognizing abuse in a way that’s both engaging and unobtrusive.

“I have to say, I was really impressed,” Collier said in an interview. “The hardest thing is that users themselves haven’t yet discovered their superpowers for social change on social media. The industry has been working hard to help users to engage them in this social change. Making things more simple, making communities work better from participants.”

When a person receives a message to judge if it’s spam or harassment, they are given three options: Abuse/Spam, Looks OK, or I’m not sure. Yes and no votes are weighted more than indifference, so if two people say “Abuse/Spam” and three say “I’m not sure,” the comment will be removed.
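Periscope did not publish the exact weights. One possible reading, sketched below as an assumption, is that “I’m not sure” votes are simply set aside and the decisive votes are compared. The function name `jury_verdict`, the vote labels, and the tie-goes-to-the-commenter rule are all hypothetical.

```python
from collections import Counter


def jury_verdict(votes):
    """Tally one jury's votes: each is "abuse", "ok", or "unsure".

    Assumptions: "unsure" votes carry no weight, and a tie between
    decisive votes keeps the comment.
    """
    counts = Counter(votes)
    if counts["abuse"] > counts["ok"]:
        return "remove"
    return "keep"
```

Under this reading, two “Abuse/Spam” votes and three “I’m not sure” votes would remove the comment, because the undecided jurors do not count against the two who flagged it.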

The updates rolling out on Tuesday are the company’s first iteration of the comment-judging system. Eventually, other features may include more data-based policing, including identifying users who are trustworthy abuse reporters. If someone regularly reports spam or harassment, and the community agrees, eventually that person’s reports should carry more weight than those of other individuals.

This isn’t the first time Periscope has placed emphasis on safety features. Last year, Periscope rolled out a safety update that detects harmful comments before they’re posted and asks commenters to confirm they want to share them. If a profane or inappropriate word is detected, a commenter will see: “Are you sure you want to send this message? Messages like this frequently get blocked. If you get blocked too many times your comments will no longer be seen.” Wasserman said that a week after that feature debuted, 40 to 50 percent of people who received the message declined to post their comment.

Additionally, broadcasters can block accounts from viewing broadcasts, and allow only people they follow to view them. Engineers wanted to put less responsibility on the broadcaster with the latest updates, turning to the community to police itself.

“We’re hoping that as this system gets smarter and as we learn more about it, we can become more preventative,” Wasserman said. “Ultimately the goal is to make it as hard as possible on the platform to find gratification being abusive.”