Warning: This article contains explicit language that may trigger or upset some readers.

Reddit user yellowmix joined the team operating the r/BlackLadies subreddit about a year ago after growing sick and tired of being harassed. They were regular users of the r/BlackGirls subreddit, but as the community became increasingly swamped by a flood of posts from racist, sexist trolls, r/BlackGirls’ moderators appeared to be doing almost nothing to actively stem the tide.

Frustrated, a bunch of r/BlackGirls users decided to split. They created a new subreddit called r/BlackLadies with the intention of carving out a safe space for African American women to discuss the issues important to them in an environment free from racist jerks.

And it worked, at least for a while.

The r/BlackLadies community grew into a place where nearly 5,000 subscribers chatted about everything from dealing with racism in the workplace to what Beyoncé wore when she walked down the red carpet. But then Ferguson happened. The controversy over the shooting death of an unarmed black teenager by a white cop in a St. Louis suburb seemed to bring out the worst in many users of a site that bills itself as the “front page of the Internet.”

In the wake of Ferguson, anonymous Reddit users on burner accounts deluged r/BlackLadies with racist posts and comments. The moderators, all volunteers rather than paid Reddit employees, tried to delete the hateful content as best they could, but the entire experience exhausted and demoralized them. They contacted Reddit’s management, but were told that, because the trolls weren’t technically breaking any of the site’s core rules, there was nothing Reddit would do about it.

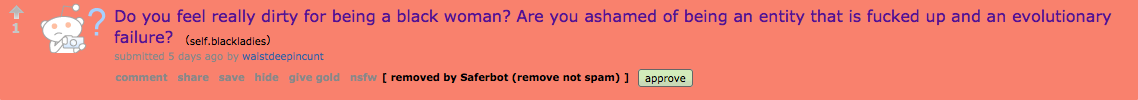

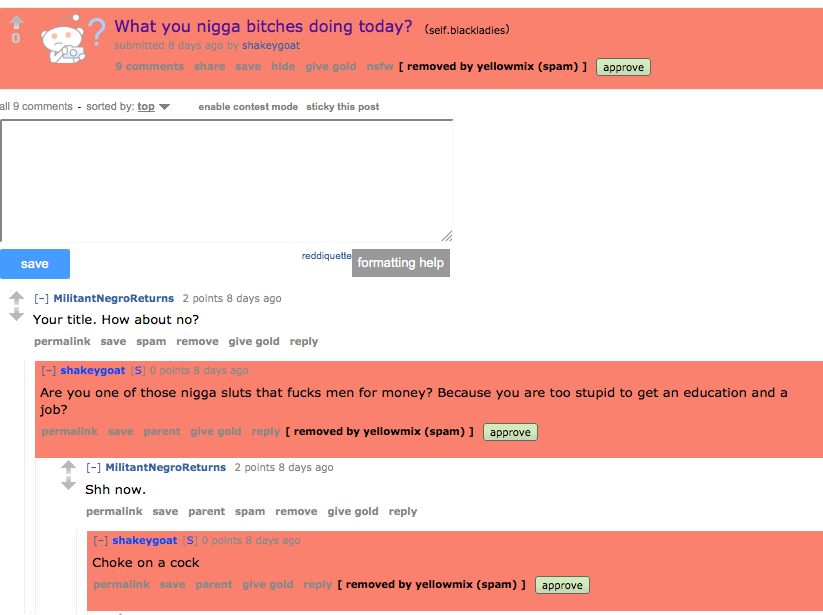

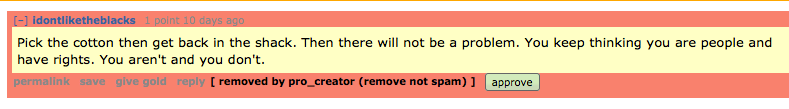

The women of r/BlackLadies were on their own to go through the subreddit on a near-hourly basis and hand-delete content like these:

Frustrated, the moderators of r/BlackLadies posted an open letter to Reddit’s management on Monday, calling for them to do something, anything, about the problem.

“Since this community was created, individuals have been invading this space to post hateful, racist messages and links to racist content, which are visible until a moderator individually removes the content and manually bans the user account. All of these individuals are anonymous, many of them are on easily-created and disposable (throwaway) accounts, and they are relentless, coming in barrages. Hostile racist users are also anonymously ‘downvoting’ community members to discourage them from participating. reddit admins have explained to us that as long as users are not breaking sitewide rules, they will take no action.

“The resulting situation is extremely damaging to our community members who have the misfortune of seeing this intentionally upsetting content, to other people who are interested in what black women have to say, as well as moderators, who are the only ones capable of removing content, and are thus required to view and evaluate every single post and comment. Moderators volunteer to protect the community, and the constant vigilance required to do so takes an unnecessary toll.

“We need a proactive solution for this threat to our well-being. We have researched and understand reddit’s various concerns about disabling downvotes and restricting speech. Therefore, we ask for a solution in which communities can choose their own members, and hostile outsiders cannot participate to cause harm.”

The moderators of r/BlackLadies aren’t alone in feeling this way. As of Tuesday afternoon, the operators of at least 43 other subreddits have agreed to co-sign the letter.

The co-signatory subreddits run the gamut from r/DumpsterDiving to r/blerds (a community for African American nerds). The one thing nearly all of them have in common is a niche identity distinctly separate from Reddit’s middle-class, heterosexual, white, male norm.

The moderators of the r/rape subreddit, however, were especially enthusiastic to sign up.

The r/rape of today is very different from when the subreddit was first started. Originally run by Violentacrez, the granddaddy of all Reddit trolls, r/rape began as a place where Reddit users could post rape fetish porn. Violentacrez eventually handed r/rape over to a new set of moderators, who turned the subreddit into a community for victims of sexual violence.

For his part, Violentacrez moved that activity over to another subreddit called r/StruggleFucking (really), which is still active (really).

“Sadly the past of our sub means that we regularly get visitors who are sexually aroused by the stories that some of our users tell and often feel the need to inform them of that fact in the most graphic of ways possible,” r/rape moderator scooooot told the Daily Dot. “You could imagine how that makes a rape survivor feel, especially those with fresh trauma. And, of course, they also often get very abusive with us when they are inevitably banned.”

According to scooooot, the subreddit’s former top moderator was chased off Reddit after enduring an overwhelming amount of harassment from other users and having her real-world identity revealed, a practice called “doxing” that is strictly forbidden by Reddit’s rules. Today, r/rape’s moderators estimate that one-fifth of all content submitted to the subreddit is trolling.

Even so, the community’s moderators managed to turn r/rape into a supportive place for rape survivors and their loved ones. An auto-moderation bot helps, weeding out some of the most flagrant comments. But the subreddit’s moderators still have to read through every single post and comment thread manually to ensure no one is being attacked or degraded—a painful, grueling task.

Scooooot explained:

“Personally, there is nothing that I enjoy less than not only having to remove the messages that violate our rules but the messages of the person they are attacking. Not only were they forced to defend themselves and their account of events as if they were on trial, but I then remove their brave statements of defense. This is often the first time these survivors mentioned their rape to another person only to be told that they are lying or just a slut. And then I have to follow and take their words from them, usually because their half of the conversation can be triggering to other users. It doesn’t feel good.”

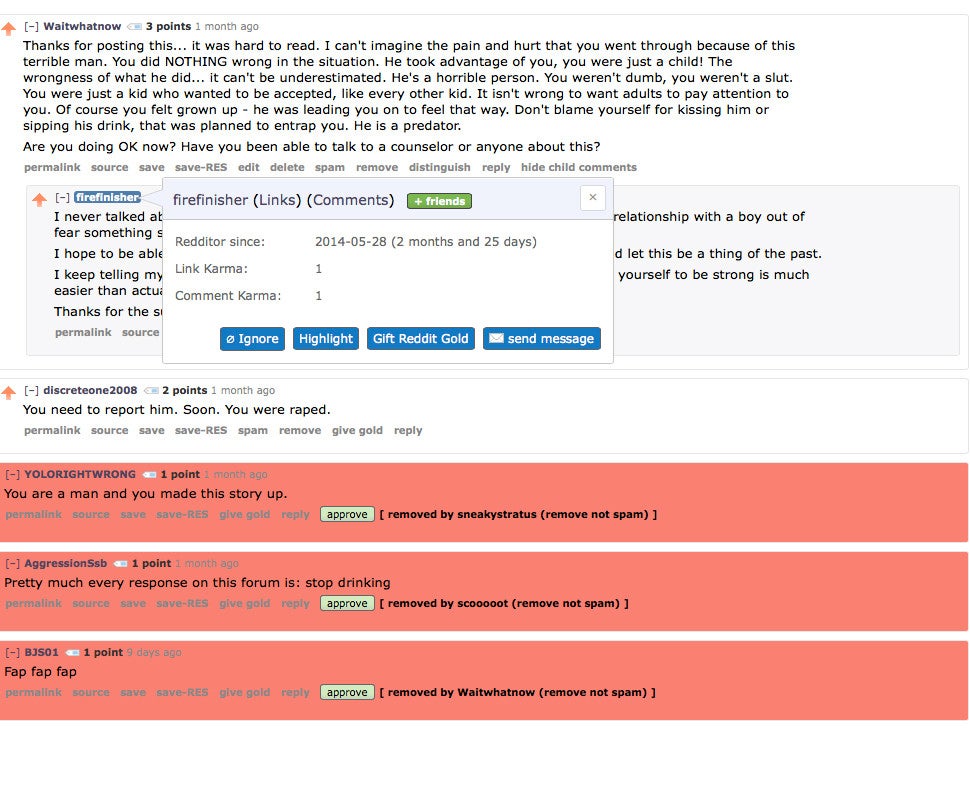

Here are a selection of comments the moderators have had to personally delete in the past month alone:

The problem doesn’t just extend to comments. Reddit has an internal messaging system that allows any user on the site to message any other. For the denizens of r/rape, that system has been consistently used as a venue for harassment—from verbal abuse all the way to rape and death threats.

“Initially, I was able to get these accounts … [permanently banned]. But a few months ago, the admins decided that rape threats were not a big enough issue to ban for,” explained r/rape moderator waitwhatnow.

Waitwhatnow recently reached out to a Reddit administrator for help dealing with an anonymous user who was repeatedly sending rape and murder threats to a sexual assault survivor and member of the r/rape community.

“I got no reply. Nothing was done,” waitwhatnow recalls. “I had about four or five users since then reporting similar problems, with screenshots, and I just had to tell them to block the person and that I’d ban the perpetrator from r/rape, but that was the best I could do. … Apparently, protecting the anonymous commenter’s ability to terrorize an innocent person is far more important to Reddit than user safety.”

“Waitwhatnow” is actually this moderator’s second Reddit account. Two years ago, shortly after she became active on sexual assault survivor subreddits, she was doxed by someone who had been harassing her through the site. “The person who did that threatened to kill my cat,” she said. “I also reported this to the admins and nothing was done.”

Representatives from Reddit did not respond to multiple requests for comment.

However, in a recent interview with The Wire, Reddit General Manager Erik Martin insisted that segregation was the key to ensuring Reddit was a place where anyone could feel comfortable contributing.

“As we do a better job of helping people find what they are looking for on Reddit and the communities they are looking for on Reddit and also help people do a better job of cultivating and creating the communities that they want I think there will be even more choices to participate and feel comfortable when they are there no matter what their interests are.”

Yet that argument quickly breaks down when even insular, supposedly protected communities are beset by trolls.

Reddit has been reluctant to ban users for doing things that don’t violate the site’s core rules, which forbid just four types of activity: posting spam, posting child pornography, vote manipulation, and sharing other users’ personal information. Outside of that, Reddit’s administrators have largely attempted to avoid discriminating against certain types of content just because other users find that content offensive. It’s an attitude that has allowed the site to flourish as a centralized hub where anyone can stake out a plot of virtual ground and start a conversation about whatever they’re interested in, no matter how outré.

While the site’s commitment to unfettered free speech is, at one level, commendable, some see Reddit’s motives in allowing this sort of content to flourish as less than idealistic.

“In case you’re curious why Reddit continues to allow these types of subs … you should ask yourself what kinds of traffic they generate,” scooooot says. “There was a reason it took them five years to shut down [popular pedophile pornography subreddit] r/jailbait. It wasn’t free speech; at that point, r/jailbait was generating an ungodly amount of traffic for Reddit, mostly from unregistered users. That equals money, and the ability to brag about one billion unique visits a month.”

Without support from Reddit itself, moderators in subreddits infested with trolls are typically left to their own devices—often with some success.

For example, some subreddits that would appear to be natural enemies have reached relatively organic ceasefires. The moderators of r/ShitRedditSays, where users go to point out examples of racist and misogynist content elsewhere on the site, have put a moratorium on content that originally appeared on r/MensRights, a subreddit with a tendency to post a lot of misogynistic content, because they view it as “low-hanging fruit.” Conversely, the moderators of r/MensRights will often delete posts coming from subreddits like r/rape at the request of those subreddits’ moderators. By keeping the communities at least partially separate, the moderators hope to keep angry, unproductive, all-caps commenting matches to a minimum.

Another solution to the troll problem is to take the subreddit private, meaning only users personally invited by the moderators would be able to read and post content. However, that is a route yellowmix rejects outright, noting that “being told to go private says that we must be invisible to be safe…tells the world that our speech does not belong in public.”

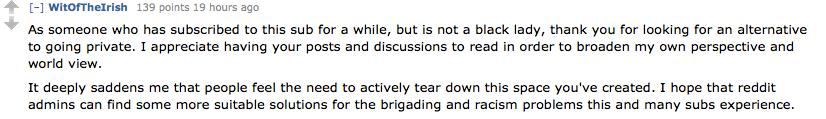

Other members or the r/BlackLadies community agreed with that sentiment. This is a top comment on the open letter post:

Reddit isn’t the only place online dealing with exactly these sorts of issues.

Earlier this month, the female-focused blog Jezebel published a blog post calling out its parent company, Gawker Media, for failing to deal with what it called the site’s “rape gif problem.”

Trolls were filling the comments sections of Jezebel posts with gifs of violent pornography that the editors had to comb through and manually remove, to the detriment of their job satisfaction and mental health. Despite repeated requests to deal with the issue, either by letting editors permanently ban users from commenting based on their IP addresses or by mandating that users post under their real names through Facebook, Gawker brass hadn’t prioritized a solution, preferring to err on the side of letting users submit anonymously in the hope that someone might submit a juicy tip.

The post sparked a massive outcry and, a few days later, Gawker rolled out a solution: a pending comments system where only users who had a proven history could automatically comment on articles. Unapproved users could still leave comments on Jezebel articles, but readers would have to click through to a special section in order to read them.

Yellowmix would like to see Reddit follow Jezebel’s lead and implement a “public view/approved participant” system for subreddits that choose to opt in, or do as similar online aggregator Fark now does and “experiment with some basic standards of human decency as part of the site rules.”

“The site’s culture will not change overnight, but they’ve historically done it with the rules on personal information, so it can be done,” yellowmix added. “At a minimum, we invite Reddit admins to commit to actively and directly work with communities affected by this problem toward developing solutions for it.”

However, not everyone in the subreddits besieged by trolls thinks the open letter is the best way to effect positive change.

The r/TwoXChromosomes community isn’t just the single largest and most influential subreddit dealing with women’s issues; it’s also the only one listed as a default, meaning that everyone who visits the site sees content from it automatically on Reddit’s front page. When r/TwoXChromosomes was named a default earlier this year, the community’s newfound prominence attracted a critical mass of misogynistic trolls.

As such, one would assume that its moderators would co-sign the letter, but one would be wrong.

“Racism and bigotry are highly complex problems that cannot be solved with the signing of a letter, or a website policing its users, or giving the trolls a spotlight. We are patently disinterested,” subreddit co-founder HiFructoseCornFeces explained. “Growing up means realizing that combating evil does not mean spending all of your energy stamping out every last flicker of fuckery, but in issuing forth genuine goodness whole-heartedly and with resonance.”

Illustration by Jason Reed

Correction: The article has been amended to clarify both yellowmix’s role in the creation of r/BlackLadies and the relationship between r/ShitRedditSays and r/MensRights.