A Redditor who had over a decade of medical issues solved with a diagnosis from ChatGPT is sparking conversation about the benefits of AI.

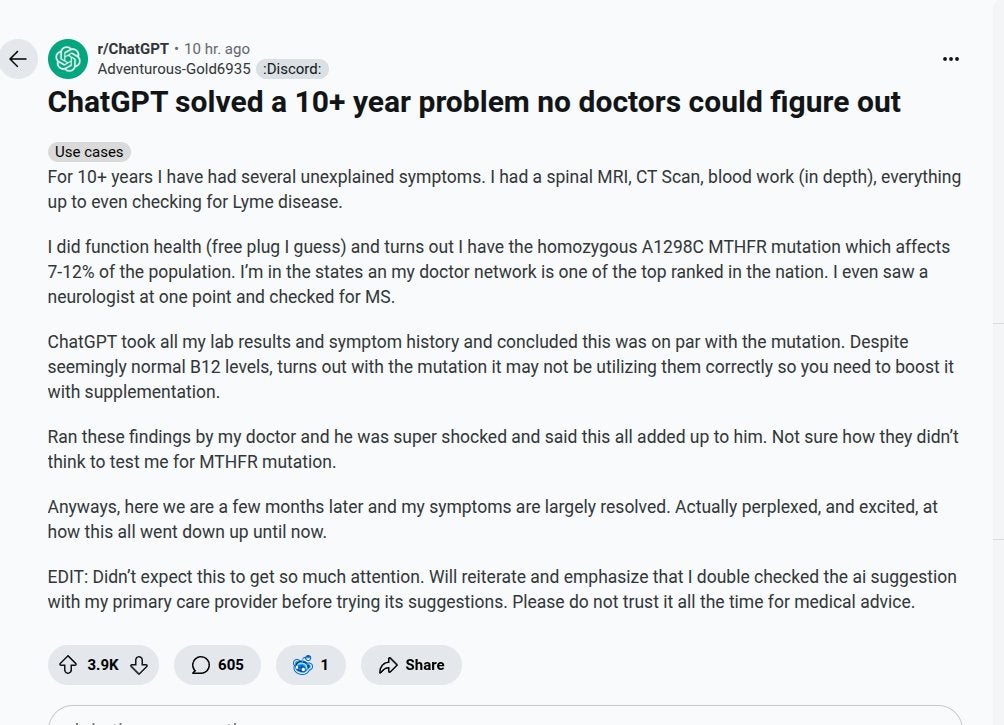

In a since-deleted post, u/Adventurous-Gold6935 shared that they had “several unexplained symptoms” over the course of 10+ years that led to doctors ordering “a spinal MRI, CT Scan, blood work (in depth), everything up to even checking for Lyme disease.”

Things took a turn when they utilized Function Health to determine they have a homozygous A1298C MTHFR mutation—something that affects somewhere between 5-20% of the population, depending on the source.

MTHFR mutations can (keyword: can) cause a shift in how a person’s body processes certain vitamins, resulting in a complex chain of events that can ultimately manifest in a variety of neurological or cardiovascular issues.

“ChatGPT took all my lab and symptom history and concluded this was on par with the mutation,” the redditor wrote. “Despite seemingly normal B12 levels, turns out with the mutation it may not be utilizing them correctly so you need to boost with supplementation.”

They approached their doctor with their findings and he “was super shocked and said this all added up to him.”

Other redditors share similar stories

Although ChatGPT is getting the credit as the story goes viral, it’s worth pointing out that it was actually genetic testing that found the mutation. The redditor didn’t go into further detail about their process, but it’s likely ChatGPT made or confirmed the correlation between the symptoms after the mutation was identified, albeit seemingly not by a doctor.

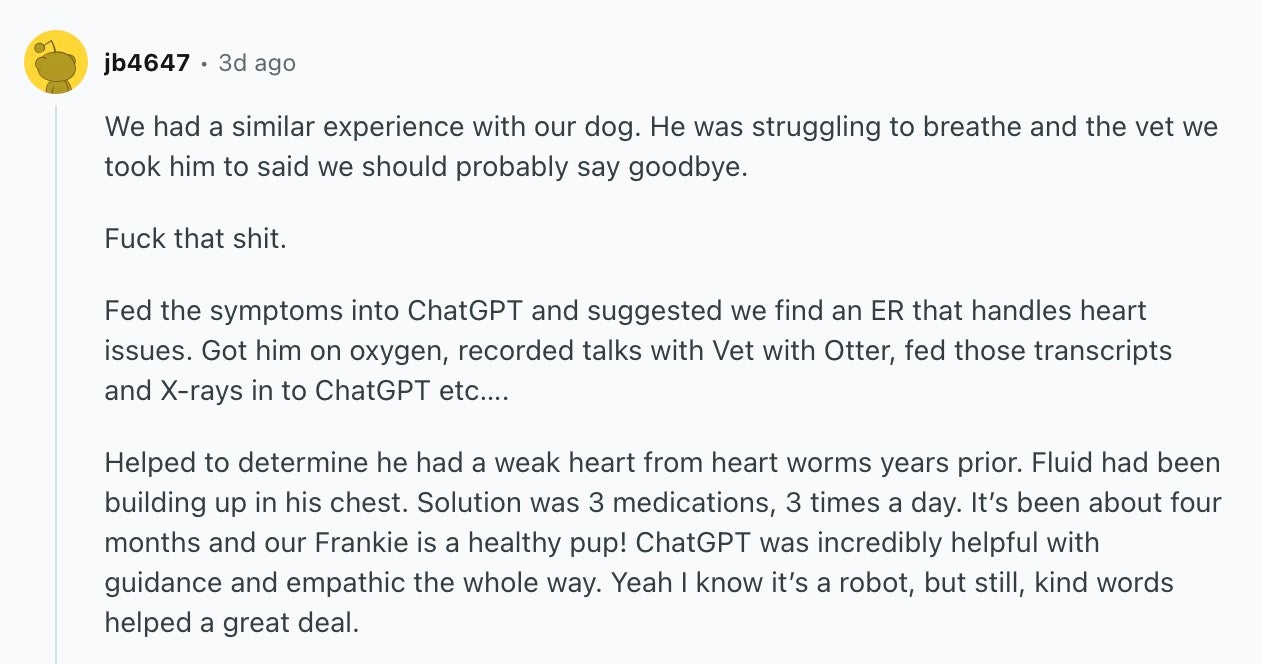

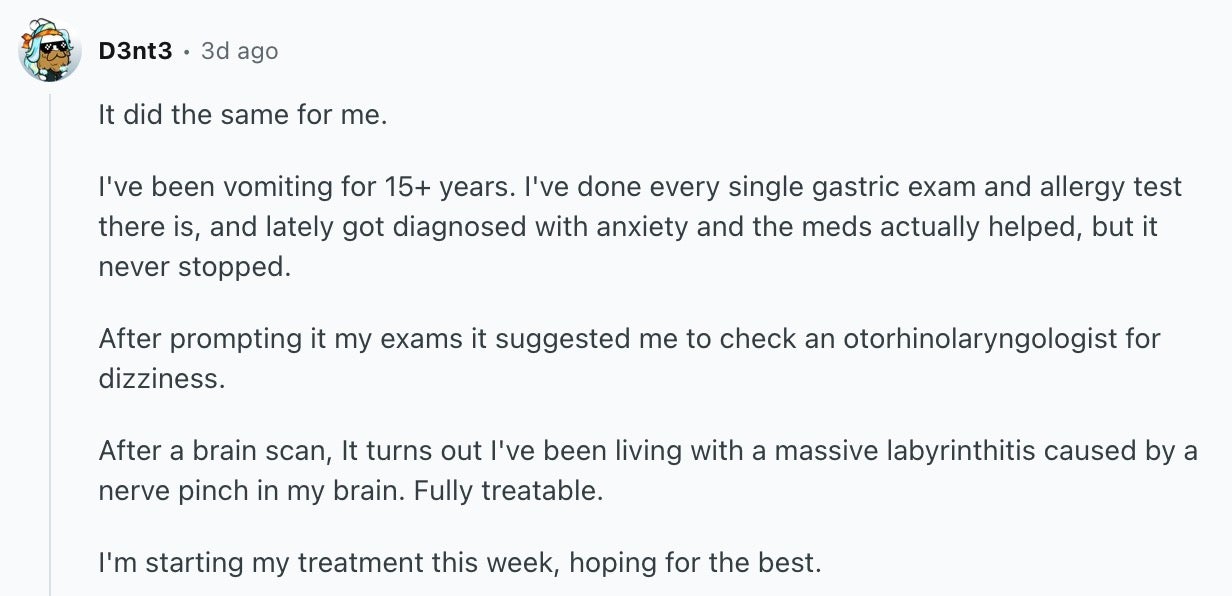

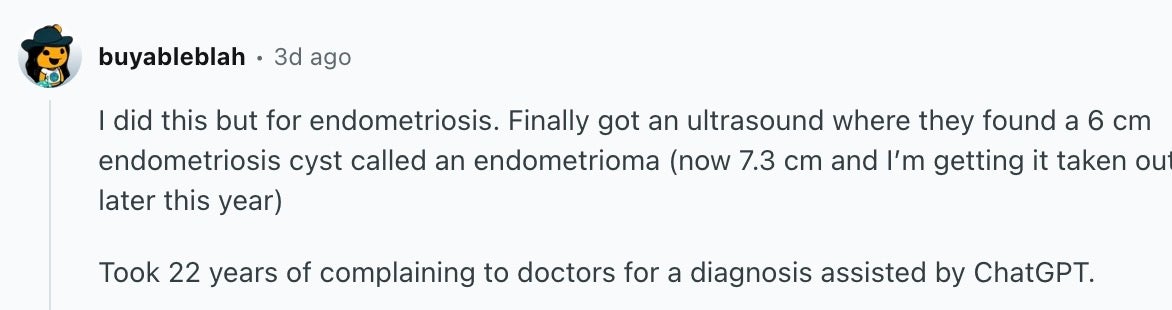

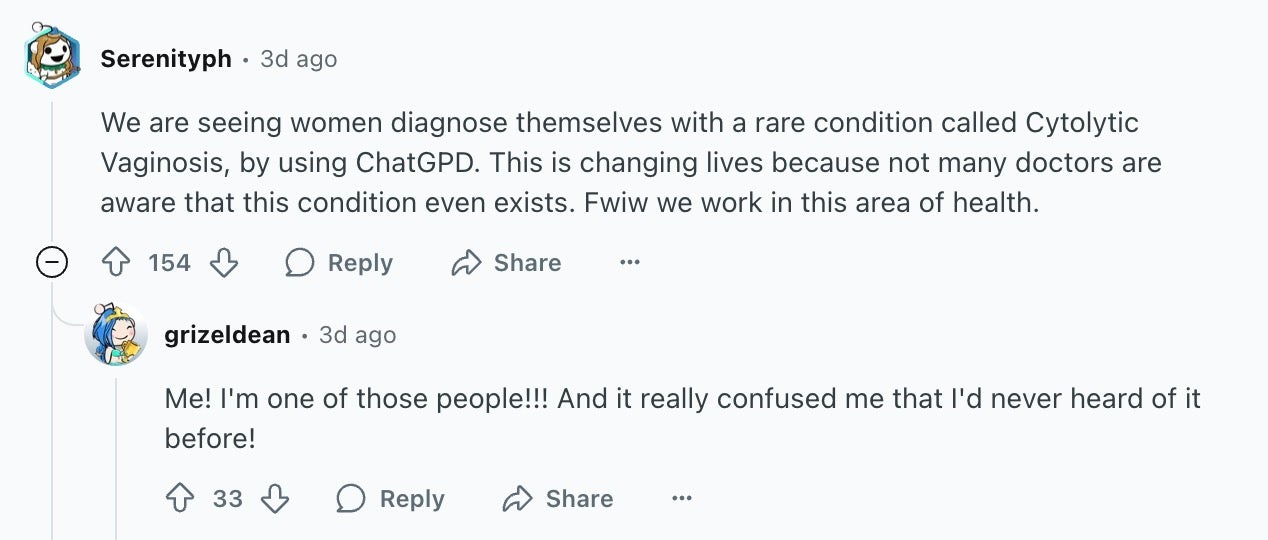

Still, the story opened the floodgates for an important discussion. While tech bros and business gurus have been busy praising AI for its crappy art and subpar writing, not to mention using it to flood the internet with incorrect information as jobs get slashed left and right, plenty of average people have had experiences that echo this redditor’s.

Why is it taking ChatGPT to catch these medical conditions?

There are any number of reasons why an AI system might end up catching a medical condition that doctors have missed, many of which are quite understandable. More complicated or obscure conditions might require drawing conclusions from a wide swath of information, or even from traditionally unrelated ailments. Particularly in the U.S., the variety of testing that may be required to catch everything can mean jumping through a lot of hoops and spending a lot of money.

In the case of the original poster, a homozygous MTHFR mutation doesn’t always cause symptoms. And many of those who have it don’t require supplementation. The condition is so poorly understood by doctors that there’s an entire subreddit with over 25,000 members dedicated to people figuring things out on their own.

Human error likely accounts for much of the reasoning behind ChatGPT figuring things out when doctors have failed to do so, although it’s more complicated than professionals just not getting things right. A doctor may not realize a symptom is pertinent when weighing options, but a patient may not, either, and leave it unreported. It’s a lot easier to take your time considering all the things that ail you when sitting in front of a website that’s always available and won’t judge you than when you’re sitting in a doctor’s office trying to figure out how to be taken seriously.

But folks on reddit pointed out a much more frustrating, and almost insidious, reason ChatGPT might help them where doctors have not.

Is ChatGPT reliable for a medical diagnosis?

As several people pointed out in the comments of the reddit post, ChatGPT shouldn’t be relied on fully for any diagnosis. If we’ve learned anything from the years of self-diagnosing via WebMD, a lot of symptoms overlap, not everything is relevant, and it’s easy to jump to the worst possible conclusion. A human doctor has the experience to make an assessment that AI, at least at this point in time, cannot.

But ChatGPT can point people in the right direction, especially if they’ve ruled out other possibilities, struggled to get answers, and have medical documents to feed into the machine for further examination.

As u/KensingtonSmith wrote, “AI suggests what could be the problem, like MTHFR, but its up to a doctor to organise the blood test.”
