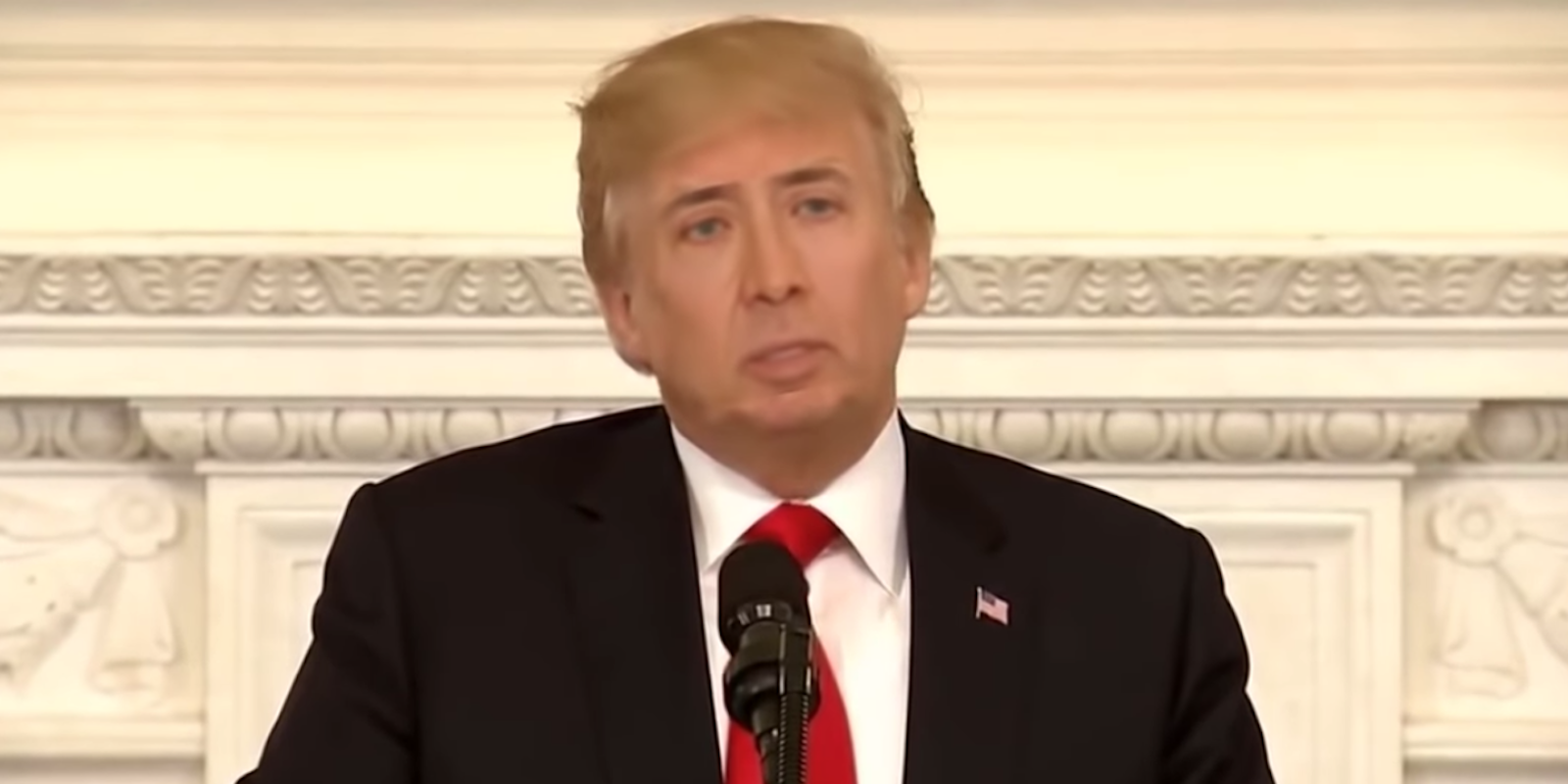

Many of the deepfakes on the internet today—videos that use AI to put someone’s head on another person’s body—are easily identifiable.

They show Mr. Bean as Donald Trump, Steve Buscemi as Jennifer Lawrence, or Nicolas Cage as just about anybody. But these are only the deepfakes built from celebrity images. Deepfakes of non-famous people also exist, and they are much harder to identify. Everyone wants to know how to stop the spread of deepfakes, or at least how to develop techniques that flag video and audio clips as fake, but there are no magic solutions yet.

It’s especially hard to tell whether a file is fake once it has been shared on a social site like Twitter or YouTube.

“There’s a lot of image and video authentication techniques that exist but one thing at which they all fail is at social media,” Matthew Stamm, an assistant professor at Drexel University, said at SXSW on Tuesday.

These techniques look for “really minute digital signatures” embedded in video files, Stamm said. But when a video is shared on social media, it is shrunk and compressed, and these processes are “forensically destructive and wipe out tons of forensic traces,” he added.

If a video is “pristine,” experts can extract a lot of information from it that helps determine its authenticity, Stamm said, but it’s much harder to extract that information from videos found on social sites.

Stamm, who develops algorithms to figure out if images and videos are fake and how they are edited, spoke on a SXSW panel called “Easy to Fool? Journalism in the Age of Deepfakes,” which covered the recent spread of deepfakes as well as other synthetic information online. Stamm was joined by Kelly McBride, vice president at Poynter, and Paul Cheung, a director at the Knight Foundation. The panel was moderated by Jeremy Gilbert of the Washington Post.

Stamm stressed that there is currently no “silver bullet” to combat deepfakes, while Cheung said that any technique for detecting fake audio and video will quickly become outdated as the technology advances.

Last year, Cheung said, researchers noticed that the people in deepfake videos didn’t blink. But that changed as the technology improved. “The minute…we thought we had a mechanism for detecting a deepfake,” Cheung said, “someone had out-faked the solution.”

“We’re constantly being challenged and we constantly have to figure out new solutions,” he said.

McBride thinks that news organizations need to work together to tackle the problem of doctored videos and audio clips. Big news organizations like the Associated Press and the Washington Post have the resources to research synthetic media like deepfakes, while small local newspapers do not, she said.

“Journalism itself is really going to have to ask some existential questions about creating a collective research organization,” said McBride, “that will eventually help with this problem of truth in democracy.”

Stamm, meanwhile, said that researchers are “very rapidly coming to the point to run analysis on videos and images” in ways that can help news agencies. Stamm is also starting to look into synthetic audio, which can be detected through phase shifts in the audio file, among other techniques.

But even the best fact-checking and detection techniques are irrelevant if people believe a fake video is real and start spreading it on social media.
