This story was originally published by Reveal from The Center for Investigative Reporting, a nonprofit news organization based in the San Francisco Bay Area. Learn more at revealnews.org and subscribe to the Reveal podcast, produced with PRX, at revealnews.org/podcast.

Mary Canty Merrill was fed up. So she turned to Facebook.

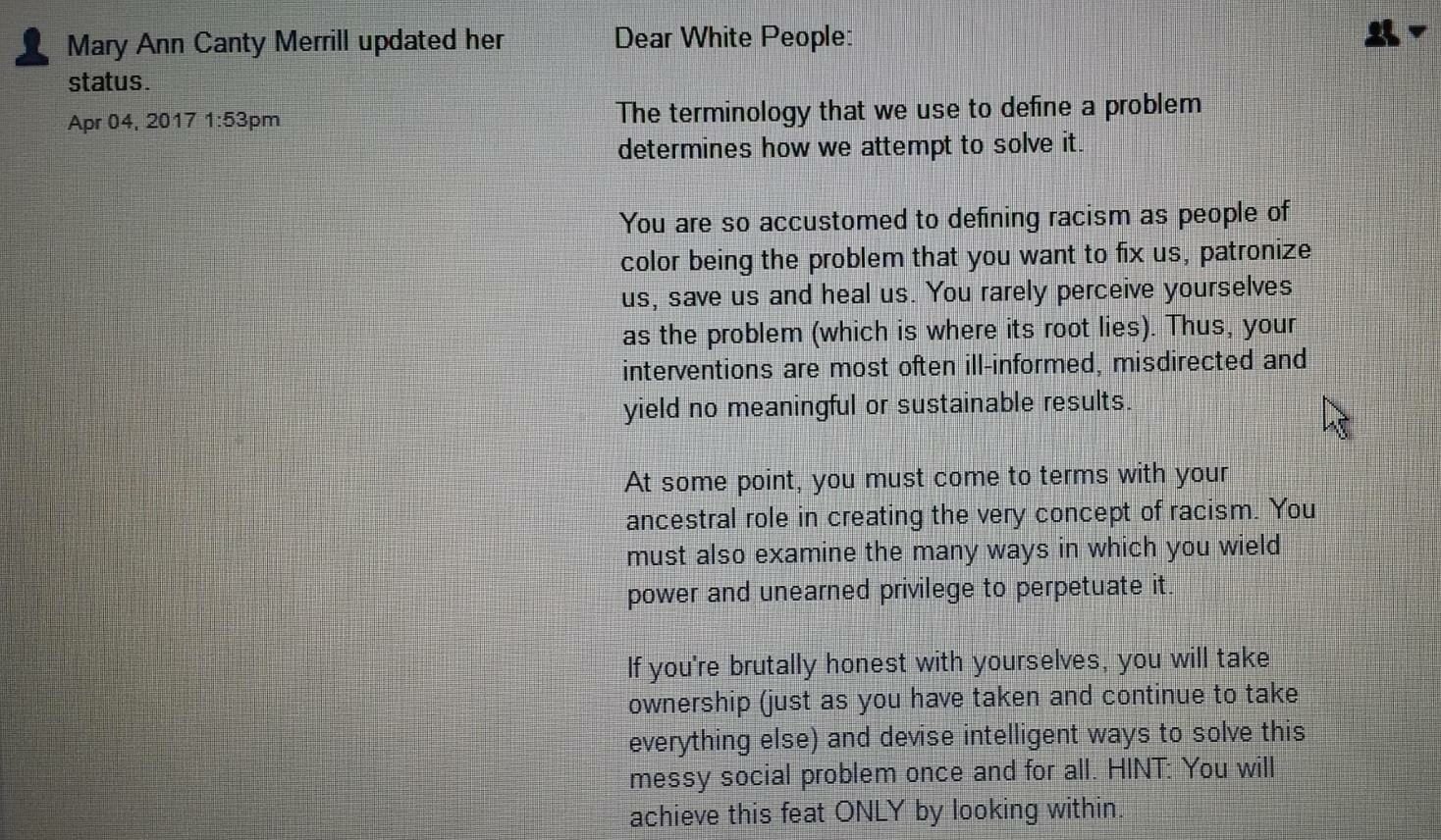

“Dear White People: The terminology we use to define a problem determines how we attempt to solve it,” she wrote in an April 4 Facebook post.

“You are so accustomed to defining racism as people of color being the problem that you want to fix us, patronize us, save us and heal us. You rarely perceive yourselves as the problem (which is where its root lies). Thus, your interventions are most often ill-informed, misdirected and yield no meaningful or sustainable results.”

She logged in the next day to find her post removed and profile suspended for a week. A number of her older posts, which also used the “Dear white people” formulation, had been similarly erased.

Perhaps most Facebook users would’ve simply waited out the weeklong ban. But Merrill, a psychologist who advises corporations on how to avoid racial bias, wondered whether she was targeted because she is African American. So she asked some white friends to conduct an experiment: They copied what she had written word for word and had others report it as inappropriate content. In most cases, Facebook allowed the content to remain active. None of their profiles were suspended.

At a time when Facebook is under the microscope for failing to stop harassment and the spread of fake news, it also faces another problem: The social media giant’s reporting policies punish minority users in a variety of ways.

Recent leaks of Facebook’s internal moderation guidelines published by the Guardian and ProPublica revealed that the company’s policies around threats and hate speech can be both controversial and confusing. For instance, instructions on how “to snap a bitch’s neck” are allowed on the platform, whereas “#stab and become the fear of the Zionist” is not.

The implementation of these rules is frustratingly inconsistent. The experiences of Merrill and others who spoke with Reveal from The Center for Investigative Reporting suggest that even within Facebook’s policies, moderators punish minority users for posting content that they deem appropriate when posted by white users.

That can be a deterrent for those just beginning to speak their minds on social media, Reveal found. For veteran activists, it can harm their ability to get the word out or use the platform for other purposes, including their livelihoods. Facebook users whose profiles are suspended can appeal the decision, but no such option exists for individual posts.

Social networks rely on users flagging inappropriate content for the company’s moderators to review. The true scale of moderator bias is almost impossible to measure from the outside; while Facebook has developed a complex system tracking its users’ every click, the company doesn’t publicly release data about its moderation system.

When shown some of the decisions highlighted in this investigation, a Facebook spokeswoman admitted moderators had made some mistakes. She acknowledged Merrill’s posts had been incorrectly deleted but said they had been restored.

However, months later, the April post still could not be found on Merrill’s Facebook wall. Merrill was able to see it when she scrolled through her page, but anyone else who clicked on a link to the post received this message: “This content isn’t available right now.”

Merrill said she’s frustrated that Facebook ignored her initial complaints, taking action only when contacted by the media.

“I think what they’re trying to do now is cover their ass,” she said. “They need to be reaching out to me with an apology.”

Doing nothing to stop hateful messages

Facebook’s censorship has cropped up in confounding ways, ranging from posts of innocuous photos to instances in which people used the platform to expose racist attacks aimed at them by others.

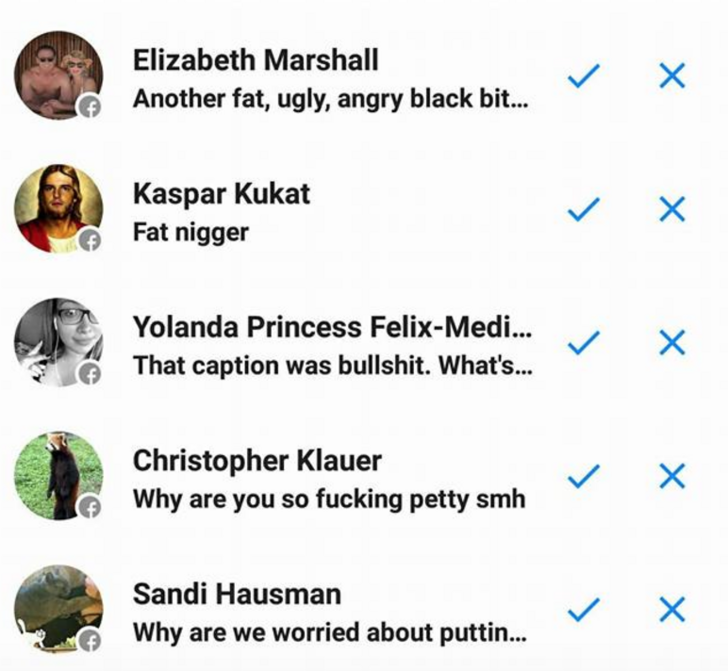

Sherronda Brown had posts removed and her profile suspended in May for posting screenshots of harassment she and other black women received on Facebook. One screenshot showed her inbox, full of private messages from other users calling her “another fat, ugly, angry black bitch” or a “fat nigger.” But she said Facebook moderators declined to take decisive action against the harassers when those messages were first reported.

Ijeoma Oluo, an editor with the news website the Establishment, had a tweet go viral about feeling uncomfortable when she was the only black person at a small-town Cracker Barrel restaurant. Soon, Oluo’s Facebook account was deluged with racist messages.

“Fucking get rammed over by trump train bitch. If you did that in Texas or Indiana, your ass wouldn’t walk normal. Racist bitch!” read one message.

Oluo wasn’t able to easily report the hateful comments on her smartphone, but she took screenshots and posted images of them to her Facebook wall.

“And finally, facebook decided to take action,” she wrote in a blog post. “What did they do? Did they suspend any of the people who threatened me? No. … They suspended me for three days for posting screenshots of the abuse they have refused to do anything about.”

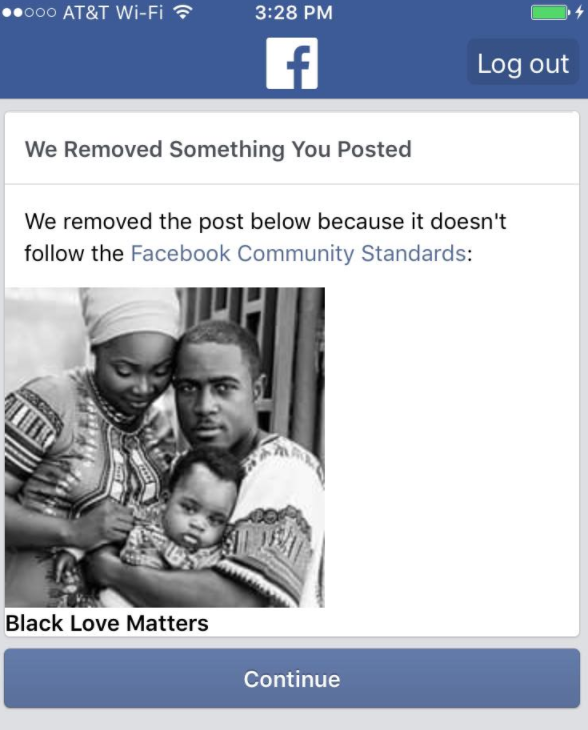

Last year, a page called Black Love Matters, which highlighted black families, posted a picture of a couple cradling their child. The image was removed from Facebook and the page’s administrators received a message saying the picture violated Facebook’s “community standards.” Facebook also unpublished the entire Black Love Matters page.

The page had received temporary suspensions in the past when similar content was reported. This time, however, the removal was permanent, which forced the page’s administrators to start from scratch with a new page, Black Couple Revolution.

Efforts to use the platform to state opinions or support others – types of posts that seem ubiquitous on Facebook – also were targets for suspension.

Seeing a friend’s post about Kendall Jenner calling the late rapper Tupac Shakur her “spirit animal,” Jenina Pellegren commented in late May that Jenner was “just mayonnaise on a cracker.” Someone reported the comment, and Pellegren got a three-day ban. In protest, she updated her profile photo to include “Mayo on a cracker: Starring Kendall Jenner.” She was reported again and got a seven-day ban.

Pellegren said she asked white friends to post the same photo as their profile pictures and have others report them. In those cases, the images were found not in violation and allowed to stay on her friends’ pages.

Miles Joyner’s account was suspended in May for updating his profile picture to include the phrase “Stand with Sophie,” part of a campaign supporting a transgender cartoonist who was being harassed by online trolls. Joyner also was notified by Facebook that his post violated “community standards.”

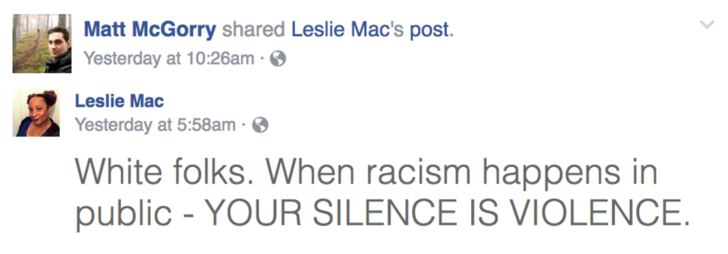

Leslie Mac, a prominent activist who co-founded the Safety Pin Box subscription service to support black liberation, was suspended in December for posting, “White folks. When racism happens in public – YOUR SILENCE IS VIOLENCE.”

Mac’s account was reinstated after the story of her suspension began circulating among media outlets.

Most of the suspensions were not permanent. Facebook’s bar for deleting a profile is high – think Islamic State members or child pornographers. But the constant threat of a suspension may lead people to think twice about posting anything controversial or to take precautions about how widely their content is shared.

Leslie Mac, an activist who co-founded the Safety Pin Box subscription service to support black liberation, had her Facebook account suspended for 24 hours after this post was shared by “Orange Is the New Black” actor Matt McGorry. Credit: Leslie Mac/Facebook.com

Mac’s Facebook account was issued a 24-hour suspension after her post was shared by “Orange Is the New Black” actor Matt McGorry, who is white. Following the suspension, Mac reached out to McGorry, asking him not to share her posts anymore.

“This shuts down speech most from casual activists who don’t do this for their job, people whose commitment levels aren’t as high, so they’re more easily dissuaded by harassment,” Mac said. “It’s natural for people to remove themselves from uncomfortable situations.”

Not being able to use Facebook for a few days is merely an annoyance for most people, but it can have real consequences for anyone whose personal account is entangled with their professional career.

Pellegren’s husband is a disabled veteran, and her digital marketing business is the primary source of income for the couple and their six kids. Her Facebook profile is one of the main ways she attracts new clients.

“Every suspension is me not showing up in my followers’ newsfeeds,” she said. “It’s me not being able to engage with commentary on threads from followers and fans. My brand is my bread and butter.”

Pellegren has received multiple suspensions. During each, she was able to see other people’s content on Facebook but not post her own. Following each suspension, Pellegren said she noticed a dramatic dip in engagement on her posts. She considered paying Facebook to boost her posts but ultimately decided she couldn’t afford the expense.

Moderators often miss context

Facebook’s official policy is to treat content in a way that’s colorblind to the person posting it. A white person writing the N-word is treated exactly the same as if a black person had written it, even if the society in which they live does make that distinction.

Ignoring these demographic characteristics can lead to misunderstandings. Two months ago, Jessica May was disturbed by an image of a Native American dreamcatcher adorned with a Confederate flag. She posted it with the caption: “A friend spotted this at a flea market in Texas lmao. Why. Whhhhhhhhhhhhhhhhhy.”

Another user reported the picture, and May received a seven-day suspension. Images of that flag, on their own, violate the site’s hate speech policy, a Facebook representative said. Critiques such as May’s should be allowed, but Facebook’s far-flung moderation force often misreads users’ intent.

Yet the Confederate flag is ubiquitous across the platform, with hundreds of pages and groups digitally hoisting it daily.

For all its omnipresence, it’s easy to forget Facebook is relatively new. The company, which launched in 2004, often is blazing new ground when it comes to thorny questions of platform governance.

“I don’t think anyone knows how to run a business that can govern the lives of billions of people around the world while also making money,” said J. Nathan Matias, a researcher at the MIT Media Lab studying social media harassment. “We’re asking companies to observe the intimate personal interactions of billions of people and then make wise interventions. That’s an incredibly difficult challenge to take on.”

Facebook’s moderation system relies on a mix of in-house employees and outsourced contractors. These moderators evaluate each piece of reported content to determine whether it should be removed or its author suspended. But because the moderators often live and work abroad, they don’t always have a strong grasp of the complexities of language, culture and racial politics of other countries.

Facebook founder Mark Zuckerberg recently pledged to hire 3,000 moderators, adding to the 4,500 already employed by the company. Facebook officials declined to speak on the record for this story but provided a written statement.

“We’re always thinking about how we can do better for our community and exploring ways that new technologies can help us make sure Facebook is a safe environment,” the company said in an email.

As that hiring push happens, some suggest Facebook should use the opportunity to increase the diversity of the team setting moderation policies. Facebook doesn’t release figures about the racial and gender makeup of its specific policy teams, but the company’s 2016 diversity report shows that its senior leadership is 3 percent black, 3 percent Hispanic and 27 percent female.

“If there were systematic changes in hiring and retention of leaders at all levels, that would likely change who’s being targeted disproportionately,” said Jamia Wilson, executive director of the nonprofit Women, Action and the Media.

Shireen Mitchell, who founded the nonprofit Digital Sisters to advocate for diversity in the tech industry, sees Facebook’s moderation policy being used as a tool for harassment to push out women of color, especially if they’re advocating a social or political agenda.

“A woman of color who has a following is someone to target,” Mitchell said. “A woman of color who has a following and an opinion is someone to target. A woman of color who has a following and has a campaign that they’re working on is someone to target.”

For Pellegren, the issue comes down to respect.

“If Facebook wants to collect my data, if it wants to know about me, about my demographic, … then respect me as a consumer. Respect me as someone who is part of this exchange.”
