In a blog post Thursday, Facebook CEO Mark Zuckerberg outlined his company’s plan to tackle the biggest issues the company faces in its battle against viral misinformation and prohibited content. The second in an end-of-year series, the post sketches the company’s blueprint for “Content Governance and Enforcement.”

Throughout the post, Zuckerberg emphasized the company’s focus on “proactive enforcement” of its policies and its reliance on carefully trained, artificially intelligent systems to detect and even take down problematic content before it’s shared. By the end of 2019, the company claims its AI should be sophisticated enough to identify “the vast majority” of problematic content such as fake accounts and instances of self-harm or hate speech.

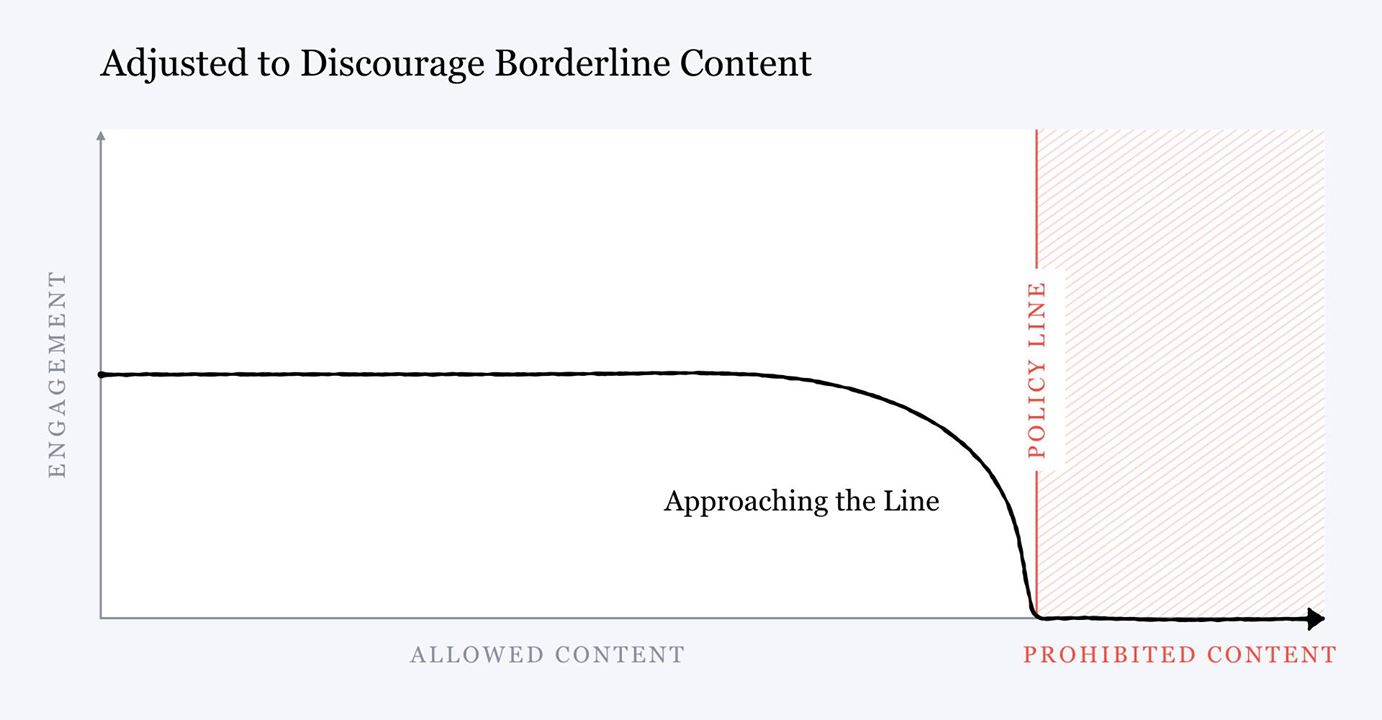

Facebook also plans to target what it refers to as “borderline content,” or content provocative enough to toe the line of what’s prohibited without necessarily warranting removal. Zuckerberg explained that the closer content falls to this policy line, the more engagement it garners, which incentivizes polarizing and sensationalist content. To counter this trend, Facebook is calibrating its AI systems to catch borderline content before it goes viral and limit its distribution.

With this new goal in mind, Facebook particularly hopes to target clickbait and posts that spread misinformation. Its approach isn’t limited to news, either. Photos that skate dangerously close to nudity and posts with offensive language just short of hate speech were flagged under these system changes and subsequently saw reduced distribution.

Zuckerberg emphasized that Facebook’s ongoing struggle with borderline content isn’t solved by simply moving the threshold of what’s considered acceptable. “This engagement pattern seems to exist no matter where we draw the lines, so we need to change this incentive and not just remove content,” he wrote.

Additionally, Zuckerberg announced the development of an independent group to oversee the appeals process for content that users argue was wrongly flagged. The company is only beginning to decide the makeup and criteria of this body, but it plans to begin gathering international feedback in the first half of 2019 and to establish the group by the end of next year.

“Over time, I believe this body will play an important role in our overall governance,” Zuckerberg said. “Just as our board of directors is accountable to our shareholders, this body would be focused only on our community.”

The CEO’s post comes a day after a scathing New York Times report detailing Facebook’s attempts to deflect its negative image following recent scandals, most notably Russian interference during the 2016 election and the exposure of 87 million users’ information to Cambridge Analytica. According to the Times, Facebook launched a campaign to shift the public’s anger away from itself and toward Apple and Google by financing critical articles about the rival tech giants.

H/T the Verge