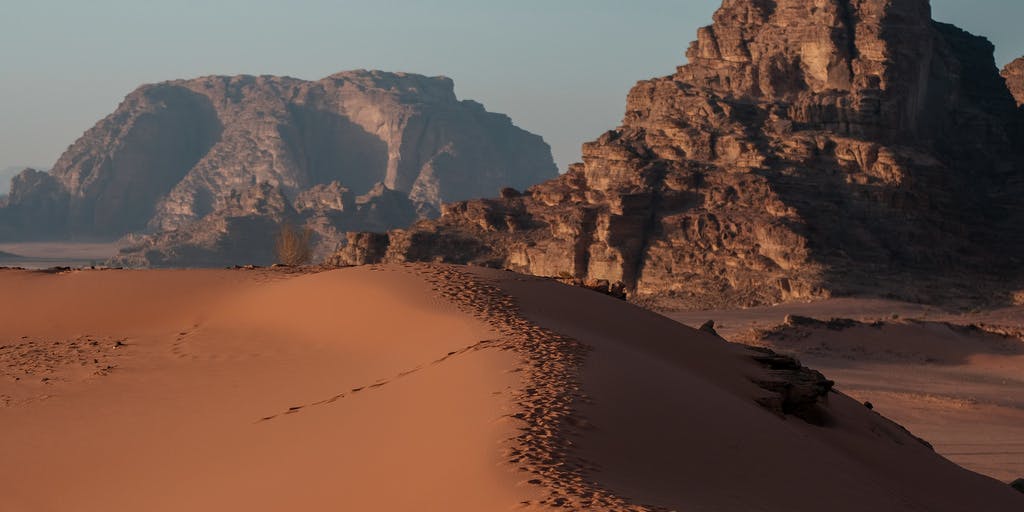

London’s police force hopes to use artificial intelligence in the fight against child pornography. As it stands, though, its system needs a little more work. The image recognition system the London Metropolitan Police are using to spot photos of child abuse can’t effectively distinguish nude photos from pictures of the desert.

Right now, human staffers have to look through indecent photos and manually grade the images; the grades correspond to different sentencing levels. That sort of task is emotionally and psychologically taxing, making it just the type of job that AI could potentially handle better than humans. The London police used the fledgling system on more than 53,000 devices last year in search of incriminating evidence.

“For some reason, lots of people have screen-savers of deserts and it picks it up thinking it is skin colour,” Mark Stokes, head of digital and electronics forensics for London’s Metropolitan Police, told The Telegraph. The department already uses software to successfully identify guns, money, and drugs in images without the aid of human eyes.

Stokes’ team has another issue to deal with besides image recognition: photo storage. The department stores its sensitive images at a London data center for now, but it hopes to move the photos to a cloud-based provider. The benefits of this move include access to Silicon Valley companies’ tremendous processing power for analytics, as well as an increase in the number of photos law enforcement can store. However, while police can legally store these types of images, cloud providers cannot. Plus, the thought of a data breach involving this imagery is horrendous. Stokes’ forensics team has a plan in place to curb the risk and is optimistic it will reach an agreement with cloud providers, according to The Telegraph.

While using AI to spot porn is proving complicated, the team hopes this particular software will be ready for action in two to three years.

H/T Gizmodo