After it was reported last weekend that Facebook had conducted an experiment on hundreds of thousands of users by manipulating the emotional content of their feeds without their consent, people felt… sad. And mad, and a bunch of other feelings you would be asked to identify on flashcards if you went to child sociopath camp.

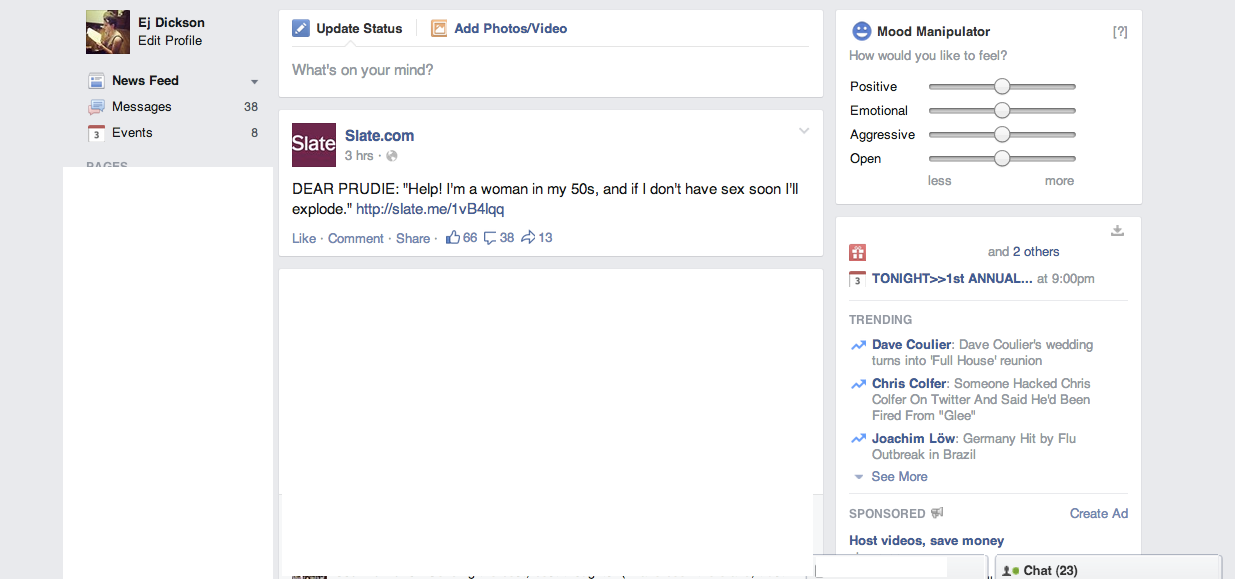

But Lauren McCarthy, a programmer and researcher at New York University’s Interactive Telecommunications Program, didn’t feel sad or mad about Facebook’s betrayal. She felt inspired. So she created the Facebook Mood Manipulator, a browser extension that lets you recreate the Facebook experiment on your own feed by choosing to see either positive or negative posts. Except this time, it’s with your consent.

Just like the Facebook experiment, McCarthy’s Facebook Mood Manipulator uses word recognition software to identify whether words in your friends’ posts skew “positive” or “negative.” The idea is that a feed that predominantly features positive posts will make you happier, while feeds that skew negative will make you sad.
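To make the word-recognition idea concrete, here is a minimal sketch of how a post might be tagged by counting words from two lists. Everything in it is an assumption for illustration: the word lists are tiny and made up, and the names `classify_post` and `filter_feed` are hypothetical, not taken from McCarthy’s extension or Facebook’s software. It also simply drops non-matching posts, which is a simplification of whatever either tool actually does.

```python
import re

# Hypothetical, tiny word lists for illustration only; real tools
# rely on far larger dictionaries.
POSITIVE_WORDS = {"happy", "love", "great", "awesome", "excited"}
NEGATIVE_WORDS = {"sad", "hate", "awful", "angry", "terrible"}


def classify_post(text):
    """Label a post by counting its positive and negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    positive = sum(word in POSITIVE_WORDS for word in words)
    negative = sum(word in NEGATIVE_WORDS for word in words)
    if positive > negative:
        return "positive"
    if negative > positive:
        return "negative"
    return "neutral"


def filter_feed(posts, mood):
    """Keep only the posts whose label matches the requested mood."""
    return [post for post in posts if classify_post(post) == mood]


# Made-up sample posts, not real feed content.
feed = [
    "I love this great new song",
    "Traffic was awful and I am angry",
    "Heading to the store later",
]
positive_feed = filter_feed(feed, "positive")
negative_feed = filter_feed(feed, "negative")
```

A short status update may contain only one or two listed words, or none at all, so labels produced by this kind of counting can be pretty noisy.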

As I was feeling pretty neutral today myself—there were no options in the Facebook study or McCarthy’s extension for “hot, sweaty, and kinda having to pee a little bit”—I decided I’d be a good test subject for the Facebook Mood Manipulator. So I downloaded the software to see whether my Facebook News Feed would make me feel Happy Face Emotion Flashcard or Sad Face Emotion Flashcard.

First, I decided to make my News Feed look more “positive.” The first posts that came up were a BuzzFeed headline for a video of a little boy comforting his friend on her first day of school, and an xoJane article on how cotton beauty swabs were going to change my life. Apparently, to Facebook, “positive” content translated into glurge listicles and product placement for Q-tips. I was not impressed.

I then pushed my slider all the way to the left, indicating I wanted “negative” content in my News Feed. Although I expected news articles about global catastrophes and unemployment rates skyrocketing in developing countries, I instead got the next best thing: A Vulture post about Justin Bieber. That seemed about right.

Finally, I pushed my slider all the way to the right, controlling for more “aggressive” and “emotional” content. Considering I have more than a few Facebook friends whose personalities fall into both of those categories (I was a theater kid in high school), I had high hopes for this filter.

I did see a bunch of outraged posts linking to articles about the Supreme Court Hobby Lobby ruling, and a pretty poignant post from a college classmate I hadn’t spoken to in ages about her struggles with depression. On the other hand, I also saw a video of a Russian lady snorkeling and making friends with an eel, which I would say made me feel neither aggressive nor emotional. (It did make me say “Awwwwww little babies they r frondzzzzzz” really loudly at my office though, which might have made my coworkers feel both of those emotions toward me.)

Ultimately, I didn’t feel like the Facebook Mood Manipulator actually manipulated my mood that much. If anything, it made me feel annoyed, because I had to keep tweaking the settings and scrolling through my feed instead of taking a lunch break and peeing. But McCarthy says that’s kind of the point of her project, as Facebook’s experiment didn’t significantly change the emotional tone of users’ feeds either.

“When you manipulate things, it’s a little subtle what’s changing. There’s not like a positive mode or a negative mode,” she told the Atlantic. That’s in part because the software that both she and Facebook used wasn’t actually designed for brief social media status updates. It is geared toward identifying “positive” or “negative” words in speeches or longer blocks of text.

The fact that tweaking the emotional tenor of your Facebook feed doesn’t yield any obvious changes doesn’t mean, of course, that we shouldn’t get pissed off at Facebook for playing us without our knowledge. “Aw yes, we are all freaked about the ethics of the Facebook study,” McCarthy writes on her website. The question that the Facebook Mood Manipulator attempts to answer is, “then what? What implications does this finding have for what we might do with our technologies? What would you do with an interface to your emotions?”

H/T The Atlantic | Photo by Michael Coughlan/Flickr (CC BY SA 2.0)