In 1897, French social scientist and philosopher Émile Durkheim published Suicide, the first book to explore self-inflicted death in depth. Durkheim’s research helped people better understand suicide, though it couldn’t develop an antidote to the impulse. It remains a wrenching social problem.

And it’s a problem made obvious online. In many ways, the Internet has made suicide more visible. People document depression on the social Web. Cyberbullying can push people to kill themselves. We bring our mourning online with us, with Facebook memorial pages full of messages lamenting friends who have committed suicide. Organizations like the American Foundation for Suicide Prevention offer helpful online resources and fund research. And people who are considering suicide use the Internet as a forum to work through their thoughts (or encourage them).

There are many examples of times people have used social media as a place to call for help. Sometimes people who see these messages as signs are able to intervene. But more often, erratic flurries of Facebook updates or despairing tweets are not recognized in time.

Facebook has a reporting tool for suicidal updates, and it urges users who see them to call a suicide prevention hotline, providing a list of numbers and organizations. But there are so many people on Facebook that the company can’t monitor every post, so the onus is on users to report.

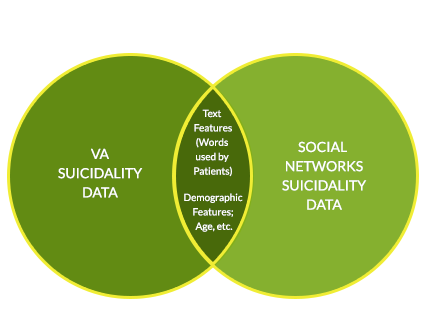

That’s where the Durkheim Project comes in. Suicide is an especially pressing problem among U.S. veterans of Iraq and Afghanistan: A report from the U.S. Department of Veterans Affairs released earlier this year revealed that suicide rates among male veterans between 18 and 24 have tripled in recent years. It’s a full-blown crisis, which is why DARPA is funding research relating to veterans contemplating suicide. The Durkheim Project, led by Chris Poulin, a predictive analytics specialist, is using big data to research ways to pinpoint when veterans are suicidal based on their social media activity.

The Durkheim Project built a predictive model to assess whether veteran posts contain red flags, building a database from material gathered from veteran volunteers who have agreed to be part of the study.

It can now identify when someone is making posts that could indicate they’re suicidal with 65 percent accuracy. That might not sound very high, but this suggests that with more tweaking the algorithm could increase that percentage and the certainty of warning signs.

The project is getting support from social media partners. “Our initial public launch supported Facebook and Twitter,” Poulin said. “However, we have since developed (though not released yet), support for LinkedIn and Android Phone Text messages.”

Right now, the Durkheim Project is still in research mode. There’s no intervention happening yet, but eventually the team wants to refine the predictive algorithm enough to alert clinicians to problems. Clinicians could then assess individuals in real time, using their medical records and information about their pasts to make a more thorough assessment. For instance, if a veteran had previous bouts with depression or prescriptions for drugs known to cause suicidal thoughts, the clinicians would be able to see this and augment their assessment. The team created a HIPAA-compliant demo to show what it’d look like when clinicians signed on to view the at-risk veterans’ information. Patients would get assigned an overall risk rating, and it’d be easy to pull up all of their information to make an assessment just seconds after they posted something alarming.

Poulin hopes to open up the research to involve civilians, but there are several roadblocks to overcome before a suicide prevention algorithm could realistically be deployed in any effective way in the near future. First, there are major privacy concerns here. For veterans, it might be possible to offer a wide-scale opt-in when vets come back from their deployment, or even when they enlist. But, of course, this would involve basically giving the researchers access to all social media posts, something many might not want to do. And within the general population, granting a government-funded research project access to Facebook posts and tweets doesn’t seem like a particularly appealing move, even if it is for our own safety. So even if Poulin and the Durkheim Project develop the algorithm to the point where it’s extremely accurate, the group of people willing to cede their privacy to use it may be too small to really make a difference.

Then again, for veterans currently being treated for mental health issues, signing up could be so obviously beneficial that the privacy issue would be secondary.

Poulin told us that the project lost its government funding during the government sequester last summer, so the team had to put the third phase on hold. They’re currently applying for grants to continue the work.

Photo via gruntzooki/Flickr (CC BY-SA 2.0)