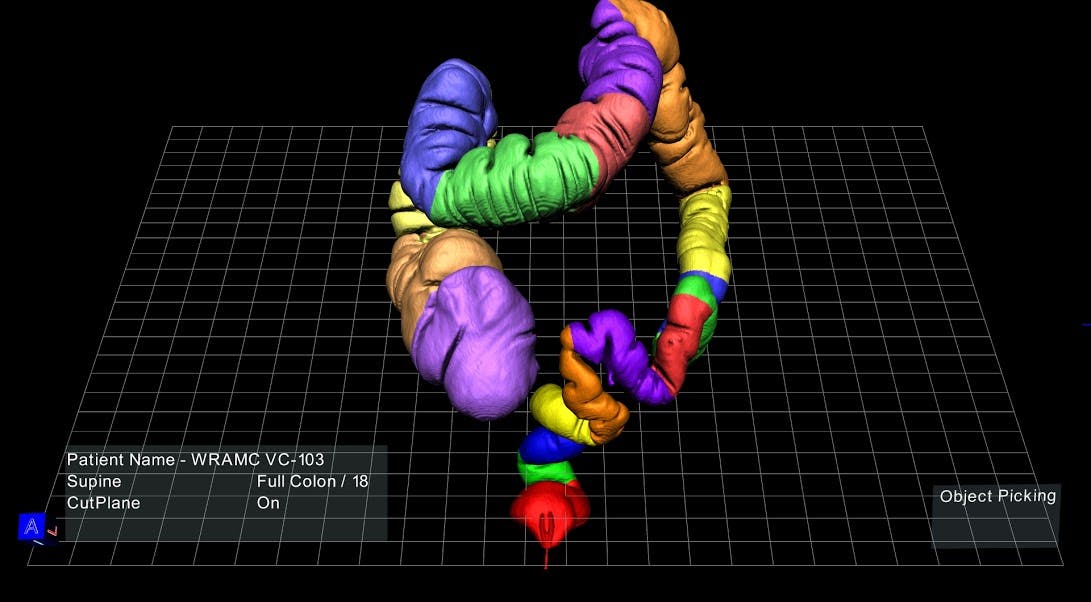

If you or I could look at a 3D model of a patient’s colon, divided into neatly color-coded segments and floating on a screen in front of us, we probably wouldn’t marvel at the anatomical intricacies of the large intestine. We’d more likely be drawn to the novelty of this immersive, unfamiliar technology, even if it required wearing plastic glasses similar to those from 3D movies in the ’90s, sans red and blue cellophane.

But when doctors sit in front of this screen, they see an answer to a problem that has plagued their profession ever since technologies like ultrasound, computed tomography (CT), and magnetic resonance imaging (MRI) first offered a peek inside patients’ heads, hearts, and bowels: the resulting anatomical data could only be displayed on a two-dimensional plane.

With this virtual modeling platform, medical technology startup EchoPixel hopes to eliminate the disconnect of having to “solve a 3D problem using 2D images,” as the company’s CTO and founder, Sergio Aguirre, put it.

The company, which launched four years ago and has been quietly testing the product at Stanford University, the Cleveland Clinic, and the University of California, San Francisco (UCSF), is essentially bringing virtual reality to the medical profession.

In March, the platform received U.S. Food and Drug Administration 510(k) clearance, which allows healthcare providers nationwide to use the technology for diagnostics and surgical planning. A hospital or medical practice can buy the workstation for $75,000 or subscribe to it for $20,000 a year.


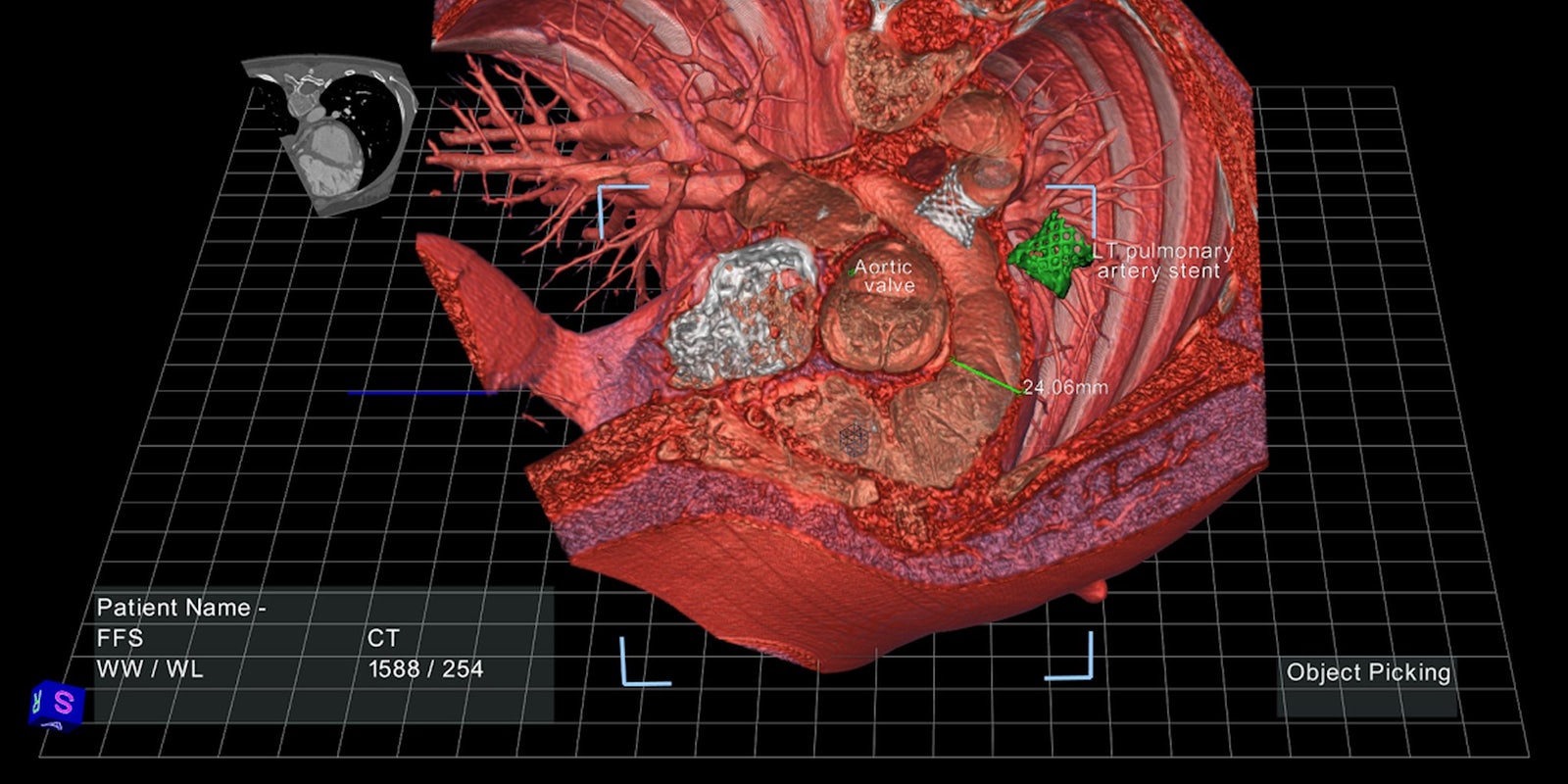

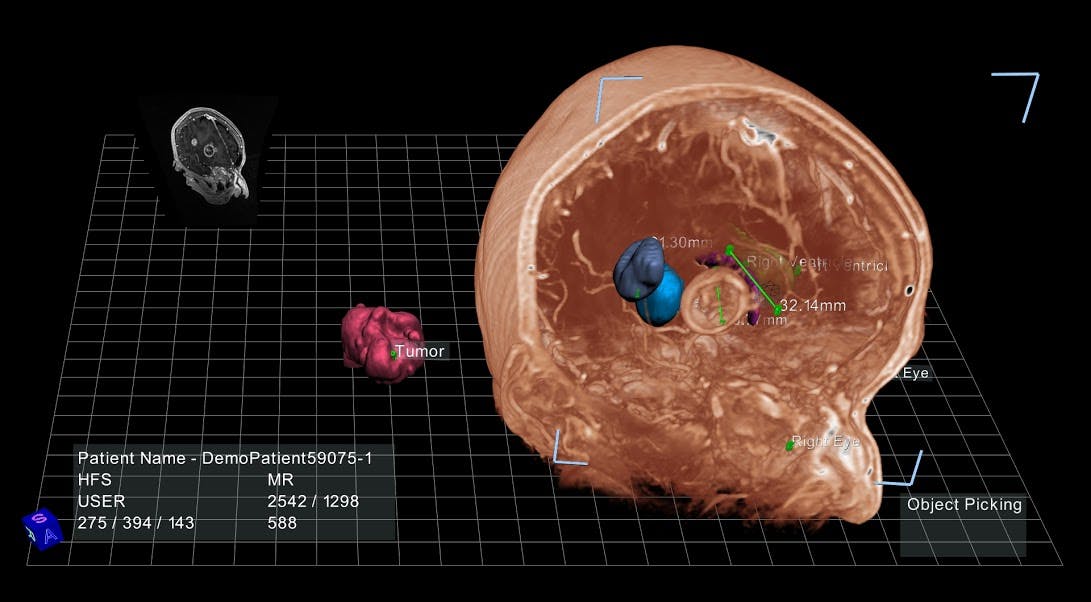

The software can transform data from ultrasound, CT, and MRI scans into three-dimensional anatomical models projected onto a computer screen, with which doctors can interact using a stylus. A doctor can, for example, click on one brightly colored segment of the virtual colon to scan the interior tissue for soft, spongy polyps, or point and click to extract an aneurysm from deep inside a virtual brain.

Giving physicians the means to examine that data in 3D frees them to focus on diagnosis rather than data synthesis, Aguirre said. “What we found is that doctors get better results, and they do them faster as well.”

Take that colorful model of the colon: It was created for an experimental virtual colonoscopy protocol in which Judy Yee, MD, an associate professor of radiology at UCSF, used the 3D model, quickly synthesized from hundreds of CT images, to scan a patient’s intestinal tract for lesions. Dr. Yee was able to cut the procedure time from an average of 30 to 40 minutes to around five to eight minutes, while two junior radiologists in her lab completed it in 10 to 15 minutes, Aguirre explained.

Doctors can also use these virtual models to create surgical plans without excessive exploratory slicing and dicing. Such plans are still essentially drawn up on a sheet of paper, which can only offer so much insight into a patient’s actual anatomy. It would be much simpler, Aguirre said, if a surgeon could know precisely how far they’ll have to reach inside a child’s chest cavity to rebuild a detached pulmonary artery.

In a clinical trial conducted at Stanford, EchoPixel’s technology allowed radiologists and surgeons to do just that, working together to draw up a three-dimensional surgical plan with a 40 percent reduction in interpretation time.

And those surgical plans can be saved and shared, Aguirre added. “Let’s say there’s a pediatric patient with a congenital heart defect in Oklahoma and the surgeons aren’t sure if they can actually treat this patient. Using our technology, they can send the data to our expert at Stanford, and he can draw the surgical plan he feels is best for this patient and send it back to the surgeons in Oklahoma. We see this sharing of information as something that’s very, very important.”

And it could prove equally useful for training medical students, who struggle to square the flat images they see in textbooks with the real anatomy of their patients. “A liver surgeon at UCSF said, ‘I want this workstation outside the surgical suite, and I want every surgical resident to go through this model five or 10 times before they go into the operating room,’” said Ron Schilling, Ph.D., EchoPixel’s CEO.

Aguirre and Dr. Schilling hope that this software will eventually be compatible with any device with a screen, including smartphones and tablets, to facilitate quick data-sharing between doctors. The platform could even be used to help patients better understand a difficult diagnosis or upcoming surgical procedure. But most computers aren’t equipped to process 3D visualizations, so for now the software is tethered to the workstation’s high-resolution screen, which is manufactured by zSpace, a firm that specializes in holographic imaging displays.

The fact that the software still relies on proprietary hardware is a possible barrier to large-scale adoption, said Sandy Napel, Ph.D., a professor of radiology at Stanford who sits on EchoPixel’s advisory board. “If somebody came up with an app that allowed these models to float in space before the monitor that you have at your desktop, that would be amazing,” he said. “All sorts of things could then be tried and proven or disproven. But the fact that it requires hardware that not everyone has is a limitation.”

That said, Dr. Napel agreed that the technology holds great promise in areas like virtual colonoscopy, citing Dr. Yee’s work with the platform. “EchoPixel has created a way to actually float sections of the large intestine in front of the screen and allow radiologists to spin them around and look at those surfaces really efficiently. That’s an example where it could be very, very effective.”

As they navigate the uncertain terrain of widespread adoption, Aguirre and Dr. Schilling are optimistic about their prospects, and they plan to develop a version of the workstation that works without 3D glasses very soon.

Photo via EchoPixel