Last week, the Daily Dot reported on Ban Islam, a hate-fueled Facebook community page that promoted intolerance of, and violence against, the world’s Muslim population.

At the time of publication, despite user inquiries and complaints, the page remained active, as it had for the better part of a year. It was only after that article went up that a Facebook representative got in touch to say that Ban Islam violated Facebook’s hate speech policy and had been removed.

That particular guideline states:

> Content that attacks people based on their actual or perceived race, ethnicity, national origin, religion, sex, gender, sexual orientation, disability or disease is not allowed. We do, however, allow clear attempts at humor or satire that might otherwise be considered a possible threat or attack.

Yesterday, a reader contacted the Daily Dot to note that Ban Islam is back up and running. It hasn’t reconnected with all 50,000 fans of the previous incarnation, boasting only 3,500 likes as of this writing—but it’s the same page, same banner, same hateful content. There’s no mistaking it.

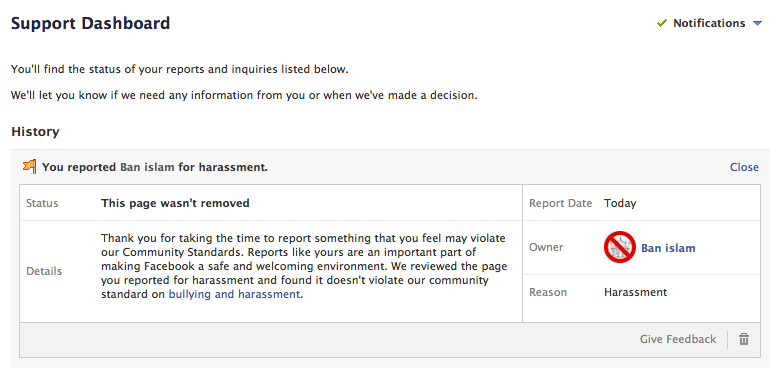

This reader had no luck in seeking the page’s re-removal, so I attempted the same myself, using the tools Facebook provides for such a complaint. Confusingly, there wasn’t an obvious option for reporting hate speech, and it seemed unlikely that comments such as “I think it shouldn’t be on Facebook” or “I just don’t like it” would be taken seriously.

I went with harassment, which was closest to the truth of the matter.

I swiftly received a reply from Facebook informing me that, following a cursory review, the page had not been removed.

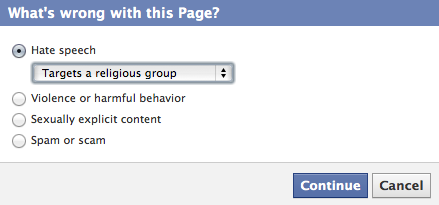

I tried again, this time clicking “I think it shouldn’t be on Facebook.” That, oddly, was what led me to the panel I had wanted in the first place, which included “Hate speech” and a drop-down menu to indicate what sort of group was being targeted.

Ban Islam can’t really tell the difference between religion and ethnicity, but “Targets a religious group” seemed the safest bet here. After all, isn’t that what had prompted Facebook to remove the page in the first place?

Apparently not, because soon enough I received an update on this complaint that was identical to the previous one in almost every respect:

> Thank you for taking the time to report something that you feel may violate our Community Standards. Reports like yours are an important part of making Facebook a safe and welcoming environment. We reviewed the page you reported for containing hate speech or symbols and found it doesn’t violate our community standard on hate speech.

Doesn’t violate the community standard on hate speech? That’s exactly the standard it violates, according to Facebook’s own representative, who, following the original Ban Islam article, sent the Daily Dot a boilerplate comment that began with the phrase “The page in question violated Facebook’s terms and has been removed.”

So: Does it violate the terms or doesn’t it? How can the same page be held to two different standards? And can ordinary Facebook users act to get rid of a page, or is it only following a press inquiry—and attendant exposure—that such pages are taken down?

Eventually, Facebook responded, but only on the condition that its answers be off the record. It was a similar boilerplate response. We’re just as confused as we were before.

Photo via Facebook